
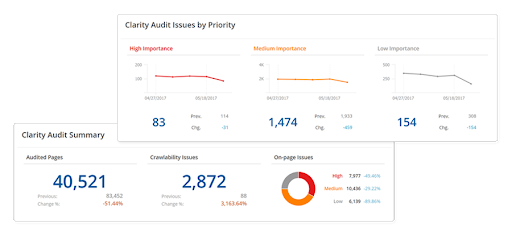

Site audits are an essential part of SEO. Regularly crawling and checking your site to ensure that it is accessible, indexable, and has all SEO elements implemented correctly can go a long way in improving the user experience and rankings.

Yet, when it comes to enterprise-level websites, the crawling process presents a unique set of challenges. The goal is to run crawls that finish within a reasonable amount of time without negatively impacting the performance of the site. In this blog, we'll provide tips on how to turn this goal into a reality.

Recommended Reading: The Best SEO Audit Checklist Template to Boost Search Visibility and Rankings

Enterprise sites are constantly being updated. To keep up with these never-ending changes and identify any issues that come up, you need to audit your site regularly. But, as previously stated, it can be difficult to run crawls on enterprise sites due to their massive number of pages and complex site architecture. Time and resource limitations, stale crawl results (i.e. if a crawl takes a long time to run, things could have changed by the time it's finished), and other challenges can make running crawls a real headache.

However, these challenges should not deter you from crawling your site regularly. They are simply obstacles that you can learn about and work around. There are many reasons why you need to crawl an enterprise site regularly.

Recommended Reading: SEO Crawlability Issues and How to Find Them

To ensure the crawl doesn't interfere with your site's operations and pulls all the relevant information you need, there are certain questions you need to ask yourself before you run your crawl.

Determining the type of crawl is one of the most important factors to consider.
When setting up a crawl, you have two types of crawl to choose from: standard or JavaScript.

Standard crawls only crawl the source of the page. This means that only the HTML on the page is crawled. This type of crawl is quick and is typically the recommended method, especially if the links on the page are not dynamically generated.

JavaScript crawls, on the other hand, wait to render the page as it would appear in a browser. They are much slower than standard crawls, so they should be used selectively. But as more and more sites have started to use JavaScript, this type of crawl may be necessary.

Google began to crawl JavaScript in 2008, so the fact that Google can crawl these pages is nothing new. The problem was that Google was not able to gather much information from JavaScript pages, which made those pages less likely to be rendered and found than plain HTML pages. Now, however, Google has evolved. Sites that use JavaScript have begun to see more pages crawled and indexed, which means that Google is improving its support for this language.

If you are not sure which type of crawl to run on your site, you can disable JavaScript and try to navigate the site and its links. Do note: sometimes JavaScript is confined to content and not links, so in that case it may be fine to set up a standard crawl. You can also check the source code and compare it to the links on the rendered page, inspect the site in Chrome, or run test crawls to determine which type of crawl is best suited for your site.

Crawl speed is typically measured in pages crawled per second and is driven by the number of simultaneous requests made to your site.
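
To see how crawl speed translates into run time, it can help to do the arithmetic before scheduling anything. The sketch below is a back-of-the-envelope estimate, not a seoClarity feature: the function name `estimate_crawl_hours` and the example figures (1,000,000 pages at 5 pages per second) are assumptions chosen for illustration, and it assumes the crawl rate stays constant.

```python
def estimate_crawl_hours(total_pages: int, pages_per_second: float) -> float:
    """Return the approximate crawl duration in hours at a constant rate."""
    if pages_per_second <= 0:
        raise ValueError("pages_per_second must be positive")
    seconds = total_pages / pages_per_second
    return seconds / 3600


if __name__ == "__main__":
    # Hypothetical site size and crawl speed, for illustration only.
    hours = estimate_crawl_hours(1_000_000, 5)
    print(f"{hours:.1f} hours")  # prints: 55.6 hours
```

At that hypothetical rate a full crawl takes more than two days, which is long enough for parts of an actively updated site to change before the results arrive.
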
We recommend fast crawls, since a longer run time means you'll have older, possibly stale, results when the crawl is complete. As such, you should crawl as fast as your site allows. A distributed crawl (i.e. a crawl that uses multiple nodes with separate IPs to run multiple parallel requests against your site) is another option.

However, it is important to know the capabilities of your site infrastructure. A crawl that runs too fast can hurt the performance of your site. Keep in mind that it is not necessary to crawl everything on your site; more on that below.

Although you can run a crawl at any time, it's best to schedule it outside of peak hours or days. Crawling your site when there is less traffic reduces the risk that the crawl will slow down the site's infrastructure. If a crawl runs during a high-traffic period and the site is negatively impacted, the network team may rate limit the crawler.

Many enterprise sites block all external crawlers. To ensure that your crawler has access to your site, you'll have to remove any potential restrictions before running the crawl.

At seoClarity, we run a fully managed crawl. This means there aren't any limits on how fast or how deep we can crawl. To ensure seamless crawling, we advise whitelisting our crawler: the number one reason we've seen crawls fail is that the crawler is not whitelisted.

It's important to know that it is not necessary to crawl every page. Dynamically generated pages change so often that the findings could be obsolete by the time the crawl finishes. That's why we recommend running sample crawls.
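
One way to picture a sample crawl is as a capped selection of URLs from each section of the site. The sketch below is only an illustration under stated assumptions: it treats the first path segment as the "section", uses the number of path segments as a rough stand-in for crawl depth (a real crawler usually counts clicks from the start page), and simply keeps the first few URLs it sees per section. The function name `sample_urls` and the `example.com` URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def sample_urls(urls, per_section=2, max_depth=4):
    """Keep at most `per_section` URLs per top-level section, skipping deep URLs.

    Assumption: depth is approximated by the number of path segments.
    """
    buckets = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        if len(segments) > max_depth:
            continue  # deeper than the configured limit
        section = segments[0] if segments else "/"
        if len(buckets[section]) < per_section:
            buckets[section].append(url)
    return dict(buckets)


if __name__ == "__main__":
    # Hypothetical URL list, e.g. taken from an XML sitemap.
    candidates = [
        "https://example.com/",
        "https://example.com/shoes/a",
        "https://example.com/shoes/b",
        "https://example.com/shoes/c",
        "https://example.com/blog/2020/02/01/post/extra",
    ]
    for section, picked in sample_urls(candidates).items():
        print(section, picked)
```
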
A sample crawl across different types of pages is generally enough to identify patterns and issues on the site. You can limit it by sub-folders, sub-domains, URL parameters, URL patterns, etc. You can also customize the depth and number of pages crawled. At seoClarity, we set 4 levels as our default depth.

You can also implement segmented crawling. This involves breaking down the site into small sections that represent the entire site, which offers a trade-off between completeness and the timeliness of the data. There will, of course, be some cases where you do want to crawl the entire site, but this depends on your specific use cases.

How to handle URL parameters is a common question, especially if your site has faceted navigation (i.e. filtering and sorting results based on product attributes). A URL parameter is information from a click that's passed on to the URL so the page knows how to behave. Parameters are typically used to filter, organize, track, and present content, but not all parameters are useful in a crawl. They can exponentially increase crawl size, so they should be optimized when setting up a crawl. For example, it is recommended to remove parameters that lead to duplicate crawling.

While you can remove all URL parameters when you crawl, this is not recommended because there are some parameters you may care about. You may have the parameters you want to crawl or ignore loaded into your Search Console. If this information is set up, you can send it to us and we can set it up for our crawl too.

As you've seen, a lot can go into setting up a crawl. You want to tailor it so that you're getting the information you need: information that will be impactful for your SEO efforts.
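
To make the URL parameter point concrete, here is a minimal sketch of stripping parameters that only create duplicate URLs while keeping the ones you care about. It is an illustration, not how Clarity Audits or any specific crawler works: the `IGNORED_PARAMS` set and the `normalize_url` helper are hypothetical, and which parameters are safe to ignore (tracking tags, sort order, and so on) is an assumption you would need to verify for your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters assumed not to change page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}


def normalize_url(url, ignored=IGNORED_PARAMS):
    """Drop ignored query parameters and sort the rest so duplicates collapse."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in ignored
    ]
    kept.sort()  # stable ordering so ?a=1&b=2 and ?b=2&a=1 match
    # The fragment is dropped because it is not sent to the server.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


if __name__ == "__main__":
    seen = set()
    for raw in [
        "https://example.com/shoes?color=red&utm_source=mail",
        "https://example.com/shoes?sort=price&color=red",
        "https://example.com/shoes?size=9&color=red",
    ]:
        seen.add(normalize_url(raw))
    print(sorted(seen))
```

In this example the first two URLs collapse into one crawl target, while the third stays separate because `size` is treated as a parameter that matters.
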
Fortunately, at seoClarity we offer our site audit tool, Clarity Audits, which is a fully managed crawl. We work with clients and support them in setting up the crawl based on their use case to get the end results they want.

Clarity Audits runs each crawled page through more than 100 technical health checks, and there are no artificial limits placed on the crawl. You have full control over every aspect of the crawl settings, including what you're crawling, the type of crawl (i.e. standard or JavaScript), the depth of the crawl, and the crawl speed.

We help you optimize and audit your site, thus helping with the overall usability of the site. Our Client Success Managers also ensure you have all of your SEO needs accounted for, so when you set up a crawl, any potential issues or roadblocks are identified and handled.

It's important to tailor a crawl to your specific situation so that you get the data you're looking for, from the pages you care about. Most enterprise sites are, after all, so large that it only makes sense to crawl what is relevant to you. Staying proactive with your crawl setup allows you to gain important insights while saving time and resources along the way. At seoClarity, we make it easy to change crawl settings to align the crawl with your unique use case.

<<Editor's Note: This piece was originally published in February 2020 and has since been updated.>>

The Challenges of Crawling Enterprise Sites

Reasons Why It's Important to Crawl Large Websites

What to Consider When Crawling Enterprise Sites

#1. Should I run a JavaScript crawl or a standard crawl?

#2. How fast should I crawl?

#3. When should I crawl?

#4. Does my website block or restrict external crawlers?

#5. What should I crawl?

#6. What about URL parameters?

How seoClarity Makes it Easy to Crawl Large Websites

Want to perform a full technical site audit but are unsure where to begin?

Use our free site audit checklist to guide you through each step of the process.

Conclusion
