
The concept of compressibility as a quality signal is not widely known, but SEOs should be aware of it. Search engines can use web page compressibility to identify duplicate pages, doorway pages with similar content, and pages with repetitive keywords, making it useful knowledge for SEO.

Although the following research paper demonstrates a successful use of on-page features for detecting spam, the deliberate lack of transparency by search engines makes it difficult to say with certainty whether search engines are applying this or similar techniques.

What Is Compressibility?

In computing, compressibility refers to how much a file (data) can be reduced in size while retaining essential information, typically to maximize storage space or to allow more data to be transmitted over the Internet.

TL;DR Of Compression

Compression replaces repeated words and phrases with shorter references, reducing the file size by significant margins. Search engines typically compress indexed web pages to maximize storage space, reduce bandwidth, and improve retrieval speed, among other reasons.

This is a simplified explanation of how compression works:

- Identify Patterns: A compression algorithm scans the text to find repeated words, patterns, and phrases.

- Shorter Codes Take Up Less Space: The codes and symbols use less storage space than the original words and phrases, which results in a smaller file size.

- Shorter References Use Fewer Bits: The “code” that essentially symbolizes the replaced words and phrases uses less data than the originals.
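The three steps above can be sketched as a toy word-substitution scheme. This is an illustration only and assumes whole words are the "patterns." Real compressors such as gzip work on byte sequences, and their DEFLATE format combines LZ77 back-references with Huffman coding. The function names `toy_compress` and `toy_decompress` are hypothetical.

```python
from collections import Counter

def toy_compress(text: str, min_count: int = 2) -> tuple[dict[str, str], list[str]]:
    """Toy illustration: swap words that repeat at least `min_count` times for short codes.

    Not how gzip works; it only mirrors the simplified steps in the article.
    """
    words = text.split()
    counts = Counter(words)
    # Step 1: identify patterns (here, simply words that repeat).
    repeated = [w for w, c in counts.most_common() if c >= min_count]
    # Step 2: give each repeated word a short code such as "#0", "#1", ...
    codebook = {w: f"#{i}" for i, w in enumerate(repeated)}
    # Step 3: emit the shorter references in place of the original words.
    encoded = [codebook.get(w, w) for w in words]
    return codebook, encoded

def toy_decompress(codebook: dict[str, str], encoded: list[str]) -> str:
    """Reverse the substitution using the same codebook."""
    reverse = {code: w for w, code in codebook.items()}
    return " ".join(reverse.get(tok, tok) for tok in encoded)

text = "best pizza chicago best pizza chicago best pizza near me"
codebook, encoded = toy_compress(text)
print(codebook)
print(" ".join(encoded))
print(toy_decompress(codebook, encoded) == text)
```

The more often a phrase repeats, the more of the text collapses into short codes. That is why keyword-stuffed pages shrink so much. A real format would also have to store the codebook and avoid collisions with words that already look like codes, and this toy ignores both.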

A bonus effect of compression is that it can also be used to identify duplicate pages, doorway pages with similar content, and pages with repetitive keywords.

Research Paper About Detecting Spam

This research paper is significant because it was authored by distinguished computer scientists known for breakthroughs in AI, distributed computing, information retrieval, and other fields.

Marc Najork

One of the co-authors of the research paper is Marc Najork, a prominent research scientist who currently holds the title of Distinguished Research Scientist at Google DeepMind. He’s a co-author of the papers for TW-BERT, has contributed research on increasing the accuracy of using implicit user feedback like clicks, and worked on creating improved AI-based information retrieval (DSI++: Updating Transformer Memory with New Documents), among many other major breakthroughs in information retrieval.

Dennis Fetterly

Another of the co-authors is Dennis Fetterly, currently a software engineer at Google. He is listed as a co-inventor on a patent for a ranking algorithm that uses links, and is known for his research in distributed computing and information retrieval.

Those are just two of the distinguished researchers listed as co-authors of the 2006 Microsoft research paper about identifying spam through on-page content features. One of the on-page content features the paper analyzes is compressibility, which the researchers discovered can be used as a classifier for indicating that a web page is spammy.

Detecting Spam Web Pages Through Content Analysis

Although the research paper was authored in 2006, its findings remain relevant today.

Then, as now, people attempted to rank hundreds or thousands of location-based web pages that were essentially duplicate content aside from city, region, or state names. Then, as now, SEOs often created web pages for search engines by excessively repeating keywords within titles, meta descriptions, headings, internal anchor text, and the content itself to improve rankings.

Section 4.6 of the research paper explains:

“Some search engines give higher value to pages containing the query keywords several times. For example, for a given query term, a page that contains it 10 times may be higher ranked than a page that contains it only once. To take advantage of such engines, some spam pages replicate their content several times in an attempt to rank higher.”

The research paper explains that search engines compress web pages and use the compressed version to reference the original web page. The researchers note that excessive amounts of redundant words result in a higher level of compressibility, so they set out to test whether there’s a correlation between a high level of compressibility and spam.

They write:

“Our approach in this section to locating redundant content within a page is to compress the page; to save space and disk time, search engines often compress web pages after indexing them, but before adding them to a page cache.

…We measure the redundancy of web pages by the compression ratio, the size of the uncompressed page divided by the size of the compressed page. We used GZIP …to compress pages, a fast and effective compression algorithm.”
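The paper’s definition maps directly onto Python’s standard `gzip` module. This minimal sketch assumes the ratio is computed over the page text encoded as UTF-8. The excerpt doesn’t say exactly which bytes the researchers compressed. The 4.0 cutoff is the one the researchers report in their results, and the function names and sample pages are hypothetical.

```python
import gzip

# Cutoff the researchers report: most sampled pages at or above this ratio were spam.
SPAM_RATIO_THRESHOLD = 4.0

def compression_ratio(page_text: str) -> float:
    """Uncompressed size divided by gzip-compressed size, per the paper's definition."""
    raw = page_text.encode("utf-8")
    return len(raw) / len(gzip.compress(raw))

def looks_redundant(page_text: str, threshold: float = SPAM_RATIO_THRESHOLD) -> bool:
    """Flag a page whose compression ratio reaches the threshold."""
    return compression_ratio(page_text) >= threshold

# Hypothetical keyword-stuffed page: the same heading and sentence repeated 100 times.
stuffed = "<h2>Cheap Hotels In Chicago</h2>\n<p>Cheap hotels in Chicago.</p>\n" * 100
# Hypothetical ordinary page with little repetition.
prose = (
    "Compression replaces repeated words and phrases with shorter references, "
    "so a page full of duplicated text shrinks far more than ordinary writing."
)

print(round(compression_ratio(stuffed), 1), looks_redundant(stuffed))
print(round(compression_ratio(prose), 1), looks_redundant(prose))
```

The gzip format adds a fixed 10-byte header and 8-byte trailer, so very short texts can score below 1.0. The ratio only becomes a meaningful signal on pages of realistic length.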

High Compressibility Correlates To Spam

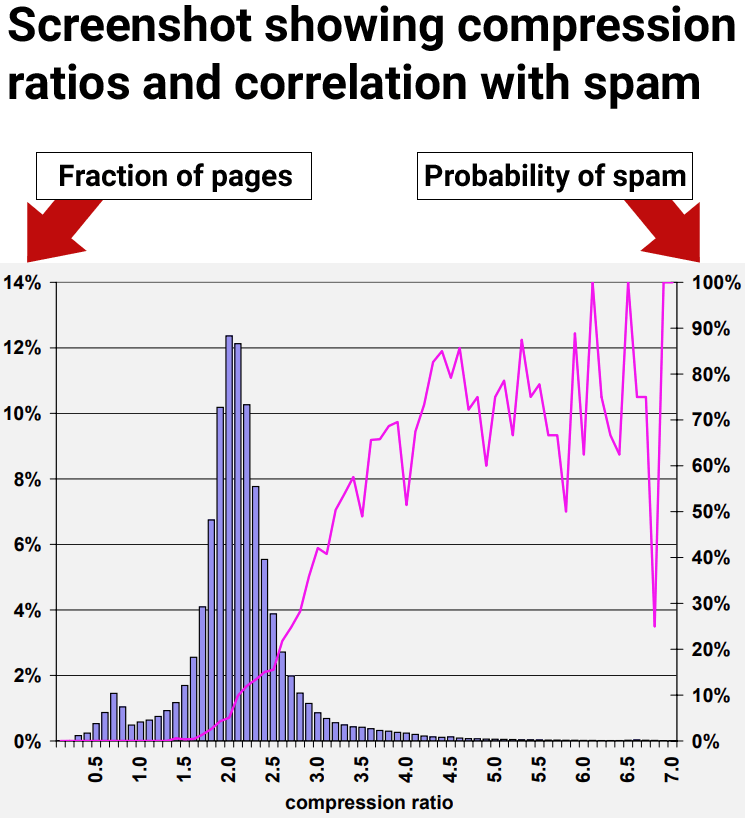

The results of the research showed that web pages with a compression ratio of at least 4.0 tended to be low-quality web pages (spam). However, at the highest levels of compressibility the results became less consistent because there were fewer data points, making them harder to interpret.

Figure 9: Prevalence of spam relative to compressibility of page.

The researchers concluded:

“70% of all sampled pages with a compression ratio of at least 4.0 were judged to be spam.”

But they also discovered that using the compression ratio by itself still resulted in false positives, where non-spam pages were incorrectly identified as spam:

“The compression ratio heuristic described in Section 4.6 fared best, correctly identifying 660 (27.9%) of the spam pages in our collection, while misidentifying 2,068 (12.0%) of all judged pages.

Using all of the aforementioned features, the classification accuracy after the ten-fold cross validation process is encouraging:

95.4% of our judged pages were classified correctly, while 4.6% were classified incorrectly.

More specifically, for the spam class 1,940 out of the 2,364 pages were classified correctly. For the non-spam class, 14,440 out of the 14,804 pages were classified correctly. Consequently, 788 pages were classified incorrectly.”
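The figures in this quote can be checked with a few lines of arithmetic. Every number comes from the quote itself, and the variable names are only illustrative.

```python
# Counts quoted from the paper's ten-fold cross-validation results.
spam_total, spam_correct = 2_364, 1_940
ham_total, ham_correct = 14_804, 14_440   # "ham" = non-spam pages

judged = spam_total + ham_total
misclassified = (spam_total - spam_correct) + (ham_total - ham_correct)
accuracy = (spam_correct + ham_correct) / judged
spam_recall = spam_correct / spam_total   # share of spam pages the classifier caught
spam_share = spam_total / judged          # share of judged pages that are spam

# Compression-ratio heuristic on its own: 660 spam pages caught.
heuristic_recall = 660 / spam_total

print(f"misclassified: {misclassified}")
print(f"accuracy: {accuracy:.1%}")
print(f"spam recall (combined features): {spam_recall:.1%}")
print(f"spam recall (compression ratio alone): {heuristic_recall:.1%}")
print(f"spam share of judged pages: {spam_share:.1%}")
```

The totals are consistent: 424 missed spam pages plus 364 misflagged non-spam pages gives the 788 errors, and 16,380 of 17,168 judged pages correct gives 95.4%. The spam share of about 13.8% matches the figure the researchers cite for their data set. The spam-class recall implied by these counts is about 82.1%, which is not the same as the 86.2% in the paper’s conclusion. The excerpts here don’t say which classifier configuration produced each figure.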

The next section describes an interesting discovery about how to increase the accuracy of on-page signals for identifying spam.

Insight Into Quality Rankings

The research paper examined multiple on-page signals, including compressibility. The researchers discovered that each individual signal (classifier) was able to find some spam, but that relying on any one signal on its own resulted in flagging non-spam pages as spam, which are commonly referred to as false positives.

The researchers made an important discovery that everyone interested in SEO should know: using multiple classifiers increased the accuracy of detecting spam and decreased the likelihood of false positives. Just as important, the compressibility signal only identifies one kind of spam, not the full range of spam.

The takeaway is that compressibility is a good way to identify one kind of spam, but there are other kinds of spam that aren’t caught with this one signal.

This is the part that every SEO and publisher should be aware of:

“In the previous section, we presented a number of heuristics for assaying spam web pages. That is, we measured several characteristics of web pages, and found ranges of those characteristics which correlated with a page being spam. Nevertheless, when used individually, no method uncovers most of the spam in our data set without flagging many non-spam pages as spam.

For example, considering the compression ratio heuristic described in Section 4.6, one of our most promising methods, the average probability of spam for ratios of 4.2 and higher is 72%. But only about 1.5% of all pages fall in this range. This number is far below the 13.8% of spam pages that we identified in our data set.”
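A rough calculation shows why the researchers call this coverage too low. The inputs are the approximate figures from the quote ("about 1.5%"), so the result is only a back-of-the-envelope estimate, and the variable names are illustrative.

```python
# Approximate figures quoted for the compression-ratio heuristic.
share_of_pages_in_range = 0.015   # about 1.5% of all pages have a ratio of 4.2 or higher
spam_probability_in_range = 0.72  # 72% of those pages are spam
spam_share_overall = 0.138        # 13.8% of all judged pages are spam

# Fraction of ALL pages that are both spam and inside the flagged range (~0.0108).
caught = share_of_pages_in_range * spam_probability_in_range
# Fraction of the spam population that the heuristic catches (~0.078).
coverage = caught / spam_share_overall

print(f"{coverage:.1%} of spam pages fall in the 4.2+ range")
```

Even though the signal is right about 72% of the time inside its range, it reaches fewer than one in ten of the spam pages in the data set.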

So, even though compressibility was one of the better signals for identifying spam, it was still unable to uncover the full range of spam within the dataset the researchers used to test the signals.

Combining Multiple Signals

The above results indicated that individual signals of low quality are less accurate, so the researchers tested using multiple signals. They discovered that combining multiple on-page signals for detecting spam resulted in a better accuracy rate, with fewer pages misclassified as spam.

The researchers explained that they tested the use of multiple signals:

“One way of combining our heuristic methods is to view the spam detection problem as a classification problem. In this case, we want to create a classification model (or classifier) which, given a web page, will use the page’s features jointly in order to (correctly, we hope) classify it in one of two classes: spam and non-spam.”
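The idea of using features jointly can be sketched with a simple vote across several per-page signals. This is not the paper’s method. The researchers trained a C4.5 decision tree on many features. This sketch uses a hand-written rule that flags a page only when at least two signals agree. Apart from the 4.0 compression ratio, the features, thresholds, and names are hypothetical placeholders.

```python
import gzip

def compression_ratio(text: str) -> float:
    """Uncompressed size divided by gzip-compressed size."""
    raw = text.encode("utf-8")
    return len(raw) / len(gzip.compress(raw))

def page_features(text: str) -> dict[str, float]:
    """Compute a few per-page features (hypothetical feature set)."""
    words = text.split()
    return {
        "compression_ratio": compression_ratio(text),
        "word_count": float(len(words)),
        "avg_word_length": sum(map(len, words)) / len(words) if words else 0.0,
    }

# One rule per feature. Only the 4.0 ratio comes from the paper; the rest are placeholders.
RULES = {
    "compression_ratio": lambda v: v >= 4.0,
    "word_count": lambda v: v >= 1_000,
    "avg_word_length": lambda v: v >= 6.0,
}

def classify(text: str, min_votes: int = 2) -> str:
    """Label a page spam only if at least `min_votes` individual signals fire."""
    feats = page_features(text)
    votes = sum(rule(feats[name]) for name, rule in RULES.items())
    return "spam" if votes >= min_votes else "non-spam"

pages = {
    "doorway": "cheap hotels chicago " * 500,
    "ordinary": "The quick brown fox jumps over the lazy dog while the farmer watches from the porch.",
    "short repeat": "buy now " * 50,
}
for label, page in pages.items():
    print(label, classify(page))
```

The "short repeat" page trips the compression signal but nothing else, so with `min_votes=2` it is not flagged. Requiring agreement between signals is what reduces the false positives a single signal produces. A learned model such as a decision tree would choose the features, thresholds, and how to combine them from labeled data instead of fixing them by hand.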

These are their conclusions about using multiple signals:

“We have studied various aspects of content-based spam on the web using a real-world data set from the MSNSearch crawler. We have presented a number of heuristic methods for detecting content based spam. Some of our spam detection methods are more effective than others, however when used in isolation our methods may not identify all of the spam pages. For this reason, we combined our spam-detection methods to create a highly accurate C4.5 classifier. Our classifier can correctly identify 86.2% of all spam pages, while flagging very few legitimate pages as spam.”

Key Insight:

Misidentifying “very few legitimate pages as spam” was a significant breakthrough. The important insight that everyone involved with SEO should take away from this is that one signal by itself can result in false positives, while using multiple signals increases accuracy.

This means that SEO tests of isolated ranking or quality signals will not yield reliable results that can be trusted for making strategy or business decisions.

Takeaways

We don’t know for certain whether search engines use compressibility, but it’s an easy-to-use signal that, combined with others, could be used to catch simple kinds of spam like thousands of city-name doorway pages with similar content. Even if the search engines don’t use this signal, it shows how easy it is to catch that kind of search engine manipulation, and that it’s something search engines are well able to handle today.

Here are the key points of this article to keep in mind:

- Doorway pages with duplicate content are easy to catch because they compress at a higher ratio than normal web pages.

- Groups of web pages with a compression ratio above 4.0 were predominantly spam.

- Negative quality signals used by themselves to catch spam can lead to false positives.

- In this particular test, the researchers discovered that on-page negative quality signals only catch specific types of spam.

- When used alone, the compressibility signal only catches redundancy-type spam, fails to detect other forms of spam, and leads to false positives.

- Combining quality signals improves spam detection accuracy and reduces false positives.

- Search engines today have a higher accuracy of spam detection with the use of AI like SpamBrain.

Read the research paper, which is linked from the Google Scholar page of Marc Najork:

Detecting spam web pages through content analysis

Featured Image by Shutterstock/pathdoc

