Google’s John Mueller was asked on an SEO Office Hours podcast whether blocking the crawl of a webpage has the effect of cancelling the “linking power” of either internal or external links. His answer suggested an unexpected way of looking at the issue and offers an insight into how Google Search internally approaches this and other situations.

About The Power Of Links

There are many ways to think of links, but in terms of internal links, the one that Google consistently talks about is the use of internal links to tell Google which pages are the most important.

Google hasn’t come out with any patents or research papers lately about how it uses external links for ranking web pages, so pretty much everything SEOs know about external links is based on old information that may be out of date by now.

What John Mueller said doesn’t add anything to our understanding of how Google uses inbound links or internal links, but it does offer a different way to think about them that, in my opinion, is more useful than it appears to be at first glance.

Impact On Links From Blocking Indexing

The person asking the question wanted to know if blocking Google from crawling a web page affected how internal and inbound links are used by Google.

This is the question:

“Does blocking crawl or indexing on a URL cancel the linking power from external and internal links?”

Mueller suggests finding an answer to the question by thinking about how a user would respond to it. That is a curious answer, but it also contains an interesting insight.

He answered:

“I’d look at it like a user would. If a page is not available to them, then they wouldn’t be able to do anything with it, and so any links on that page would be somewhat irrelevant.”

The above aligns with what we know about the relationship between crawling, indexing and links. If Google can’t crawl a page, then Google won’t see the links on it, and so those links will have no effect.
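To make the crawl-blocking case concrete, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. The robots.txt rules, the example.com URLs and the `crawl_allowed` helper are hypothetical. The sketch only checks what the rules permit and is not how Google’s crawler is implemented.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, made up for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def crawl_allowed(url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the example rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())  # parse rules from lines, no network fetch
    return parser.can_fetch(user_agent, url)

if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/private/page"):
        # A blocked page is never fetched, so any links in its HTML stay unseen.
        print(url, "crawlable" if crawl_allowed(url) else "blocked")
```

Run as a script, it reports the blog post as crawlable and the page under /private/ as blocked. A crawler that honors these rules never downloads the blocked page, so it has no way to read the links on it.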

Keyword Versus User-Based Perspective On Links

Mueller’s suggestion to look at it the way a user would is interesting because it’s not how most people would approach a link-related question. But it makes sense, because if you block a person from seeing a web page, then they wouldn’t be able to see the links, right?

What about external links? A long, long time ago I saw a paid link for a printer ink website that was on a marine biology web page about octopus ink. Link builders at the time thought that if a web page had words in it that matched the target page (octopus “ink” to printer “ink”), then Google would use that link to rank the page because the link was on a “relevant” web page.

As dumb as that sounds today, a lot of people believed in that “keyword-based” approach to understanding links, as opposed to the user-based approach that John Mueller is suggesting. Looked at from a user-based perspective, understanding links becomes a lot easier and most likely aligns better with how Google ranks links than the old-fashioned keyword-based approach.

Optimize Links By Making Them Crawlable

Mueller continued his answer by emphasizing the importance of making pages discoverable with links.

He explained:

“If you want a page to be easily discovered, make sure it’s linked to from pages that are indexable and relevant within your website. It’s also fine to block indexing of pages that you don’t want discovered, that’s ultimately your decision, but if there’s an important part of your website only linked from the blocked page, then it will make search much harder.”
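Mueller’s point about an important section that is linked only from a blocked page can be illustrated with a toy crawl. The Python sketch below runs a breadth-first crawl over a hypothetical internal link graph. `LINKS`, `BLOCKED` and `discover` are made up for illustration and do not model any real search engine. A blocked page’s URL can still become known through links pointing to it, but its outgoing links are never read, so the /guides/ section that only it links to is never found.

```python
from collections import deque

# Hypothetical internal link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/blog/", "/members/"],
    "/blog/": ["/blog/post-1"],
    "/blog/post-1": ["/"],
    "/members/": ["/guides/"],  # the only link to the /guides/ section
    "/guides/": ["/guides/setup"],
    "/guides/setup": [],
}

# Hypothetical set of pages the crawler is not allowed to fetch.
BLOCKED = {"/members/"}

def discover(start: str = "/") -> set[str]:
    """Breadth-first crawl that never reads the links on blocked pages."""
    found = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if page in BLOCKED:
            continue  # the URL is known, but its outgoing links are never seen
        for target in LINKS.get(page, []):
            if target not in found:
                found.add(target)
                queue.append(target)
    return found

if __name__ == "__main__":
    print(sorted(discover()))  # ['/', '/blog/', '/blog/post-1', '/members/']
```

Adding a link to /guides/ from an indexable page such as / would make the whole section discoverable again, which is the remedy Mueller describes.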

About Crawl Blocking

A final word about blocking search engines from crawling web pages. A surprisingly common mistake that I see some site owners make is using the robots meta directive to tell Google not to index a web page but to crawl the links on that page.

The (erroneous) directive looks like this:

<meta name="robots" content="noindex" "follow">

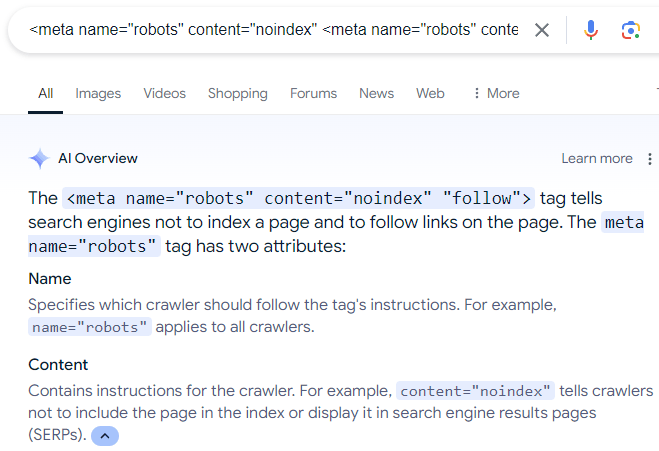

There is a lot of misinformation online that recommends the above meta directive, and it is even reflected in Google’s AI Overviews:

Screenshot Of AI Overviews

Of course, the above robots directive does not work because, as Mueller explains, if a user (or search engine) can’t see a web page, then the user (or search engine) can’t follow the links that are on that page.

Also, while there is a “nofollow” directive that can be used to make a search engine crawler ignore the links on a web page, there is no “follow” directive that forces a search engine crawler to crawl all the links on a web page. Following links is a default behavior that each search engine decides on for itself.
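The asymmetry between “nofollow” and “follow” can be sketched in a few lines of Python. This is a simplified model, not Google’s implementation. It only parses the comma-separated `content` value of a robots meta tag (for example `content="noindex, nofollow"`), and the function names are hypothetical. It treats “none” as shorthand for “noindex, nofollow”, as Google’s robots meta tag documentation describes.

```python
from typing import Optional, Set

def robots_tokens(content: Optional[str]) -> Set[str]:
    """Split a robots meta content value such as "noindex, nofollow" into lowercase tokens."""
    if not content:
        return set()
    return {token.strip().lower() for token in content.split(",") if token.strip()}

def blocks_link_following(content: Optional[str]) -> bool:
    """True only when a token switches link following off.

    Following is the default, so no token can switch it on;
    a "follow" token is simply redundant with that default.
    """
    return bool({"nofollow", "none"} & robots_tokens(content))  # "none" = noindex, nofollow

if __name__ == "__main__":
    for value in (None, "noindex", "noindex, follow", "nofollow", "none"):
        print(repr(value), "->", "links ignored" if blocks_link_following(value) else "default")
```

Because “noindex” and “noindex, follow” produce the same result here, adding “follow” changes nothing at the level of the directive itself. The sketch makes no claim about how a search engine treats the links on a noindexed page over time.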

Read more about robots meta tags.

Listen to John Mueller answer the question from the 14:45 minute mark of the podcast:

Featured Image by Shutterstock/ShotPrime Studio