
Many are aware of the popular Chain of Thoughts (CoT) method of prompting generative AI in order to get better and more sophisticated responses. Researchers from Google DeepMind and Princeton University developed an improved prompting strategy called Tree of Thoughts (ToT) that takes prompting to a higher level of results, unlocking more sophisticated reasoning methods and better outputs.

The researchers explain:

“We show how deliberate search in trees of thoughts (ToT) produces better results, and more importantly, interesting and promising new ways to use language models to solve problems requiring search or planning.”

Researchers Compare Against Three Kinds Of Prompting

The research paper compares ToT against three other prompting strategies.

1. Input-output (IO) Prompting

This is basically giving the language model a problem to solve and getting the answer.

An example based on text summarization is:

Input Prompt: Summarize the following article.

Output Prompt: Summary based on the article that was input

2. Chain Of Thought Prompting

This form of prompting is where a language model is guided to generate coherent and connected responses by encouraging it to follow a logical sequence of thoughts. Chain-of-Thought (CoT) Prompting is a way of guiding a language model through the intermediate reasoning steps to solve problems.

Chain Of Thought Prompting Example:

Question: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

Reasoning: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11. The answer: 11

Question: The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?
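The example above can be assembled programmatically. The following is a minimal sketch, not a method from the paper: the function name `build_cot_prompt` and the "Question:/Reasoning:" layout are assumptions made for illustration. It joins a worked example with the new question and leaves the reasoning open for the model to continue.

```python
# Hypothetical helper: assemble a few-shot chain-of-thought prompt.
# The function name and the "Question:/Reasoning:" layout are assumptions.

def build_cot_prompt(exemplars, new_question):
    """Join worked (question, reasoning) pairs, then append the open question."""
    parts = []
    for question, reasoning in exemplars:
        parts.append(f"Question: {question}\nReasoning: {reasoning}")
    # Leave "Reasoning:" open so the model continues with its own steps.
    parts.append(f"Question: {new_question}\nReasoning:")
    return "\n\n".join(parts)


exemplars = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
        "6 tennis balls. 5 + 6 = 11. The answer: 11",
    )
]

prompt = build_cot_prompt(
    exemplars,
    "The cafeteria had 23 apples. If they used 20 to make lunch and "
    "bought 6 more, how many apples do they have?",
)
print(prompt)
```

The worked example shows the model the style of step by step reasoning that is expected before it states an answer.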

3. Self-consistency with CoT

In simple terms, this is a prompting strategy of prompting the language model multiple times then choosing the most commonly arrived at answer.

The research paper on Self-consistency with CoT from March 2023 explains it:

“It first samples a diverse set of reasoning paths instead of only taking the greedy one, and then selects the most consistent answer by marginalizing out the sampled reasoning paths. Self-consistency leverages the intuition that a complex reasoning problem typically admits multiple different ways of reasoning leading to its unique correct answer.”
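The idea of sampling several times and keeping the most common answer can be sketched in a few lines. This is an illustrative sketch only: `self_consistent_answer` and `make_fake_sampler` are hypothetical names, the sampler replays canned answers instead of calling a real language model, and a plain majority vote is used as one simple reading of "marginalizing out" the reasoning paths.

```python
from collections import Counter


def self_consistent_answer(sample_fn, prompt, n_samples=5):
    """Sample several answers and return the most common one with its vote count."""
    answers = [sample_fn(prompt) for _ in range(n_samples)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes


def make_fake_sampler(canned):
    """Stand-in for a language model call (assumption): replays canned answers."""
    remaining = iter(canned)
    return lambda prompt: next(remaining)


# Five imagined final answers to the cafeteria question, one per sampled path.
sampler = make_fake_sampler(["9", "9", "8", "9", "3"])
answer, votes = self_consistent_answer(
    sampler, "How many apples does the cafeteria have?", n_samples=5
)
print(answer, votes)
```

In practice each sample would come from the same CoT prompt run with some randomness, so that the reasoning paths differ while the correct answer tends to recur.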

Dual Process Models in Human Cognition

The researchers take inspiration from a theory of human decision-making called dual process models in human cognition, or dual process theory.

Dual process models in human cognition propose that humans engage in two kinds of decision-making processes, one that is intuitive and fast and another that is more deliberative and slower.

- Fast, Automatic, Unconscious

This mode involves fast, automatic, and unconscious reasoning that’s often said to be based on intuition.

- Slow, Deliberate, Conscious

This mode of decision-making is a slow, deliberate, and conscious reasoning process that involves careful consideration, analysis, and step by step reasoning before settling on a final decision.

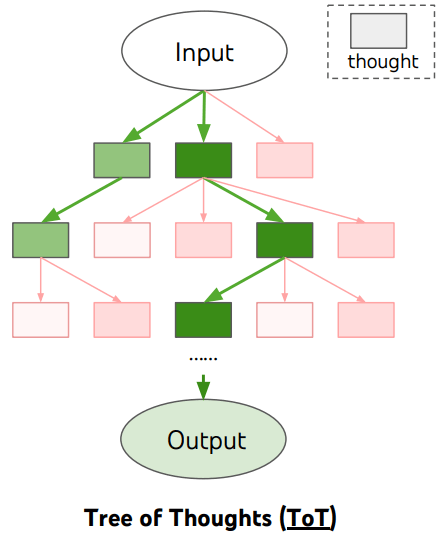

The Tree of Thoughts (ToT) prompting framework uses a tree structure of each step of the reasoning process that allows the language model to evaluate each reasoning step and decide whether or not that step in the reasoning is viable and leads to an answer. If the language model decides that the reasoning path will not lead to an answer, the prompting strategy requires it to abandon that path (or branch) and keep moving forward with another branch, until it reaches the final result.
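The branch-and-evaluate loop described above can be sketched as a small breadth-first search. This is a hedged illustration, not the researchers' implementation: the function `tree_of_thoughts`, its parameters, and the toy number puzzle are all assumptions, and the proposer and evaluator are plain Python functions where a real system would call a language model.

```python
def tree_of_thoughts(root, propose, evaluate, is_solution,
                     beam_width=2, max_depth=4):
    """Breadth-first search over partial solutions ("thoughts").

    propose(state)     -> list of candidate next states
    evaluate(state)    -> score, or None if the branch is judged a dead end
    is_solution(state) -> True when the state is a finished answer
    """
    frontier = [root]
    for _ in range(max_depth):
        candidates = []
        for state in frontier:
            for child in propose(state):
                if is_solution(child):
                    return child
                score = evaluate(child)
                if score is not None:  # abandon branches judged not viable
                    candidates.append((score, child))
        # Keep only the most promising branches for the next step.
        candidates.sort(key=lambda pair: pair[0], reverse=True)
        frontier = [child for _, child in candidates[:beam_width]]
        if not frontier:
            return None
    return None


# Toy problem (assumption): reach TARGET from 1 using "+1" or "*2" steps.
TARGET = 10


def propose(state):
    value, path = state
    return [(value + 1, path + ("+1",)), (value * 2, path + ("*2",))]


def evaluate(state):
    value, _ = state
    if value > TARGET:  # overshooting can never be repaired: dead end
        return None
    return -abs(TARGET - value)  # closer to the target scores higher


def is_solution(state):
    return state[0] == TARGET


result = tree_of_thoughts((1, ()), propose, evaluate, is_solution,
                          beam_width=2, max_depth=6)
print(result)
```

The `beam_width` setting controls how many branches survive each step, which is the trade-off between exploring more alternatives and spending more model calls.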

Tree Of Thoughts (ToT) Versus Chain of Thoughts (CoT)

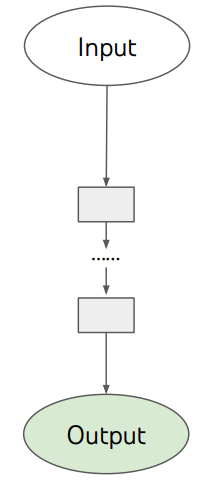

The difference between ToT and CoT is that ToT has a tree and branch framework for the reasoning process whereas CoT takes a more linear path.

In simple terms, CoT tells the language model to follow a series of steps in order to accomplish a task, which resembles the system 1 cognitive model that is fast and automatic.

ToT resembles the system 2 cognitive model that is more deliberative. It tells the language model to follow a series of steps but also to have an evaluator step in and review each step: if it’s a good step, keep going, and if not, stop and follow another path.

Illustrations Of Prompting Strategies

The research paper published schematic illustrations of each prompting strategy, with rectangular boxes that represent a “thought” within each step toward completing the task, solving a problem.

The following is a screenshot of what the reasoning process for ToT looks like:

Illustration of Chain of Thought Prompting

This is the schematic illustration for CoT, showing how the thought process is more of a straight path (linear):

The research paper explains:

“Research on human problem-solving suggests that people search through a combinatorial problem space – a tree where the nodes represent partial solutions, and the branches correspond to operators that modify them. Which branch to take is determined by heuristics that help to navigate the problem-space and guide the problem-solver towards a solution.

This perspective highlights two key shortcomings of existing approaches that use LMs to solve general problems:

1) Locally, they do not explore different continuations within a thought process – the branches of the tree.

2) Globally, they do not incorporate any type of planning, lookahead, or backtracking to help evaluate these different options – the kind of heuristic-guided search that seems characteristic of human problem-solving.

To address these shortcomings, we introduce Tree of Thoughts (ToT), a paradigm that allows LMs to explore multiple reasoning paths over thoughts…”

Tested With A Mathematical Game

The researchers tested the method using a Game of 24 math game. Game of 24 is a mathematical card game where players use four numbers (that can only be used once) from a set of cards to combine them using basic arithmetic (addition, subtraction, multiplication, and division) to achieve a result of 24.
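To make the rules concrete, here is a small brute-force checker for the game. It is a sketch written for this article, not code from the paper, and it does not use a language model: `solve_24` and `_search` are hypothetical names, and the input numbers are chosen only for illustration. It repeatedly combines two remaining numbers with one of the four operations until a single value is left.

```python
from fractions import Fraction
from itertools import combinations


def solve_24(numbers, target=24):
    """Return one expression that reaches the target, or None if none exists."""
    # Fractions keep division exact, so 8 / 3 * 9 is exactly 24.
    items = [(Fraction(n), str(n)) for n in numbers]
    return _search(items, Fraction(target))


def _search(items, target):
    if len(items) == 1:
        value, expr = items[0]
        return expr if value == target else None
    # Pick any two remaining numbers, combine them, and recurse on the rest.
    for i, j in combinations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        options = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        if b != 0:
            options.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            options.append((b / a, f"({eb} / {ea})"))
        for value, expr in options:
            found = _search(rest + [(value, expr)], target)
            if found is not None:
                return found
    return None


expression = solve_24([4, 9, 10, 13])
print(expression)
print(solve_24([1, 1, 1, 1]))  # four ones cannot reach 24
```

Each intermediate combination in this search plays the role of a partial solution, which is why the game suits a tree-shaped reasoning process.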

Results and Conclusions

The researchers tested the ToT prompting strategy against the three other approaches and found that it produced consistently better results.

However, they also note that ToT may not be necessary for completing tasks that GPT-4 already does well at.

They conclude:

“The associative “System 1” of LMs can be beneficially augmented by a “System 2” based on searching a tree of possible paths to the solution to a problem.

The Tree of Thoughts framework provides a way to translate classical insights about problem-solving into actionable methods for modern LMs.

At the same time, LMs address a weakness of these classical methods, providing a way to solve complex problems that are not easily formalized, such as creative writing.

We see this intersection of LMs with classical approaches to AI as an exciting direction.”

Read the original research paper:

Tree of Thoughts: Deliberate Problem Solving with Large Language Models

Featured Image by Shutterstock/Asier Romero
