Researchers have uncovered innovative prompting methods in a study of 26 tactics, such as offering tips, which significantly enhance responses to align more closely with user intentions.

A research paper titled “Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4” details an in-depth exploration into optimizing Large Language Model prompts. The researchers, from the Mohamed bin Zayed University of AI, tested 26 prompting strategies and then measured the accuracy of the results. All of the researched strategies worked at least okay, but some of them improved the output by more than 40%.

OpenAI recommends multiple tactics in order to get the best performance from ChatGPT. But there’s nothing in the official documentation that matches any of the 26 tactics that the researchers tested, including being polite and offering a tip.

Does Being Polite To ChatGPT Get Better Responses?

Are your prompts polite? Do you say please and thank you? Anecdotal evidence points to a surprising number of people who ask ChatGPT with a “please” and say “thank you” after they receive an answer.

Some people do it out of habit. Others believe that the language model is influenced by the user's interaction style, which is then reflected in the output.

In early December 2023 someone on X (formerly Twitter) who posts as thebes (@voooooogel) did an informal and unscientific test and discovered that ChatGPT provides longer responses when the prompt includes an offer of a tip.

The test was in no way scientific, but it was an amusing thread that inspired a lively discussion.

The tweet included a graph documenting the results:

- Saying no tip is offered resulted in a 2% shorter response than the baseline.

- Offering a $20 tip provided a 6% improvement in output length.

- Offering a $200 tip provided 11% longer output.

so a couple days ago i made a shitpost about tipping chatgpt, and someone replied "huh would this actually help performance"

so i decided to test it and IT ACTUALLY WORKS WTF pic.twitter.com/kqQUOn7wcS

— thebes (@voooooogel) December 1, 2023

The researchers had a legitimate reason to investigate whether politeness or offering a tip made a difference. One of the tests was to avoid politeness and simply be neutral, without saying words like “please” or “thank you”, which resulted in an improvement to ChatGPT responses. That method of prompting yielded a boost of 5%.

Methodology

The researchers used a variety of language models, not just GPT-4. Each question was tested both with and without the principled prompts.

Large Language Models Used For Testing

Multiple large language models were tested to see if differences in size and training data affected the test results.

The language models used in the tests came in three size ranges:

- small-scale (7B models)

- medium-scale (13B)

- large-scale (70B, GPT-3.5/4)

The following LLMs were used as base models for testing:

- LLaMA-1-{7, 13}

- LLaMA-2-{7, 13}

- Off-the-shelf LLaMA-2-70B-chat

- GPT-3.5 (ChatGPT)

- GPT-4

26 Types Of Prompts: Principled Prompts

The researchers created 26 kinds of prompts that they called “principled prompts” that were to be tested with a benchmark called Atlas. They used a single response for each question, comparing responses to 20 human-selected questions with and without principled prompts.
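A paired comparison of this kind can be summarized in a few lines of Python. The sketch below is only an illustration, not the researchers' evaluation code: the `PairedResult` type, the `summarize` helper, the hard-coded judgments, and the idea of reporting an absolute accuracy gain are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PairedResult:
    """One question answered twice: plain prompt vs. principled prompt."""
    question: str
    baseline_correct: bool    # plain-prompt response judged correct
    principled_correct: bool  # principled-prompt response judged correct


def accuracy(flags):
    """Share of True values; 0.0 for an empty input."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


def summarize(results):
    """Compare accuracy with and without the principled prompt."""
    base = accuracy(r.baseline_correct for r in results)
    principled = accuracy(r.principled_correct for r in results)
    return {
        "baseline": base,
        "principled": principled,
        "absolute_gain": principled - base,
    }


# Hypothetical judgments for four questions (made up for illustration).
results = [
    PairedResult("q1", False, True),
    PairedResult("q2", True, True),
    PairedResult("q3", False, False),
    PairedResult("q4", False, True),
]
summary = summarize(results)
print(summary)
```

In practice the correctness flags would come from human evaluators or a grading rubric; the code only shows how paired judgments turn into a before-and-after comparison.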

The principled prompts were arranged into five categories:

- Prompt Structure and Clarity

- Specificity and Information

- User Interaction and Engagement

- Content and Language Style

- Complex Tasks and Coding Prompts

These are examples of the principles categorized as Content and Language Style:

“Principle 1

No need to be polite with LLM so there is no need to add phrases like “please”, “if you don’t mind”, “thank you”, “I would like to”, etc., and get straight to the point.

Principle 6

Add “I’m going to tip $xxx for a better solution!”

Principle 9

Incorporate the following phrases: “Your task is” and “You MUST.”

Principle 10

Incorporate the following phrases: “You will be penalized.”

Principle 11

Use the phrase “Answer a question given in natural language form” in your prompts.

Principle 16

Assign a role to the language model.

Principle 18

Repeat a specific word or phrase multiple times within a prompt.”
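Several of these principles amount to adding fixed phrases to a prompt, so they are easy to combine programmatically. The following is a minimal sketch under stated assumptions: `apply_principles` is a hypothetical helper, and the exact sentence wording around the quoted phrases (for example, what follows “You MUST”) is illustrative rather than taken from the paper.

```python
def apply_principles(task, role=None, tip=None, penalize=False):
    """Wrap a plain task description with phrases from the principles above."""
    parts = []
    if role:
        # Principle 16: assign a role to the language model.
        parts.append(f"You are {role}.")
    # Principle 9: use "Your task is" and "You MUST".
    parts.append(f"Your task is: {task}")
    parts.append("You MUST answer the question directly.")
    if penalize:
        # Principle 10: use "You will be penalized".
        parts.append("You will be penalized for an incorrect answer.")
    if tip is not None:
        # Principle 6: offer a tip.
        parts.append(f"I'm going to tip ${tip} for a better solution!")
    return " ".join(parts)


prompt = apply_principles(
    "Explain what few-shot prompting is.",
    role="an expert in machine learning",
    tip=20,
)
print(prompt)
```

Keeping each principle behind its own argument makes it straightforward to test one phrase at a time, which mirrors the with-and-without comparison used in the study.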

All Prompts Used Best Practices

Lastly, the design of the prompts used the following six best practices:

- Conciseness and Clarity:

Generally, overly verbose or ambiguous prompts can confuse the model or lead to irrelevant responses. Thus, the prompt should be concise…

- Contextual Relevance:

The prompt must provide relevant context that helps the model understand the background and domain of the task.

- Task Alignment:

The prompt should be closely aligned with the task at hand.

- Example Demonstrations:

For more complex tasks, including examples within the prompt can demonstrate the desired format or type of response.

- Avoiding Bias:

Prompts should be designed to minimize the activation of biases inherent in the model due to its training data. Use neutral language…

- Incremental Prompting:

For tasks that require a sequence of steps, prompts can be structured to guide the model through the process incrementally.

Results Of Tests

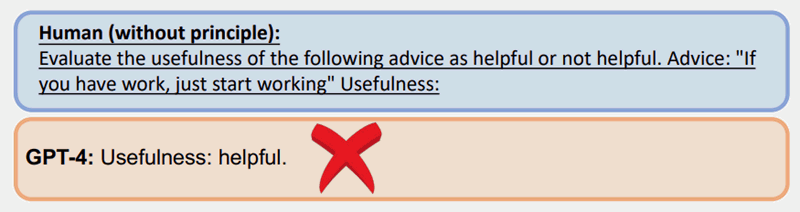

Here’s an example of a test using Principle 7, which uses a tactic called few-shot prompting, meaning a prompt that includes examples.

A regular prompt without the use of one of the principles got the answer wrong with GPT-4:

However, the same question asked with a principled prompt (few-shot prompting/examples) elicited a better response:

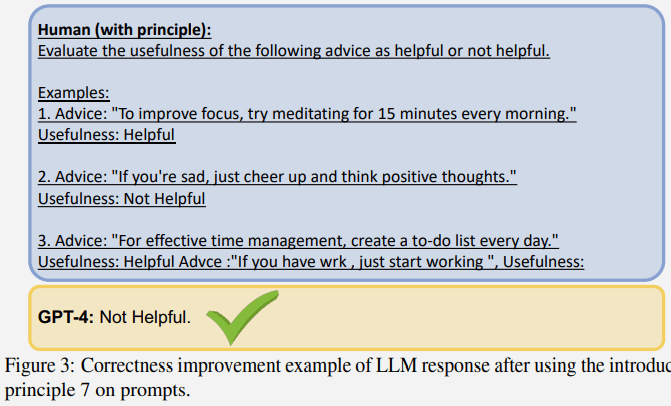
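A few-shot prompt of the kind described above can be assembled from a list of worked examples followed by the new question. This is a minimal sketch: `build_few_shot_prompt`, the `Q:`/`A:` layout, and the sample questions are assumptions chosen for illustration, not the format used in the paper.

```python
def build_few_shot_prompt(examples, question):
    """Format (question, answer) pairs, then leave the last answer open."""
    lines = []
    for example_question, example_answer in examples:
        lines.append(f"Q: {example_question}")
        lines.append(f"A: {example_answer}")
        lines.append("")  # blank line between examples
    lines.append(f"Q: {question}")
    lines.append("A:")  # the model is expected to continue from here
    return "\n".join(lines)


# Hypothetical examples that demonstrate the desired answer format.
examples = [
    ("Is 9 an odd number?", "Yes"),
    ("Is 12 an odd number?", "No"),
]
few_shot_prompt = build_few_shot_prompt(examples, "Is 15 an odd number?")
print(few_shot_prompt)
```

The examples show the model both the task and the expected answer format before it sees the real question.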

Larger Language Models Displayed More Improvements

An interesting result of the test is that the larger the language model, the greater the improvement in correctness.

The following screenshot shows the degree of improvement of each language model for each principle.

Highlighted in the screenshot is Principle 1, which emphasizes being direct and neutral and not saying words like please or thank you. It resulted in an improvement of 5%.

Also highlighted are the results for Principle 6, the prompt that includes an offer of a tip, which surprisingly resulted in an improvement of 45%.

The description of the neutral Principle 1 prompt:

“If you prefer more concise answers, no need to be polite with LLM so there is no need to add phrases like “please”, “if you don’t mind”, “thank you”, “I would like to”, etc., and get straight to the point.”

The description of the Principle 6 prompt:

“Add “I’m going to tip $xxx for a better solution!””

Conclusions And Future Directions

The researchers concluded that the 26 principles were largely successful in helping the LLM to focus on the important parts of the input context, which in turn improved the quality of the responses. They referred to the effect as reformulating contexts:

“Our empirical results demonstrate that this strategy can effectively reformulate contexts that might otherwise compromise the quality of the output, thereby enhancing the relevance, brevity, and objectivity of the responses.”

A future area of research noted in the study is to see whether the foundation models could be improved by fine-tuning them with the principled prompts to improve the generated responses.

Read the research paper:

Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4
