
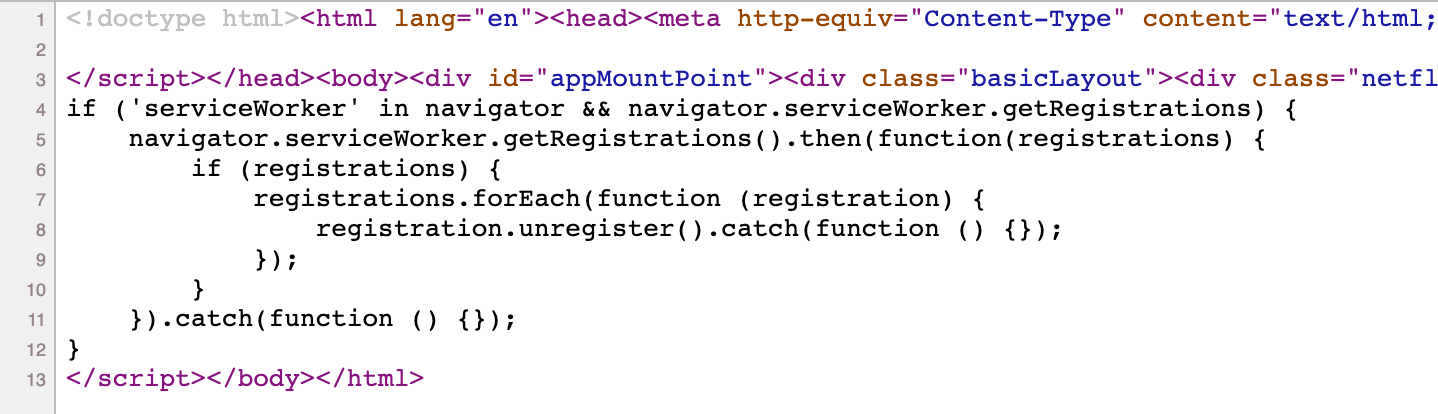

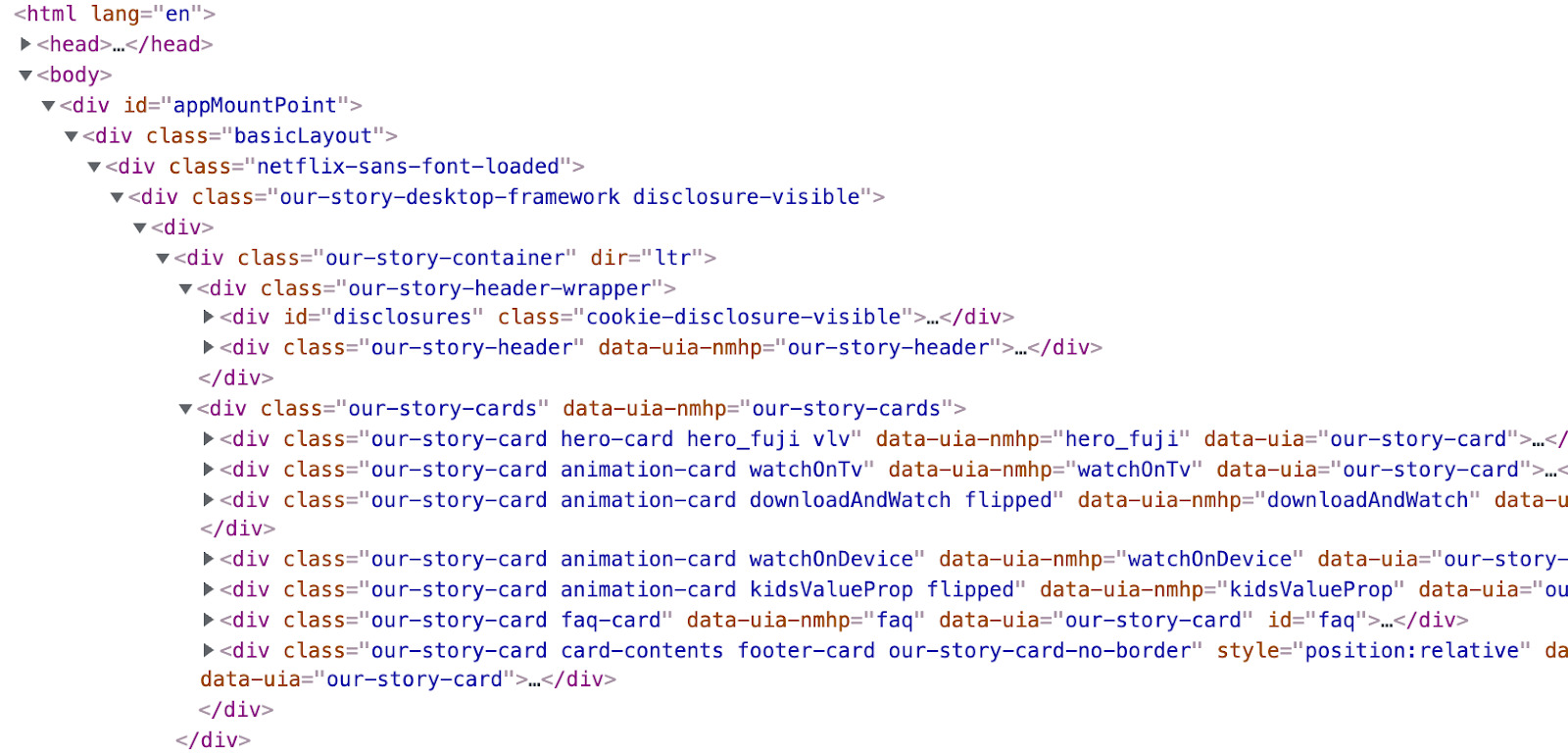

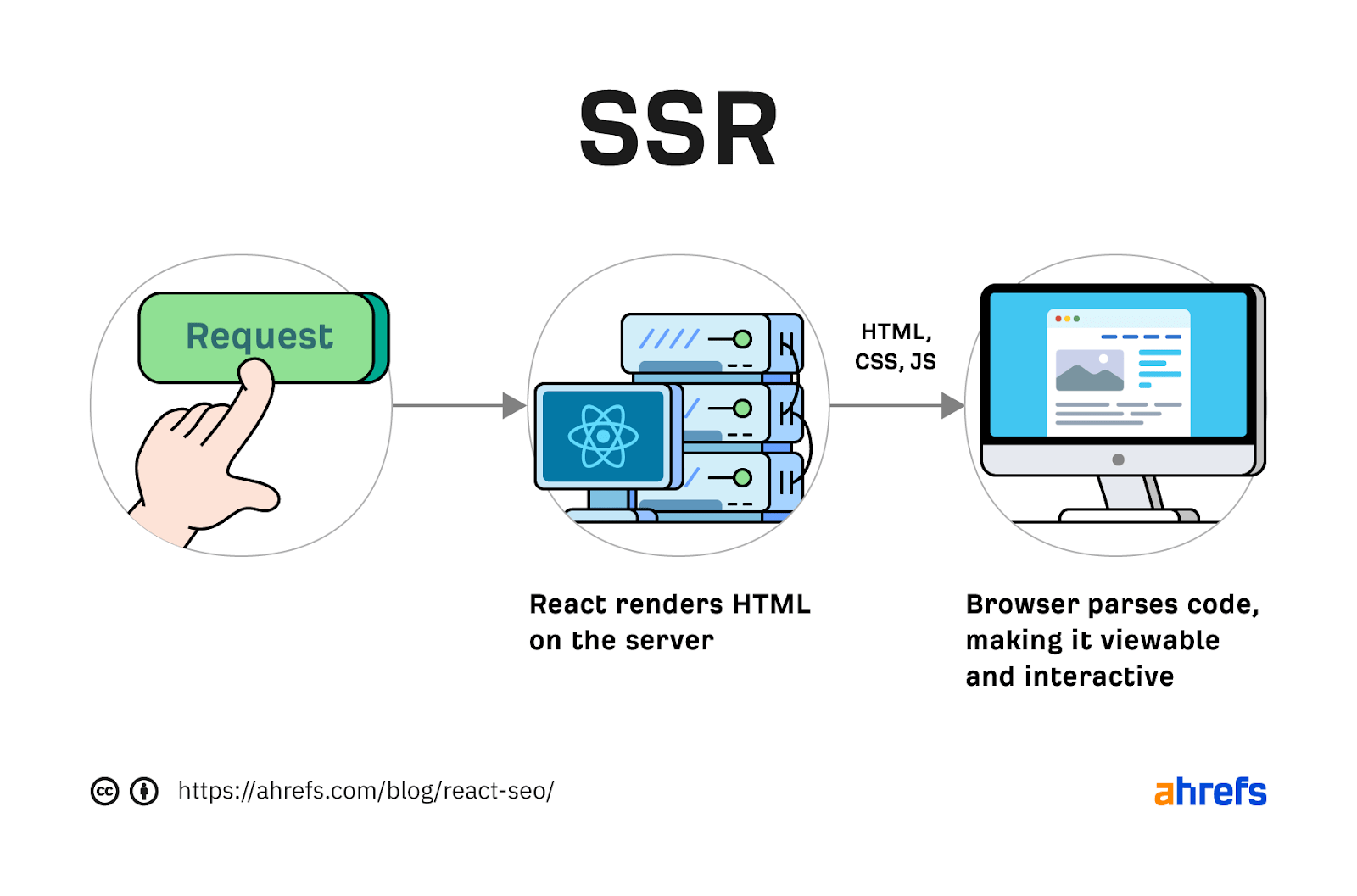

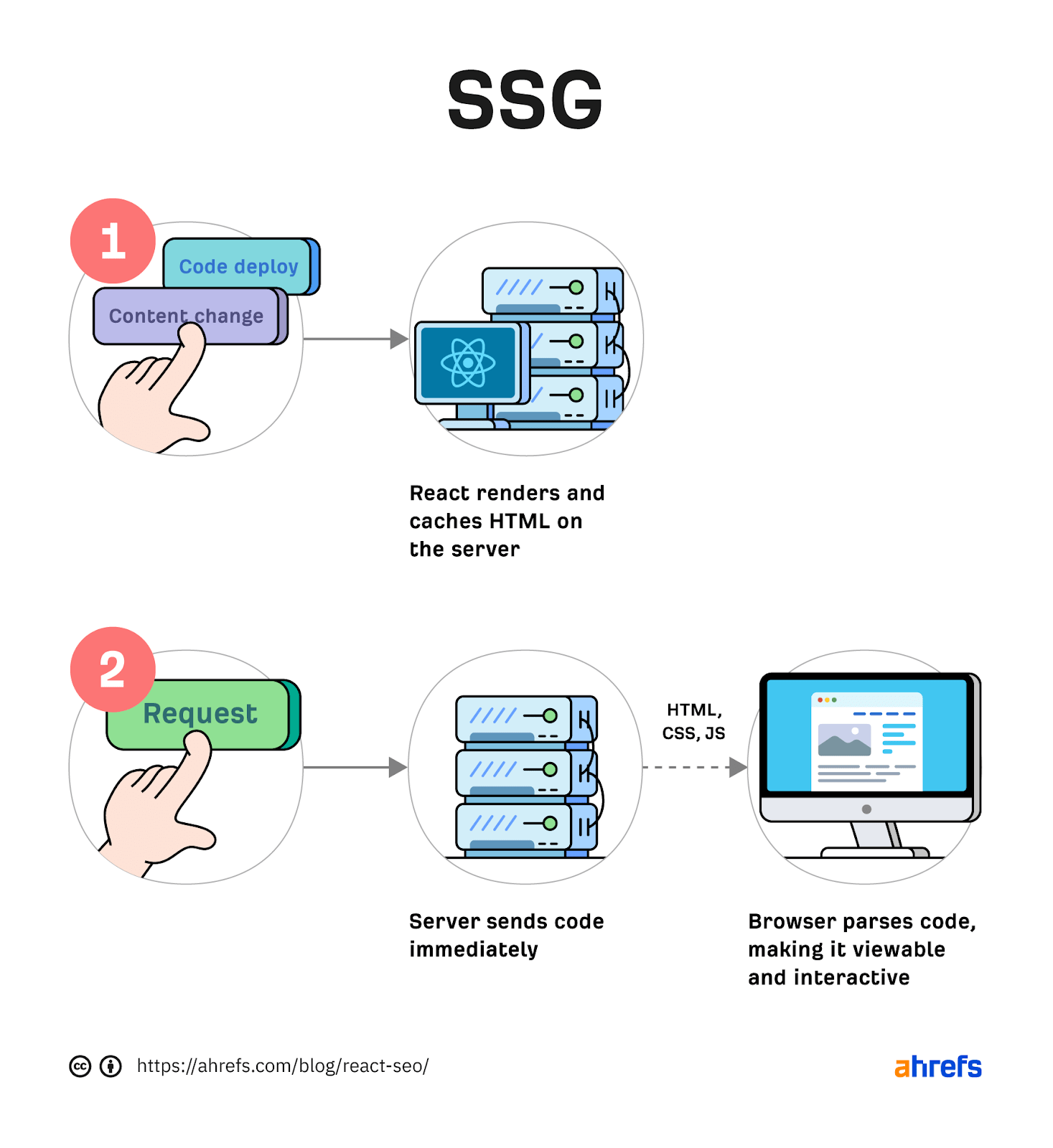

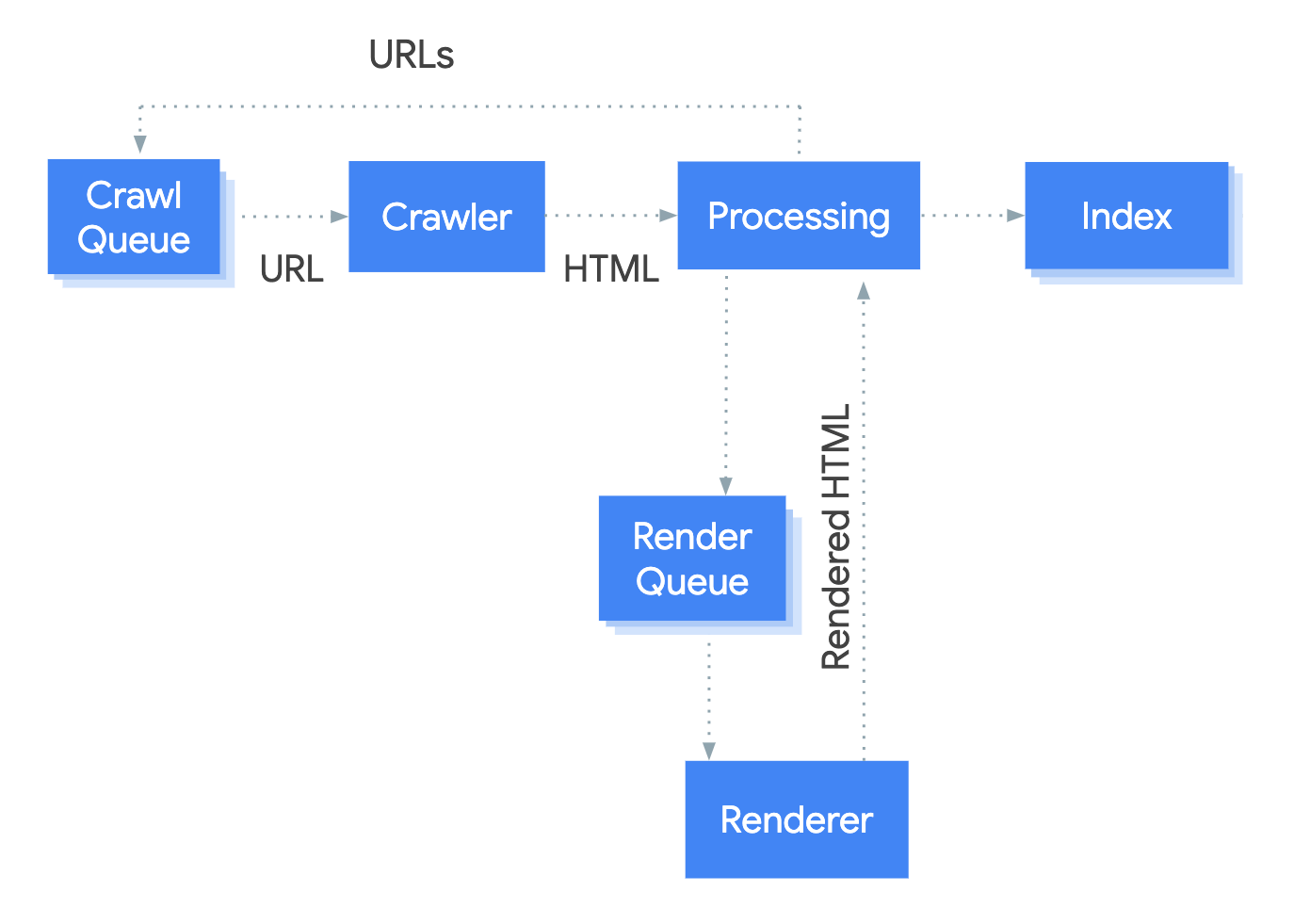

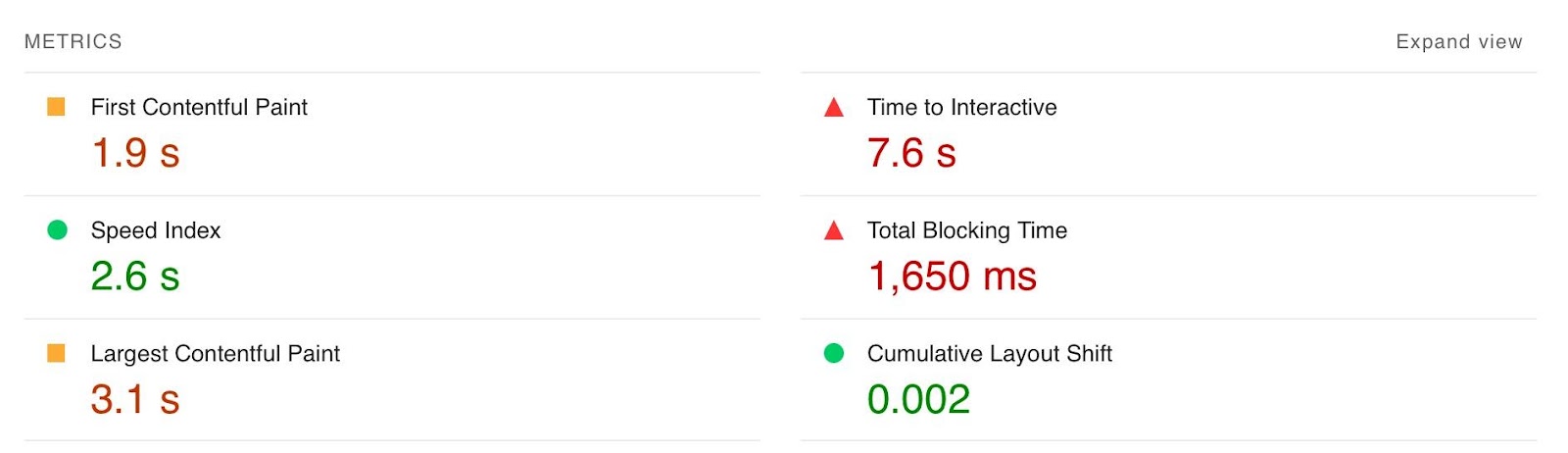

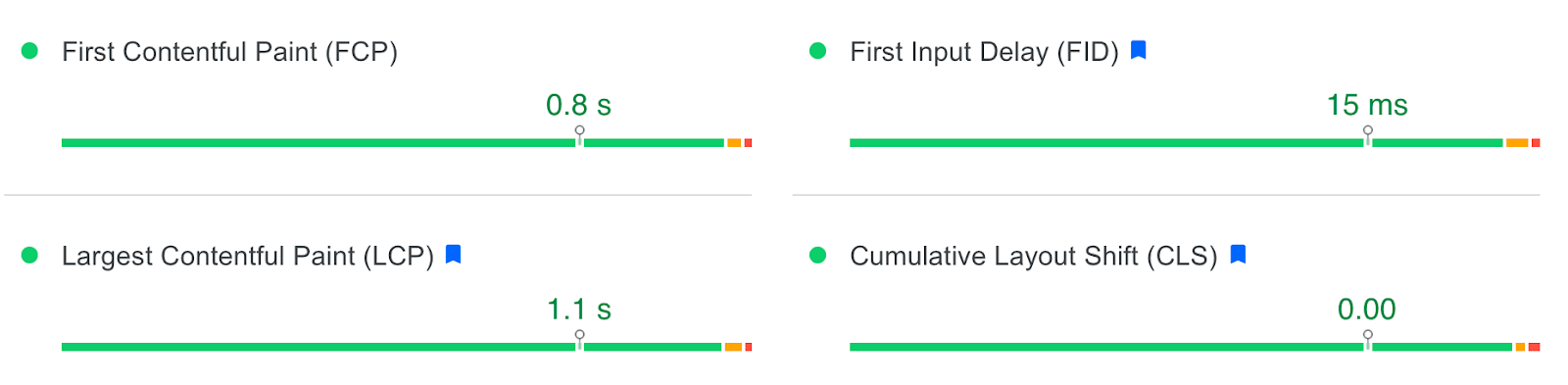

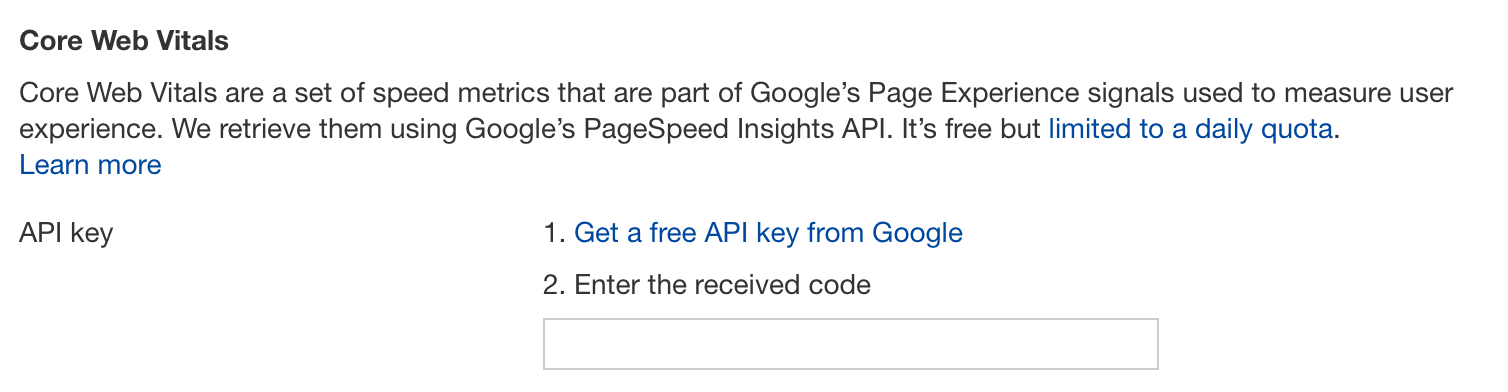

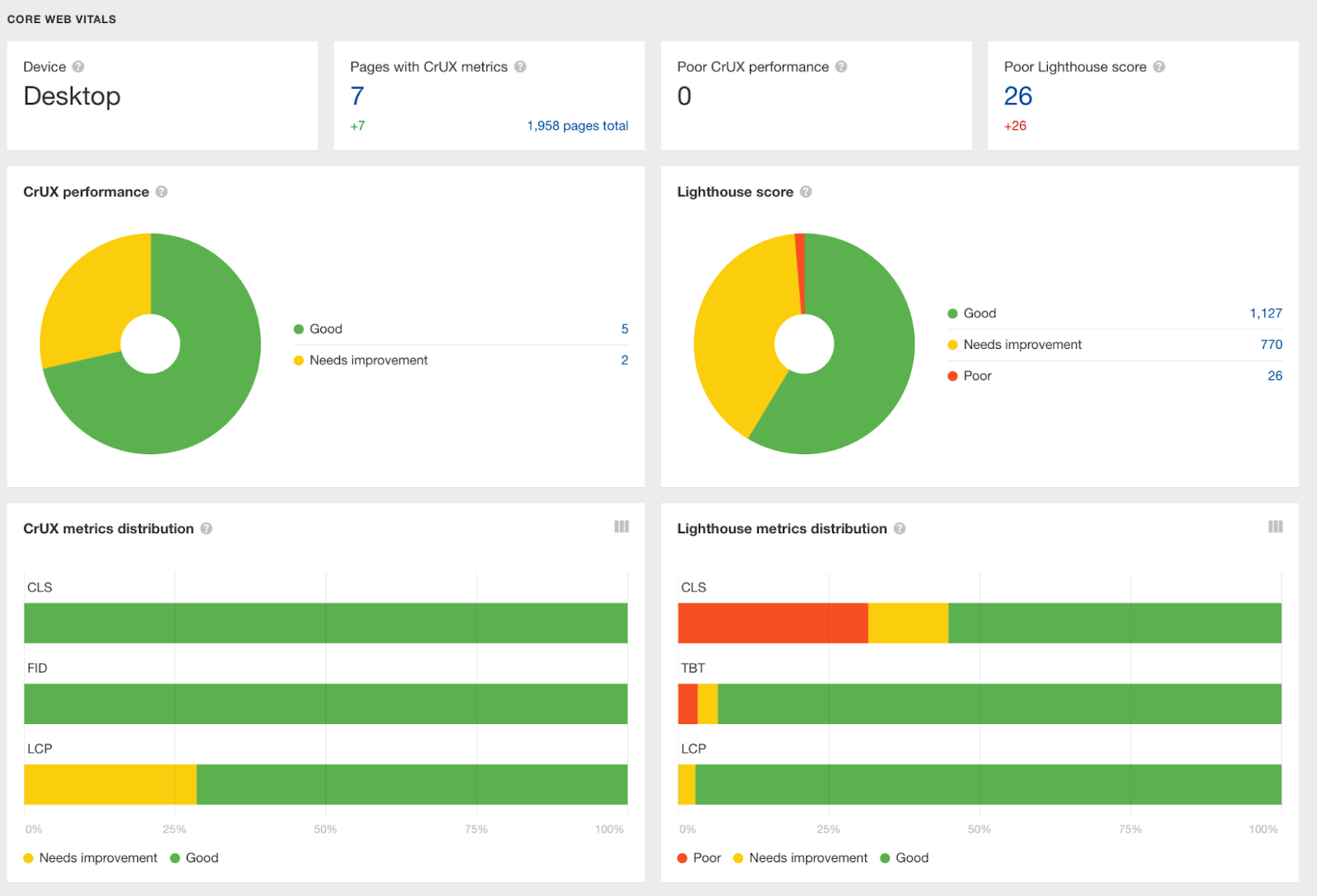

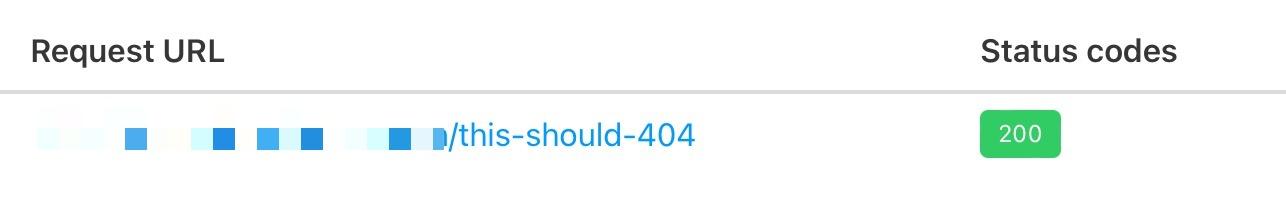

The growing prevalence of React in modern web development cannot be ignored. React and other similar libraries (like Vue.js) are becoming the de facto choice for larger businesses that require complex development where a more simplistic approach (like using a WordPress theme) won’t satisfy the requirements.

Despite that, SEOs did not initially embrace libraries like React because search engines struggled to render JavaScript effectively, so content available within the HTML source was the preference. However, developments in how both Google and React can render JavaScript have simplified these complexities, and SEO is no longer the blocker for using React. Still, some complexities remain, which I’ll run through in this guide. On that note, here’s what we’ll cover:

React is an open-source JavaScript library developed by Meta (formerly Facebook) for building web and mobile applications. The main features of React are that it is declarative, is component-based, and allows easier manipulation of the DOM. The simplest way to understand the components is by thinking of them as plugins, like for WordPress. They allow developers to quickly build a design and add functionality to a page using component libraries like MUI or Tailwind UI. If you want the full lowdown on why developers love React, start here:

React implements an App Shell Model, meaning the vast majority of content, if not all, will be Client-side Rendered (CSR) by default. CSR means the HTML primarily contains the React JS library rather than the server sending the entire page’s contents within the initial HTTP response from the server (the HTML source). It will also include miscellaneous JavaScript containing JSON data or links to JS files that contain React components. You can quickly tell a site is client-side rendered by checking the HTML source.
To do that, right-click and select “View Page Source” (or CTRL + U/CMD + U).

A screenshot of the netflix.com homepage source HTML.

If you don’t see many lines of HTML there, the application is likely client-side rendering. However, when you inspect the element by right-clicking and selecting “Inspect element” (or F12/CMD + ⌥ + I), you’ll see the DOM generated by the browser (where the browser has rendered JavaScript). The result is you’ll then see the site has a lot of HTML:

Note the `appMountPoint` ID on the first `<div>`. You’ll commonly see an element like that on a single-page application (SPA), so a library like React knows where it should inject HTML. Technology detection tools, e.g., Wappalyzer, are also great at detecting the library.

Editor’s note: Ahrefs’ Site Audit saves both the Raw HTML sent from the server and the Rendered HTML in the browser, making it easier to spot whether a site has client-side rendered content. Even better, you can search both the Raw and Rendered HTML to know what content is specifically being rendered client-side. In the below example, you can see this site is client-side rendering key page content, such as the `<h1>` tag.

Websites created using React differ from the more traditional approach of leaving the heavy lifting of rendering content to the server using languages like PHP, which is called Server-side Rendering (SSR). The above shows the server rendering JavaScript into HTML with React (more on that shortly). The concept is the same for sites built with PHP (like WordPress). It’s just PHP being turned into HTML rather than JavaScript.

Before SSR, developers kept it even simpler. They would create static HTML documents that didn’t change, host them on a server, and then send them immediately. The server didn’t need to render anything, and the browser often had very little to render.
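To make the “View Page Source” check above a little more concrete, here is a minimal sketch of the same idea in JavaScript. It strips scripts, styles, and tags from the raw HTML and flags the page when almost no visible text is left. The function name, the 200-character threshold, and the sample markup (including `/bundle.js`) are assumptions for illustration; only the `appMountPoint` ID comes from the example above. Treat it as a rough heuristic, not a reliable detector.

```javascript
// Rough heuristic: does the raw HTML (what "View Page Source" shows)
// look like a nearly empty app shell? The threshold is an arbitrary assumption.
function looksClientSideRendered(rawHtml, maxVisibleChars = 200) {
  const bodyMatch = rawHtml.match(/<body[^>]*>([\s\S]*)<\/body>/i);
  const body = bodyMatch ? bodyMatch[1] : rawHtml;

  // Drop non-visible blocks first, then every remaining tag.
  const visibleText = body
    .replace(/<script[\s\S]*?<\/script>/gi, " ")
    .replace(/<style[\s\S]*?<\/style>/gi, " ")
    .replace(/<noscript[\s\S]*?<\/noscript>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();

  return visibleText.length < maxVisibleChars;
}

// Hypothetical raw HTML for a client-side rendered app shell.
const appShell =
  '<html><body><div id="appMountPoint"></div>' +
  '<script src="/bundle.js"></script></body></html>';

// Hypothetical raw HTML for a server-rendered page with real content.
const serverRendered =
  "<html><body><h1>Pricing</h1><p>" +
  "Plans start at ten dollars per month. ".repeat(10) +
  "</p></body></html>";

console.log(looksClientSideRendered(appShell));
console.log(looksClientSideRendered(serverRendered));
```

In practice you would feed this the response body of a plain HTTP request (no JavaScript execution) and compare the result with what the browser’s rendered DOM shows.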
SPAs (including those using React) are now coming full circle back to that static approach. They’re now pre-rendering JavaScript into HTML before a browser requests the URL. This approach is called Static Site Generation (SSG), also known as Static Rendering.

In practice, SSR and SSG are similar. The key difference is when rendering happens: with SSR, it happens when a browser requests a URL; with SSG, a framework pre-renders content at build time (when developers deploy new code or a web admin changes the site’s content). SSR can be more dynamic but slower due to the additional latency while the server renders the content before sending it to the user’s browser. SSG is faster, as the content has already been rendered, meaning it can be served to the user immediately (meaning a quicker TTFB).

To understand why React’s default client-side rendering approach causes SEO issues, you first need to know how Google crawls, processes, and indexes pages. We can summarize the basics of how this works in the below steps:

Historically, issues with React and other JS libraries have been due to Google not handling the rendering step well. Some examples include:

Thankfully, Google has now resolved most of these issues. Googlebot is now evergreen, meaning it always supports the latest features of Chromium. In addition, the median rendering delay is now five seconds, as announced by Martin Splitt at the Chrome Developer Summit in November 2019:

“Last year Tom Greenaway and I were on this stage and telling you, ‘Well, you know, it can take up to a week, we are very sorry for this.’ Forget this, okay? Because the new numbers look a lot better.
“So we actually went over the numbers and found that, it turns out that at median, the time we spent between crawling and actually having rendered these results is – on median – it’s 5 seconds!”

This all sounds positive. But is client-side rendering and leaving Googlebot to render content the right strategy? The answer is most likely still no. In the past five years, Google has improved its handling of JavaScript content, but entirely client-side rendered sites introduce other issues that you need to consider.

It’s important to note that you can overcome all issues with React and SEO. React JS is a development tool. It is no different from any other tool within a development stack, whether that’s a WordPress plugin or the CDN you choose. How you configure it will decide whether it detracts from or enhances SEO. Ultimately, React is good for SEO, as it improves user experience. You just need to make sure you consider the following common issues.

The most significant issue you’ll need to tackle with React is how it renders content. As mentioned, Google is great at rendering JavaScript nowadays. But unfortunately, that isn’t the case with other search engines. Bing has some support for JavaScript rendering, although its efficiency is unknown. Other search engines like Baidu, Yandex, and others offer limited support.

Sidenote. This limitation doesn’t only impact search engines. Apart from site auditors, SEO tools that crawl the web and provide critical data on elements like a site’s backlinks do not render JavaScript. This can have a significant impact on the quality of data they provide. The only exception is Ahrefs, which has been rendering JavaScript across the web since 2017 and currently renders over 200 million pages per day.
Introducing this unknown builds a good case for opting for a server-side rendered solution to ensure that all crawlers can see the site’s content. In addition, rendering content on the server has another crucial benefit: load times.

Rendering JavaScript is intensive on the CPU; this makes large libraries like React slower to load and become interactive for users. You’ll generally see performance metrics such as Time to Interactive (TTI) being much higher for SPAs, especially on mobile, the primary way users consume web content.

An example React application that utilizes client-side rendering.

However, after the initial render by the browser, subsequent load times tend to be quicker due to the following:

Depending on the number of pages viewed per visit, this can result in field data being positive overall. However, if your site has a low number of pages viewed per visit, you’ll struggle to get positive field data for all Core Web Vitals.

The best option is to opt for SSR or SSG mainly due to:

Implementing SSR within React is possible via `ReactDOMServer`. However, I recommend using a React framework called Next.js and using its SSG and SSR options. You can also implement CSR with Next.js, but the framework nudges users toward SSR/SSG due to speed.

Next.js supports what it calls “Automatic Static Optimization.” In practice, this means you can have some pages on a site that use SSR (such as an account page) and other pages using SSG (such as your blog). The result: SSG and fast TTFB for non-dynamic pages, and SSR as a backup rendering strategy for dynamic content.

Sidenote. You may have heard about React Hydration with `ReactDOM.hydrate()`. This is where content is delivered via SSG/SSR and then turns into a client-side rendered application during the initial render.
This may be the obvious choice for dynamic applications in the future rather than SSR. However, hydration currently works by loading the entire React library and then attaching event handlers to HTML that will change. React then keeps HTML between the browser and server in sync. Currently, I can’t recommend this approach because it still has negative implications for performance metrics like TTI for the initial render. Partial Hydration may resolve this in the future by only hydrating critical parts of the page (like ones within the browser viewport) rather than the entire page; until then, SSR/SSG is the better option.

Since we’re talking about speed, I’d be doing you a disservice by not mentioning other ways Next.js optimizes the critical rendering path for React applications with features like:

Speed and positive Core Web Vitals are a ranking factor, albeit a minor one. Next.js features make it easier to create great web experiences that will give you a competitive advantage.

Tip: Many developers deploy their Next.js web applications using Vercel (the creators of Next.js), which has a global edge network of servers; this results in fast load times. Vercel provides data on the Core Web Vitals of all sites deployed on the platform, but you can also get detailed web vital data for each URL using Ahrefs’ Site Audit. Simply add an API key within the crawl settings of your projects. After you’ve run your audit, have a look at the performance area. There, Ahrefs’ Site Audit will show you charts displaying data from the Chrome User Experience Report (CrUX) and Lighthouse.

A common issue with most SPAs is they don’t correctly report status codes. This is because the server isn’t loading the page; the browser is.
You’ll commonly see issues with:

You can see below I ran a test on a React site with httpstatus.io. This page should obviously be a 404 but, instead, returns a 200 status code. This is called a soft 404.

The risk here is that Google may decide to index that page (depending on its content). Google could then serve this to users, or it’ll be used when evaluating a site. In addition, reporting 404s helps SEOs audit a site. If you accidentally internally link to a 404 page and it’s returning a 200 status code, quickly spotting the problem with an auditing tool may become much more challenging.

There are a couple of ways to solve this issue. If you’re client-side rendering:

If you’re using SSR, Next.js makes this simple with response helpers, which let you set whatever status code you want, including 3xx redirects or a 4xx status code. The approach I outlined using React Router can also be put into practice while using Next.js. However, if you’re using Next.js, you’re likely also implementing SSR/SSG.

This issue isn’t as common for React, but it’s essential to avoid hash URLs like the following:

Generally, Google isn’t going to see anything after the hash. All of these pages will be seen as https://reactspa.com/.

SPAs with client-side routing should implement the History API to change pages. You can do this relatively easily with both React Router and Next.js.

A common mistake with SPAs is using a `<div>` or a `<button>` to change the URL. This isn’t an issue with React itself, but with how the library is used. Doing this presents an issue with search engines. As mentioned earlier, when Google processes a URL, it looks for additional URLs to crawl within `<a href>` elements. If the `<a href>` element is missing, Google won’t crawl the URLs or pass PageRank.
The solution is to include `<a href>` links to URLs that you want Google to discover. Checking whether you’re linking to a URL correctly is easy. Inspect the element that internally links and check the HTML to ensure you’ve included `<a href>` links. As in the above example, you may have an issue if they aren’t there.

However, it’s essential to understand that missing `<a href>` links aren’t always an issue. One benefit of CSR is that when content is helpful to users but not search engines, you can change the content client-side and not include the `<a href>` link. In the above example, the site uses faceted navigation that links to potentially millions of combinations of filters that aren’t useful for a search engine to crawl or index. Loading these filters client-side makes sense here, as the site will conserve crawl budget by not adding `<a href>` links for Google to crawl.

Next.js makes this easy with its link component, which you can configure to allow client-side navigation. If you’ve decided to implement a fully CSR application, you can change URLs with React Router using `onClick` and the History API.

It’s common for sites developed with React to inject content into the DOM when a user clicks or hovers over an element, simply because the library makes that easy to do. This isn’t inherently bad, but content added to the DOM this way will not be seen by search engines. If the content injected includes important textual content or internal links, this may negatively impact:

Here’s an example on a React JS site I recently audited. Here, I’ll show a well-known e‑commerce brand with important internal links within its faceted navigation. However, a modal showing the navigation on mobile was injected into the DOM when you clicked a “Filter” button.
Watch the second `<!---->` within the HTML below to see this in practice:

Spotting these issues isn’t easy. And as far as I know, no tool will directly tell you about them. Instead, you should check for common elements such as:

You’ll then need to inspect the element on them and watch what happens with the HTML as you open/close them by clicking or hovering (as I have done in the above GIF). Suppose you notice JavaScript is adding HTML to the page. In that case, you’ll need to work with the developers so that, rather than injecting the content into the DOM, it’s included within the HTML by default and is hidden and shown via CSS using properties like `visibility: hidden;` or `display: none;`.

While there are additional SEO considerations with React applications, that doesn’t mean other fundamentals don’t apply. You’ll still need to make sure your React applications follow best practices for:

Unfortunately, working with React applications does add to the already long list of issues a technical SEO needs to check. But frameworks like Next.js make the work of an SEO much more straightforward than it was historically. Hopefully, this guide has helped you better understand the additional considerations you need to make as an SEO when working with React applications.

Have any questions on working with React? Tweet me.

1. Pick the right rendering strategy

Load times

Solution
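As a hedged illustration of mixing SSG and SSR in Next.js, the sketch below shows the two data-fetching functions a Pages Router project would use. `getStaticProps` and `getServerSideProps` are real Next.js function names, but everything else here (the file paths, `loadPost`, `loadAccount`, and the returned data) is hypothetical. The functions are written as plain functions so the snippet runs on its own in Node; in a real page file each one would be exported, and a dynamic SSG route would also need `getStaticPaths`.

```javascript
// Hypothetical data loaders standing in for a CMS or database call.
async function loadPost(slug) {
  return { slug, title: `Post about ${slug}` };
}
async function loadAccount(sessionCookie) {
  return { signedIn: Boolean(sessionCookie) };
}

// SSG: in a file such as pages/blog/[slug].js you would export this.
// Next.js calls it at build time and stores the rendered HTML.
async function getStaticProps({ params }) {
  const post = await loadPost(params.slug);
  return { props: { post } };
}

// SSR: in a file such as pages/account.js you would export this.
// Next.js calls it on every request, so the HTML can depend on cookies.
async function getServerSideProps({ req }) {
  const account = await loadAccount(req.headers.cookie);
  return { props: { account } };
}

// Simulate what the framework would pass in for each case.
getStaticProps({ params: { slug: "react-seo" } }).then((result) =>
  console.log("build-time props:", result.props)
);
getServerSideProps({ req: { headers: { cookie: "session=abc" } } }).then(
  (result) => console.log("request-time props:", result.props)
);
```

The design point is that the choice is made per page: content that is the same for every visitor (a blog post) can be rendered once at build time, while content tied to a request (an account page) is rendered when the request arrives.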

2. Use status codes correctly
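One way to return real status codes from a server-rendered Next.js page is sketched below. Note that this uses the `notFound` and `redirect` return values of `getServerSideProps` (Pages Router) rather than the response helpers mentioned in the article; it is a related mechanism, swapped in here because it fits in a self-contained snippet. The product catalog, the slugs, and the destination path are all hypothetical.

```javascript
// Hypothetical data: one live product and one URL that has moved.
const products = new Map([["blue-widget", { name: "Blue Widget" }]]);
const movedSlugs = new Map([["old-widget", "/products/blue-widget"]]);

// In a page file such as pages/products/[slug].js you would export this.
async function getServerSideProps({ params }) {
  const destination = movedSlugs.get(params.slug);
  if (destination) {
    // Next.js answers with a 308 permanent redirect.
    return { redirect: { destination, permanent: true } };
  }

  const product = products.get(params.slug);
  if (!product) {
    // Next.js renders the 404 page with a real 404 status code,
    // which avoids the soft 404 described in the article.
    return { notFound: true };
  }

  return { props: { product } };
}

// Simulate three requests.
for (const slug of ["blue-widget", "old-widget", "does-not-exist"]) {
  getServerSideProps({ params: { slug } }).then((result) =>
    console.log(slug, JSON.stringify(result))
  );
}
```

After deploying something like this, it is worth re-checking the URL with a status code checker to confirm the response code is no longer 200 for missing pages.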

3. Avoid hashed URLs

Solution
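The sketch below illustrates the difference between a hash URL and a path URL, and how the History API changes the address without a hash. The `https://reactspa.com/` domain comes from the article; the `/shop` route and both function names are assumptions for illustration. `hashUrlToPathUrl` is a plain helper you might use when planning redirects from old hash URLs, and `navigate` is guarded so the snippet also runs outside a browser.

```javascript
// Convert "https://reactspa.com/#/shop" into "https://reactspa.com/shop".
// URLs without a "#/" style hash are returned unchanged (normalized).
function hashUrlToPathUrl(href) {
  const url = new URL(href);
  if (!url.hash.startsWith("#/")) return url.href;
  // Resolve the part after "#" as a path on the same origin.
  return new URL(url.hash.slice(1), url.origin).href;
}

// Client-side navigation with the History API instead of location.hash.
// Returns false when there is no browser window (for example in Node).
function navigate(path) {
  if (typeof window === "undefined") return false;
  window.history.pushState({}, "", path);
  return true;
}

console.log(hashUrlToPathUrl("https://reactspa.com/#/shop"));
console.log(hashUrlToPathUrl("https://reactspa.com/pricing"));
navigate("/shop");
```

Routers such as React Router and the Next.js router wrap the History API for you, so in most projects you would not call `pushState` directly; the point is only that each page ends up with its own crawlable path.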

4. Use `<a href>` links where relevant

Solution
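To show why a `<div>` or `<button>` with a click handler is invisible as a link, here is a small sketch that pulls out only the URLs found in `<a href>` elements, which is roughly the signal the article says Google looks for. The function name and the sample markup are hypothetical, and a regular expression is only a rough stand-in for a real HTML parser.

```javascript
// Collect the href values of <a> elements in an HTML string.
// Regex-based and intentionally simple; assumes quoted href attributes.
function extractCrawlableLinks(html) {
  const anchorHref = /<a\s[^>]*?href\s*=\s*["']([^"']+)["']/gi;
  return [...html.matchAll(anchorHref)].map((match) => match[1]);
}

// Hypothetical navigation markup: only the first item is a real link.
const navHtml =
  '<a href="/shoes">Shoes</a>' +
  "<div onclick=\"goTo('/bags')\">Bags</div>" +
  '<button data-url="/hats">Hats</button>';

console.log(extractCrawlableLinks(navHtml));
```

Here `/bags` and `/hats` work for a user who clicks, but neither appears in the extracted list, so a crawler following `<a href>` elements would not discover them.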

5. Avoid lazy loading essential HTML

Solution
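A minimal sketch of the “include it in the HTML and hide it with CSS” approach is shown below. The function renders the filter navigation into the markup whether or not it is open, and only toggles `display: none;`. The function name, the `filters` ID, and the sample links are hypothetical, and the values are assumed to be trusted (no HTML escaping is done).

```javascript
// Render the filter menu into the HTML in both states.
// When closed, it is hidden with CSS rather than left out of the markup.
function renderFilterMenu(links, isOpen) {
  const items = links
    .map(({ href, label }) => `<li><a href="${href}">${label}</a></li>`)
    .join("");
  const style = isOpen ? "" : ' style="display: none;"';
  return `<nav id="filters"${style}><ul>${items}</ul></nav>`;
}

// Hypothetical faceted navigation links.
const filterLinks = [
  { href: "/shoes/red", label: "Red shoes" },
  { href: "/shoes/blue", label: "Blue shoes" },
];

console.log(renderFilterMenu(filterLinks, false));
console.log(renderFilterMenu(filterLinks, true));
```

In a React component the same idea usually means always rendering the element and switching a class or style on click, instead of conditionally rendering it only after the “Filter” button is pressed.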

6. Don’t forget the fundamentals

Final thoughts
