
OpenAI published a response to The New York Times' lawsuit, alleging that The NYTimes used manipulative prompting techniques to induce ChatGPT to regurgitate lengthy excerpts and stating that the lawsuit is based on misuse of ChatGPT to "cherry pick" examples for the lawsuit.

The New York Times Lawsuit Against OpenAI

The New York Times filed a lawsuit against OpenAI (and Microsoft) for copyright infringement, alleging, among other complaints, that ChatGPT "recites Times content verbatim."

The lawsuit introduced evidence showing how GPT-4 could output large amounts of New York Times content without attribution, as proof that GPT-4 infringes on The New York Times' content.

The accusation that GPT-4 is outputting exact copies of New York Times content is important because it counters OpenAI's insistence that its use of data is transformative, a legal framework related to the doctrine of fair use.

The United States Copyright Office defines the fair use of copyrighted content that is transformative:

"Fair use is a legal doctrine that promotes freedom of expression by permitting the unlicensed use of copyright-protected works in certain circumstances.

…'transformative' uses are more likely to be considered fair. Transformative uses are those that add something new, with a further purpose or different character, and do not substitute for the original use of the work."

That's why it's important for The New York Times to assert that OpenAI's use of its content is not fair use.

The New York Times lawsuit against OpenAI states:

"Defendants insist that their conduct is protected as "fair use" because their unlicensed use of copyrighted content to train GenAI models serves a new "transformative" purpose. But there is nothing "transformative" about using The Times's content …Because the outputs of Defendants' GenAI models compete with and closely mimic the inputs used to train them, copying Times works for that purpose is not fair use."

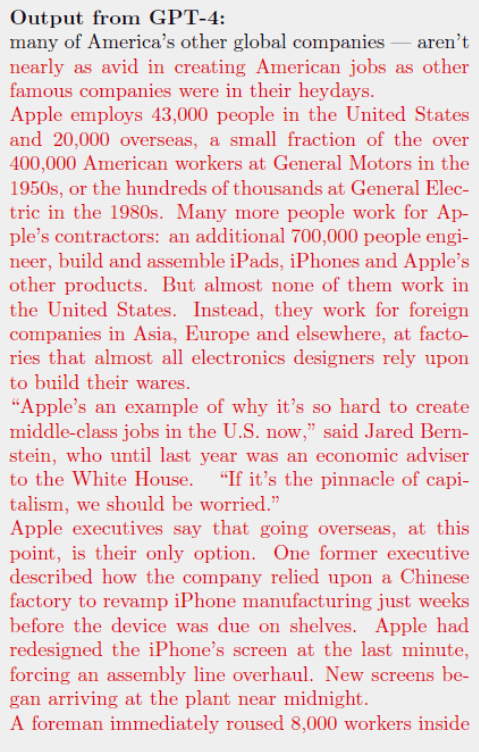

The following screenshot shows evidence of how GPT-4 outputs exact copies of the Times' content. The content in red is original content created by The New York Times that was output by GPT-4.

OpenAI Response Undermines NYTimes Lawsuit Claims

OpenAI offered a strong rebuttal of the claims made in the New York Times lawsuit, saying that the Times' decision to go to court surprised OpenAI because it had assumed the negotiations were progressing toward a resolution.

Most importantly, OpenAI rebutted The New York Times' claim that GPT-4 outputs verbatim content, explaining that GPT-4 is designed not to output verbatim content and that The New York Times used prompting techniques specifically designed to break GPT-4's guardrails in order to produce the disputed output. This undermines The New York Times' implication that verbatim content is a common GPT-4 output.

This kind of prompting, designed to break ChatGPT so that it generates undesired output, is known as Adversarial Prompting.

Adversarial Prompting Attacks

Generative AI is sensitive to the types of prompts (requests) made of it. Despite the best efforts of engineers to block the misuse of generative AI, there are still new ways of using prompts to generate responses that get around the guardrails built into the technology to prevent undesired output.

Techniques for generating unintended output are called Adversarial Prompting, and that's what OpenAI is accusing The New York Times of doing in order to manufacture a basis for proving that GPT-4's use of copyrighted content is not transformative.

OpenAI's assertion that The New York Times misused GPT-4 is important because it undermines the lawsuit's insinuation that generating verbatim copyrighted content is typical behavior.

That kind of adversarial prompting also violates OpenAI's terms of use, which state:

What You Cannot Do

- Use our Services in a way that infringes, misappropriates or violates anyone's rights.

- Interfere with or disrupt our Services, including circumvent any rate limits or restrictions or bypass any protective measures or safety mitigations we put on our Services.

OpenAI Claims Lawsuit Based On Manipulated Prompts

OpenAI's rebuttal claims that The New York Times used manipulated prompts specifically designed to subvert GPT-4's guardrails in order to generate verbatim content.

OpenAI writes:

"It seems they intentionally manipulated prompts, often including lengthy excerpts of articles, in order to get our model to regurgitate.

Even when using such prompts, our models don't typically behave the way The New York Times insinuates, which suggests they either instructed the model to regurgitate or cherry-picked their examples from many attempts."

OpenAI also fired back at The New York Times lawsuit, saying that the methods The New York Times used to generate verbatim content were misuse and a violation of allowed user activity.

They write:

"Despite their claims, this misuse is not typical or allowed user activity."

OpenAI ended by stating that it continues to build resistance against the kinds of adversarial prompt attacks used by The New York Times.

They write:

"Regardless, we are continually making our systems more resistant to adversarial attacks to regurgitate training data, and have already made much progress in our recent models."

OpenAI backed up its claim of diligence in respecting copyright by citing its July 2023 response to reports that ChatGPT was generating verbatim responses.

We've learned that ChatGPT's "Browse" beta can occasionally display content in ways we don't want, e.g. if a user specifically asks for a URL's full text, it may inadvertently fulfill this request. We are disabling Browse while we fix this—want to do right by content owners.

— OpenAI (@OpenAI) July 4, 2023

The New York Times Versus OpenAI

There are always two sides to a story, and OpenAI just released its side, which shows that The New York Times' claims are based on adversarial attacks and a misuse of ChatGPT to elicit verbatim responses.

Read OpenAI's response:

OpenAI and journalism:

We support journalism, partner with news organizations, and believe The New York Times lawsuit is without merit.

Featured Image by Shutterstock/pizzastereo
