The new oil isn’t data or attention. It’s words. The differentiator to build next-gen AI models is access to content when normalizing for computing power, storage, and energy.

But the web is already getting too small to satiate the hunger for new models.

Some executives and researchers say the industry’s need for high-quality text data could outstrip supply within two years, potentially slowing AI’s development.

Even fine-tuning doesn’t seem to work as well as simply building more powerful models. A Microsoft research case study shows that effective prompts can outperform a fine-tuned model by 27%.

We were wondering if the future will consist of many small, fine-tuned models or a few big, all-encompassing ones. It seems to be the latter.

There is no AI strategy without a data strategy.

Hungry for more high-quality content to develop the next generation of large language models (LLMs), model developers start to pay for natural content and revive their efforts to label synthetic data.

For content creators of any kind, this new flow of money could pave the way to a new content monetization model that incentivizes quality and makes the web better.

Image Credit: Lyna ™

KYC: AI

If content is the new oil, social networks are oil rigs. Google invested $60 million a year in using Reddit content to train its models and surface Reddit answers at the top of search. Pennies, if you ask me.

YouTube CEO Neal Mohan recently sent a clear message to OpenAI and other model developers that training on YouTube is a no-go, defending the company’s massive oil reserves.

The New York Times, which is currently running a lawsuit against OpenAI, published an article stating that OpenAI developed Whisper to train models on YouTube transcripts, and Google uses content from all of its platforms, like Google Docs and Maps reviews, to train its AI models.

Generative AI data providers like Appen or Scale AI are recruiting (human) writers to create content for LLM training.

Make no mistake, writers aren’t getting rich writing for AI.

For $25 to $50 per hour, writers perform tasks like ranking AI responses, writing short stories, and fact-checking.

Applicants must have a Ph.D. or master’s degree or be currently attending college. Data providers are clearly looking for experts and “good” writers. But the early signs are promising: Writing for AI could be monetizable.

Image Credit: Kevin Indig

Model developers look for good content in every corner of the web, and some are happy to sell it.

Content platforms like Photobucket sell photos for 5 cents to 1 dollar a piece. Short-form videos can get $2 to $4; longer films cost $100 to $300 per hour of footage.

With billions of photos, the company struck oil in its backyard. Which CEO can withstand such a temptation, especially as content monetization is getting harder and harder?

From Free Content:

Publishers are getting squeezed from multiple sides:

- Few are prepared for the death of third-party cookies.

- Social networks send less traffic (Meta) or deteriorate in quality (X).

- Most young people get news from TikTok.

- SGE looms on the horizon.

Ironically, labeling AI content better might help LLM development because it’s easier to separate natural from synthetic content.

In that sense, it’s in the interest of LLM developers to label AI content so they can exclude it from training or use it the right way.

Labeling

Drilling for words to train LLMs is just one side of developing next-gen AI models. The other one is labeling. Model developers need labeling to avoid model collapse, and society needs it as a shield against fake news.

A new wave of AI labeling is rising despite OpenAI dropping watermarking due to low accuracy (26%). Instead of labeling content themselves, which seems futile, big tech (Google, YouTube, Meta, and TikTok) pushes users to label AI content with a carrot/stick approach.

Google uses a two-pronged approach to fight AI spam in search: prominently showing forums like Reddit, where content is most likely created by humans, and penalties.

From AIfficiency:

Google is surfacing more content from forums in the SERPs to counter-balance AI content. Verification is the ultimate AI watermarking. Even though Reddit can’t prevent humans from using AI to create posts or comments, chances are lower because of two things Google search doesn’t have: Moderation and Karma.

Yes, Content Goblins have already taken aim at Reddit, but most of the 73 million daily active users provide useful answers. Content moderators punish spam with bans or even kicks. But the most powerful driver of quality on Reddit is Karma, “a user’s reputation score that reflects their community contributions.” Through simple up or downvotes, users can gain authority and trustworthiness, two integral ingredients in Google’s quality systems.

Google recently clarified that it expects merchants not to remove AI metadata from images using the IPTC metadata protocol.

When an image has a tag like compositeSynthetic, Google might label it as “AI-generated” anywhere, not just in shopping. The penalty for removing AI metadata is unclear, but I imagine it like a link penalty.
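To make the tag concrete, here is a minimal Python sketch of how one might check an image file for an IPTC Digital Source Type value such as compositeSynthetic. It is an illustration under stated assumptions, not how Google or Meta detect labels: it assumes the value is stored as a plain-text URI from the IPTC NewsCodes vocabulary inside the file's embedded XMP metadata, and it simply scans the raw bytes instead of parsing XMP properly. The function names and the set of terms treated as "AI involved" are hypothetical choices for this example.

```python
import re
from typing import Set

# Base URI of the IPTC Digital Source Type vocabulary (NewsCodes).
# Assumption: the metadata stores the value as this plain-text URI.
IPTC_DST_BASE = b"http://cv.iptc.org/newscodes/digitalsourcetype/"

# Terms this sketch treats as "AI involved". The selection is an
# assumption made for illustration, not an official list.
AI_TERMS = {
    "trainedAlgorithmicMedia",
    "compositeSynthetic",
    "compositeWithTrainedAlgorithmicMedia",
}


def find_digital_source_types(data: bytes) -> Set[str]:
    """Return every Digital Source Type term found in the raw bytes."""
    pattern = re.compile(re.escape(IPTC_DST_BASE) + rb"([A-Za-z]+)")
    return {m.group(1).decode("ascii") for m in pattern.finditer(data)}


def looks_ai_labeled(data: bytes) -> bool:
    """True if any term found in the bytes is in AI_TERMS."""
    return bool(find_digital_source_types(data) & AI_TERMS)


if __name__ == "__main__":
    # Hypothetical XMP fragment standing in for a real image file.
    sample = (
        b"<Iptc4xmpExt:DigitalSourceType>"
        b"http://cv.iptc.org/newscodes/digitalsourcetype/compositeSynthetic"
        b"</Iptc4xmpExt:DigitalSourceType>"
    )
    print(find_digital_source_types(sample))
    print(looks_ai_labeled(sample))
```

For a real file, you would pass the result of reading it in binary mode to looks_ai_labeled. A byte scan like this misses metadata that is compressed or stored in another container, and it says nothing about images whose metadata was stripped, which is exactly the gap the labeling policies try to close.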

IPTC is the same format Meta uses for Instagram, Facebook, and WhatsApp. Both companies give IPTC metatags to any content coming out of their own LLMs. The more AI tool makers follow the same guidelines to mark and tag AI content, the more reliably detection systems work.

When photorealistic images are created using our Meta AI feature, we do several things to make sure people know AI is involved, including putting visible markers that you can see on the images, and both invisible watermarks and metadata embedded within image files. Using both invisible watermarking and metadata in this way improves both the robustness of these invisible markers and helps other platforms identify them.

The downsides of AI content are small when the content looks like AI. But when AI content looks real, we need labels.

While advertisers try to get away from the AI look, content platforms like it because it’s easy to recognize.

For commercial artists and advertisers, generative AI has the power to massively speed up the creative process and deliver personalized ads to customers on a large scale – something of a holy grail in the marketing world. But there’s a catch: Many images AI models generate feature cartoonish smoothness, telltale flaws, or both.

Consumers are already turning against “the AI look,” so much so that an uncanny and cinematic Super Bowl ad for Christian charity He Gets Us was accused of being born from AI – even though a photographer created its images.

YouTube started enforcing new guidelines for video creators that say realistic-looking AI content needs to be labeled.

Challenges posed by generative AI have been an ongoing area of focus for YouTube, but we know AI introduces new risks that bad actors may try to exploit during an election. AI can be used to generate content that has the potential to mislead viewers – particularly if they’re unaware that the video has been altered or is synthetically created. To better address this concern and inform viewers when the content they’re watching is altered or synthetic, we’ll start to introduce the following updates:

- Creator Disclosure: Creators will be required to disclose when they’ve created altered or synthetic content that’s realistic, including using AI tools. This will include election content.

- Labeling: We’ll label realistic altered or synthetic election content that doesn’t violate our policies, to clearly indicate for viewers that some of the content was altered or synthetic. For elections, this label will be displayed in both the video player and the video description, and will surface regardless of the creator, political viewpoints, or language.

The biggest imminent fear is fake AI content that could influence the 2024 U.S. presidential election.

No platform wants to be the Facebook of 2016, which saw lasting reputational damage that impacted its stock price.

Chinese and Russian state actors have already experimented with fake AI news and tried to meddle with the Taiwanese and upcoming U.S. elections.

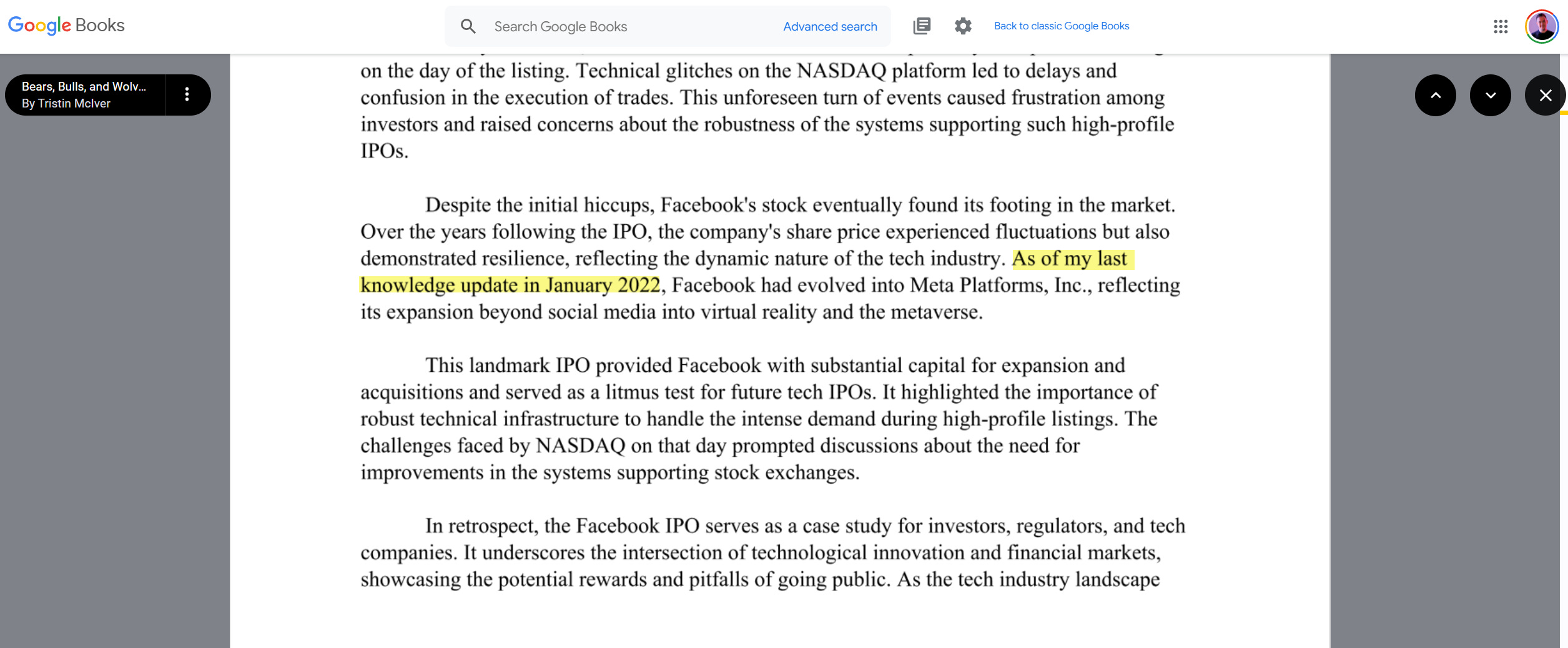

Now that OpenAI is close to releasing Sora, which creates hyperrealistic videos from prompts, it’s not a far leap to imagine how AI videos can cause problems without strict labeling. The situation is tough to get under control. Google Books already features books that were clearly written with or by ChatGPT.

Image Credit: Kevin Indig

Takeaway

Labels, whether mental or visual, influence our decisions. They annotate the world for us and have the power to create or destroy trust. Like category heuristics in shopping, labels simplify our decision-making and information filtering.

From Messy Middle:

Lastly, the idea of category heuristics, numbers customers focus on to simplify decision-making, like megapixels for cameras, offers a path to specify user behavior optimization. An ecommerce store selling cameras, for example, should optimize their product cards to prioritize category heuristics visually. Granted, you first need to gain an understanding of the heuristics in your categories, and they might vary based on the product you sell. I guess that’s what it takes to be successful in SEO these days.

Soon, labels will tell us whether content is written by AI or not. In a public survey of 23,000 respondents, Meta found that 82% of people want labels on AI content. Whether common standards and punishments work remains to be seen, but the urgency is there.

There is also an opportunity here: Labels could shine a spotlight on human writers and make their content more valuable, depending on how good AI content becomes.

On top, writing for AI could be another way to monetize content. While current hourly rates don’t make anyone rich, model training adds new value to content. Content platforms could find new revenue streams.

Web content has become highly commercialized, but AI licensing could incentivize writers to create good content again and untie themselves from affiliate or advertising income.

Sometimes, the contrast makes value visible. Maybe AI can make the web better after all.

For Data-Guzzling AI Companies, the Internet Is Too Small

Inside Big Tech’s Underground Race To Buy AI Training Data

OpenAI Gives Up On Detection Tool For AI-Generated Text

Labeling AI-Generated Images on Facebook, Instagram and Threads

How The Ad Industry Is Making AI Images Look Less Like AI

How We’re Helping Creators Disclose Altered Or Synthetic Content

Addressing AI-Generated Election Misinformation

China Is Targeting U.S. Voters And Taiwan With AI-Powered Disinformation

Google Books Is Indexing AI-Generated Garbage

Our Approach To Labeling AI-Generated Content And Manipulated Media

Featured Image: Paulo Bobita/Search Engine Journal
