
Ever wondered how websites get listed on search engines and how Google, Bing, and others provide us with tons of information in a matter of seconds?

The secret of this lightning-fast performance lies in search indexing. It can be compared to a huge and perfectly ordered catalog of all pages. Getting into the index means that the search engine has seen your page, evaluated it, and remembered it. And, therefore, it can show this page in search results.

Let's dig into the process of indexing from scratch in order to understand:

- How search engines collect and store information from billions of websites, including yours

- How you can manage this process

- What you need to know about indexing site resources built with different technologies

What is search engine indexing?

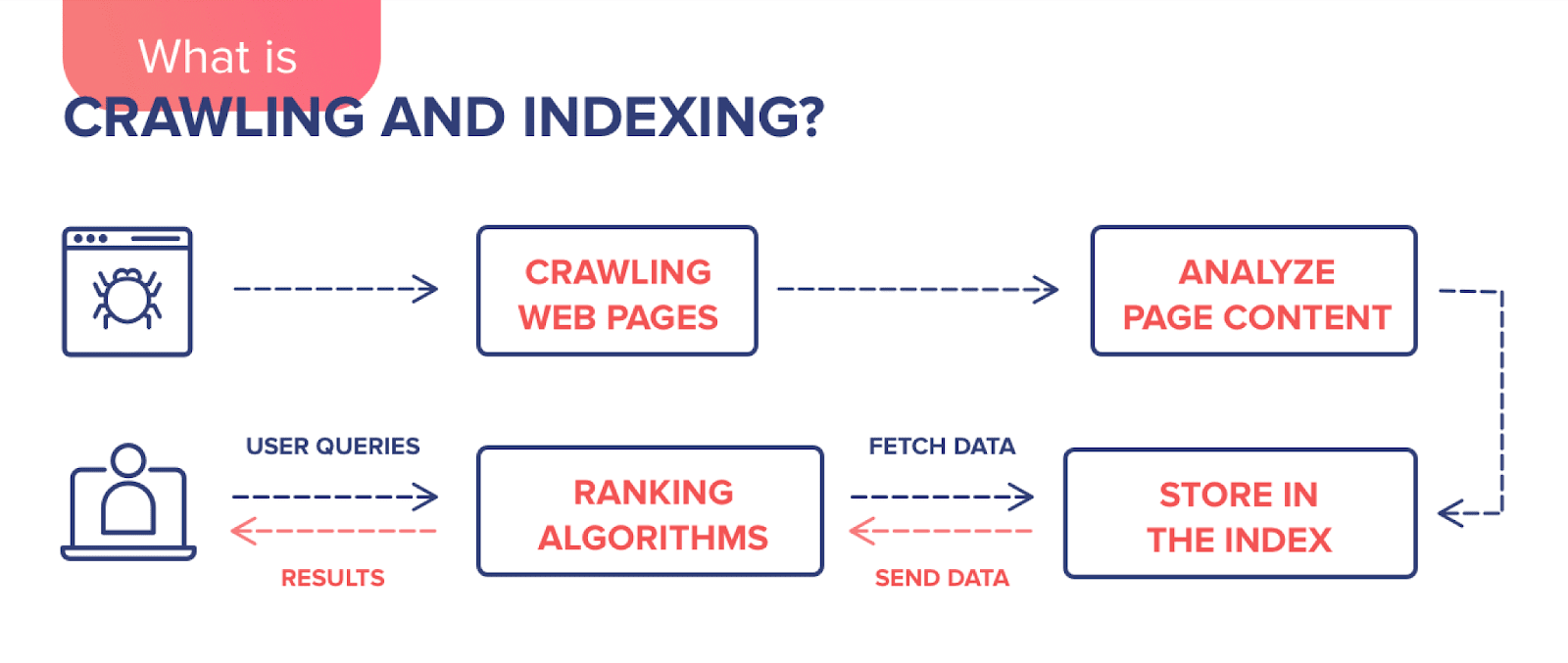

To take part in the competition for the first position in the SERP, your website has to go through a selection process:

Step 1. Web spiders (or bots) scan all the website's known URLs. This is called crawling.

Step 2. The bots collect and store data from the web pages, which is called indexing.

Step 3. And finally, the website and its pages can compete in the game, trying to rank for a specific query.

In short, if you want users to find your website on Google, it needs to be indexed: information about the page should be added to the search engine database.

The search engine scans your website to find out what it is about and what kind of content is on its pages. If the search engine likes what it sees, it can then store copies of the pages in the search index. For each page, the search engine stores the URL and content information. Here is what Google says:

"When crawlers find a webpage, our systems render the content of the page, just as a browser does. We take note of key signals, from keywords to website freshness, and we keep track of it all in the Search index."

Web crawlers index pages and their content, including text, internal links, images, audio, and video files. If the content is considered valuable and competitive, the search engine will add the page to the index, and it'll be in the "game" to compete for a place in the search results for relevant user queries.

As a result, when users enter a search query, the search engine quickly looks through its database of scanned websites and shows only relevant pages in the SERP, like a librarian looking for books in a catalog: alphabetically, by subject, and by exact title.

Keep in mind: pages are only added to the index if they contain quality content and don't trigger any alarms by doing shady things like keyword stuffing or building a bunch of links from disreputable sources. At the end of this post, we'll discuss the most common indexing errors.

What helps crawlers find your site?

If you want a search engine to find out about your website or its new pages, you have to show them to the search engine. The most popular and effective ways include submitting a sitemap to Google, using external links, engaging social media, and using special tools.

Let's dive into these ways to speed up the indexing process:

1. Submitting your sitemap to Google

To make sure we are on the same page, let's first refresh our memories. The XML sitemap is a list of all the pages on your website (an XML file) that crawlers need to be aware of. It serves as a navigation guide for bots. The sitemap does help your website get indexed faster with a more efficient crawl rate.

Furthermore, it can be especially helpful if your content is not easily discoverable by a crawler. It is not, however, a guarantee that those URLs will be crawled or indexed.
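To make the format concrete, here is a minimal sketch in JavaScript (Node.js) that builds a sitemap string from a list of page URLs. The helper names and the example URLs are hypothetical; the output follows the sitemaps.org protocol (a <urlset> of <url><loc> entries), and a real generator would usually add optional fields such as <lastmod>.

```javascript
// Minimal sketch: build an XML sitemap (sitemaps.org protocol) from page URLs.
// buildSitemap and escapeXml are hypothetical helpers, not part of any library.

// Escape the characters that XML does not allow raw inside <loc>.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

function buildSitemap(urls) {
  const entries = urls
    .map((url) => `  <url>\n    <loc>${escapeXml(url)}</loc>\n  </url>`)
    .join("\n");
  return (
    '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    entries +
    "\n</urlset>\n"
  );
}

// Usage with placeholder URLs; note the "&" in the second one gets escaped.
const xml = buildSitemap([
  "https://yourwebsite.com/",
  "https://yourwebsite.com/blog?page=2&sort=new",
]);
console.log(xml);
```

The resulting file is what you would upload to yourwebsite.com/sitemap.xml and submit in Search Console.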

If you still don't have a sitemap, take a look at our guide to successful SEO mapping.

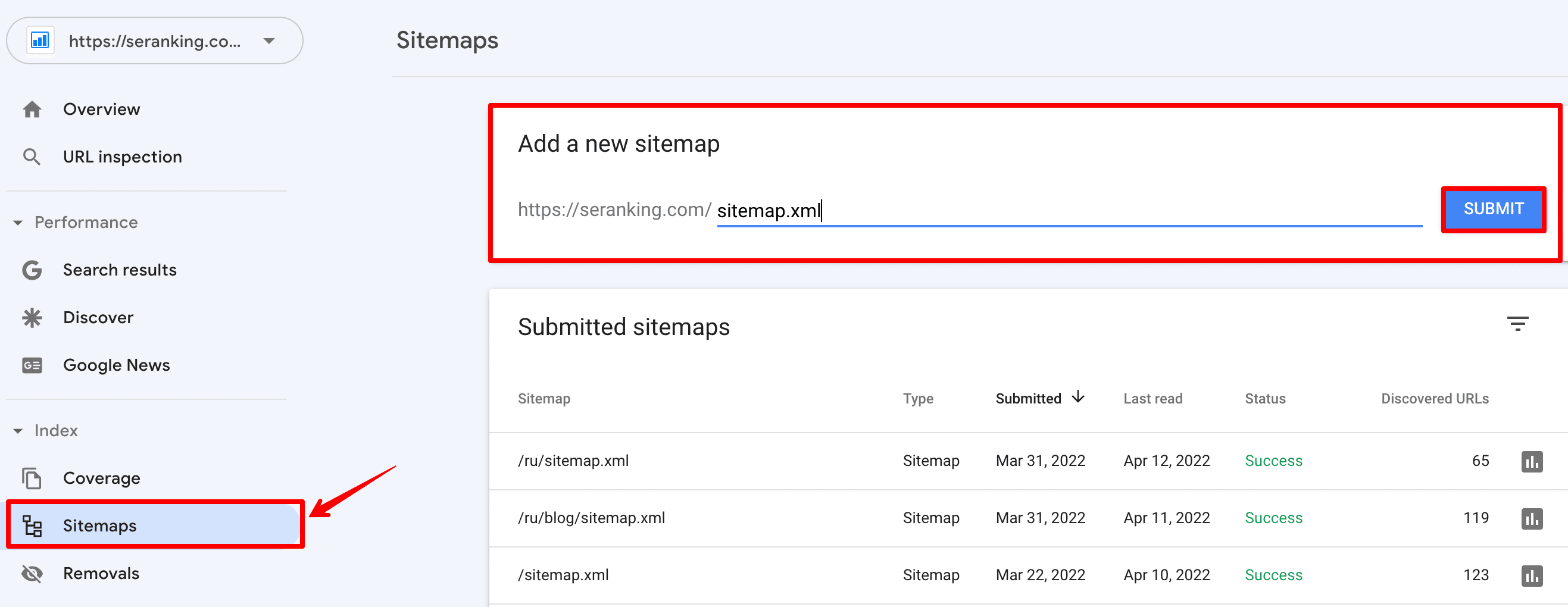

Once you have your sitemap ready, go to your Google Search Console and:

Open the Sitemaps report ▶️ Click Add a new sitemap ▶️ Enter your sitemap URL (normally, it is located at yourwebsite.com/sitemap.xml) ▶️ Hit the Submit button.

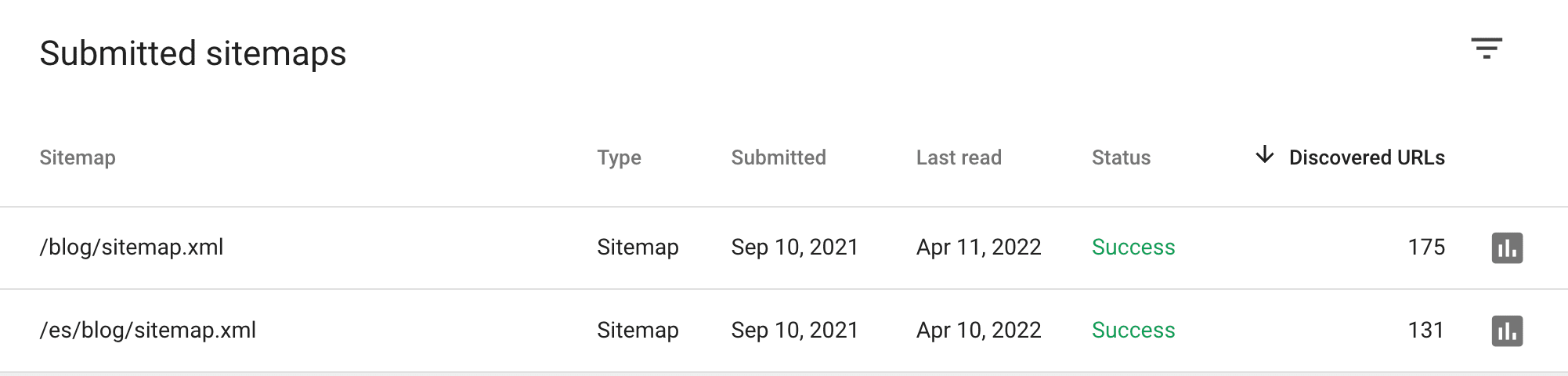

Soon, you'll see whether Google was able to process your sitemap properly. If everything went well, the status will be Success.

In the same table of your Sitemaps report, you'll see the number of discovered URLs. By clicking the icon next to that number, you'll get to the Index Coverage report. Below, I'll explain point by point how to use this report to check your website indexing.

2. Placing links on external resources

Backlinks are a cornerstone of how search engines understand the importance of a page. They signal to Google that the resource is useful and that it's worth getting to the top of the SERP.

Recently, during Google SEO office hours, John Mueller said, "Backlinks are the best way to get Google to index content." In his words, you can submit a sitemap with URLs to GSC. There is no disadvantage to doing that, especially if it's a new website, where Google has absolutely no signals or information about it at all. Telling the search engine about the URLs via a sitemap is a way of getting that first foot in the door. But it's no guarantee that Google will pick them up.

John Mueller advises webmasters to cooperate with different blogs and resources and get links pointing to their websites. That would most likely do more than just going to Search Console and saying, "I want this URL indexed immediately."

Here are a few ways to get quality backlinks:

- Guest posting: publish your high-quality posts with the necessary links on other people's blogs and reputable websites (e.g., Forbes, Entrepreneur, Business Insider, TechCrunch).

- Creating press releases: inform the audience about your brand by publishing noteworthy news about your company, product updates, and important events on different websites.

- Writing testimonials: find companies that are relevant to your industry and submit a testimonial in exchange for a backlink.

- Social media linking: don't forget about Facebook, Instagram, LinkedIn, and YouTube. Add links to your website pages in posts. Social media is a cost-effective tool that can help drive traffic to your site, boost brand awareness, and improve your SEO. By the way, we'll talk about social signals below.

- Other popular strategies to get backlinks are described in this article.

3. Improving social signals

Search engines want to provide users with high-quality content that meets their search intent. To do so, Google takes into account social signals: likes, shares, and views of social media posts. All of them tell search engines that the content meets the needs of their users and is relevant and authoritative. If users actively share your page, like it, and recommend it for reading, search bots will not pass such content by. That's why it's very important to be active on social media.

Mind that Google says social signals are not a direct ranking factor. Still, they can help SEO indirectly. Google's partnership with Twitter, which added tweets to the SERP, is further evidence of the growing importance of social media in search rankings.

Social signals include all activity on Facebook, Twitter, Pinterest, LinkedIn, Instagram, YouTube, etc. Instagram lets you use the Swipe Up feature to link to your landing pages. With Facebook, you can create a post for each important link. On YouTube, you can add a link to the video description. LinkedIn allows you to raise your website and company credibility. Understanding the individual platforms you're targeting lets you tailor your approach to maximize your website's effectiveness.

There are a few things to remember:

- Post daily: regularly updated content on social channels tells people and Google that your website and company are active.

- Post relevant content: your content should be about your company, industry, and brand; this is what your followers are waiting for.

- Create shareable content: memes, infographics, and research findings always get a lot of likes and reposts.

- Optimize your social profile: make sure to add the link to your website in the account info section.

As a rule of thumb, the more social buzz you generate around your website, the faster your website will get indexed.

4. Using Add URL tools

Another way to signal that a new website page exists and try to speed up its indexing is to use Add URL tools. They allow you to request indexing of URLs. This option is available in GSC and in other special services. Let's take a look at different Add URL tools.

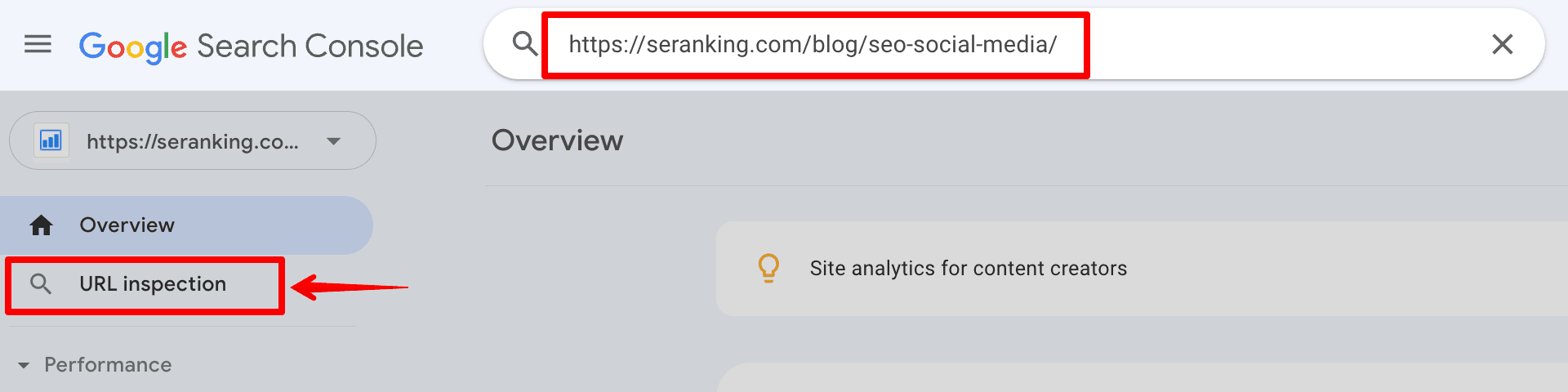

Google Search Console

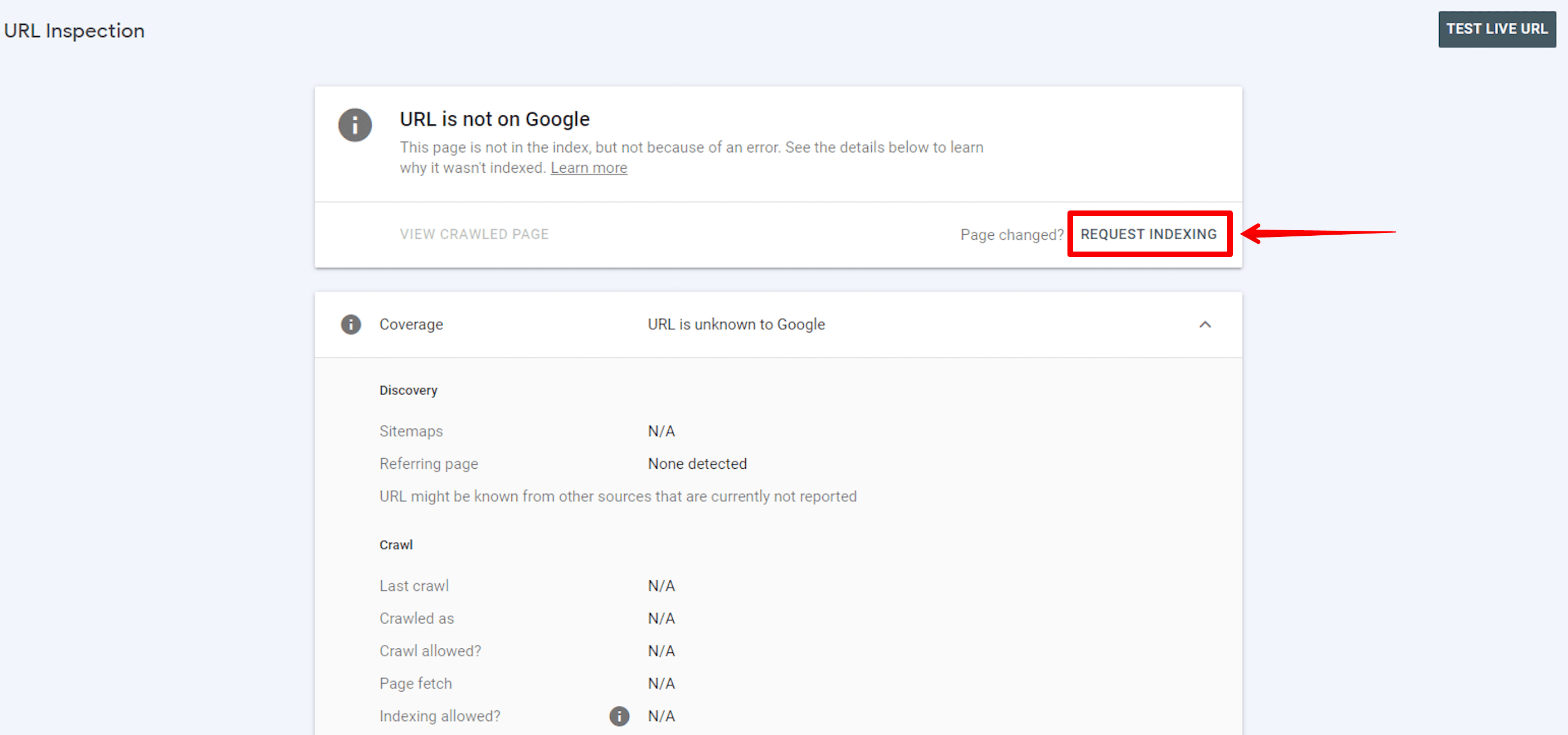

At the beginning of this chapter, I described how to add a sitemap with lots of website links. But if you need to add one or a few links for indexing, you can use another GSC option. With the URL Inspection tool, you can request a crawl of individual URLs.

Go to your Google Search Console dashboard, click on the URL Inspection section, and enter the desired page address in the search bar.

If a page has been created recently, it may not be indexed yet. In that case, you will see a message about this, and you can request indexing of the URL. Just press the Request Indexing button.

All URLs with new or updated content can be submitted for indexing this way via Google Search Console.

Ping services

Pinging is another way of alerting search engines and letting them know instantly about a new piece of content.

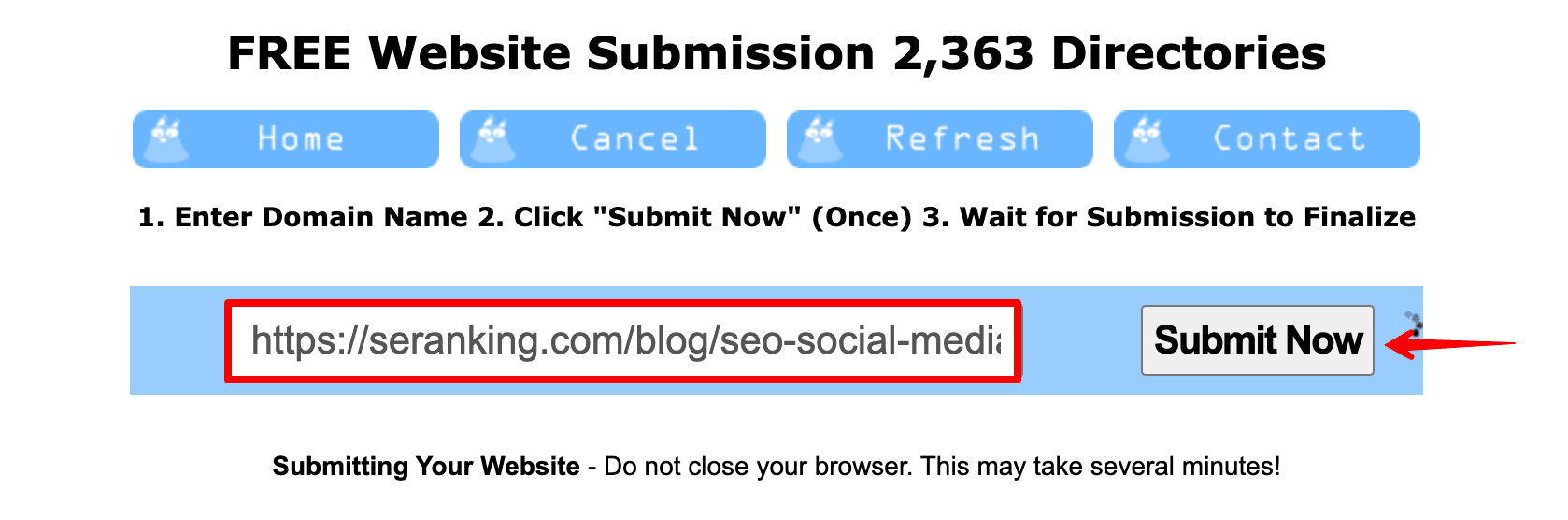

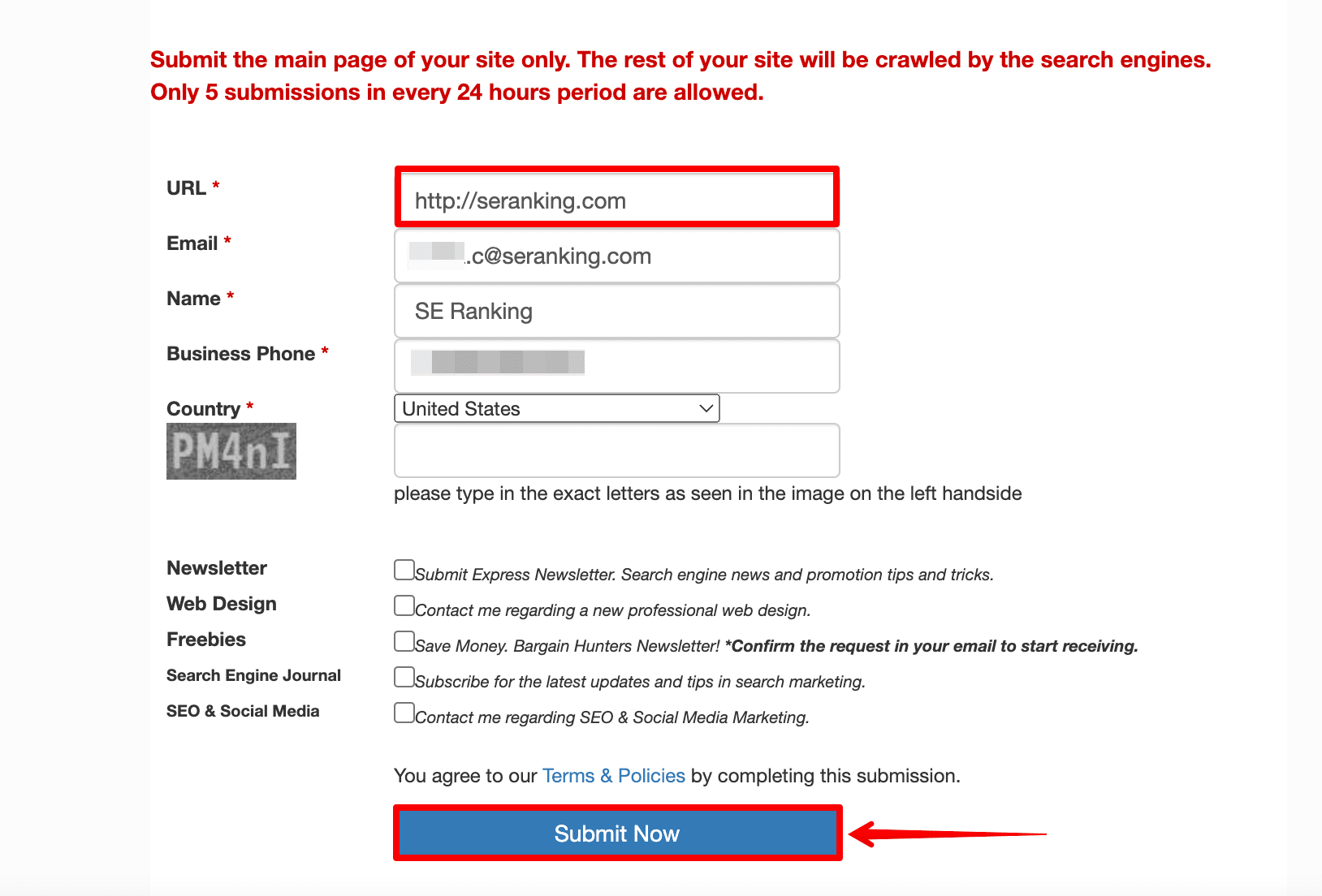

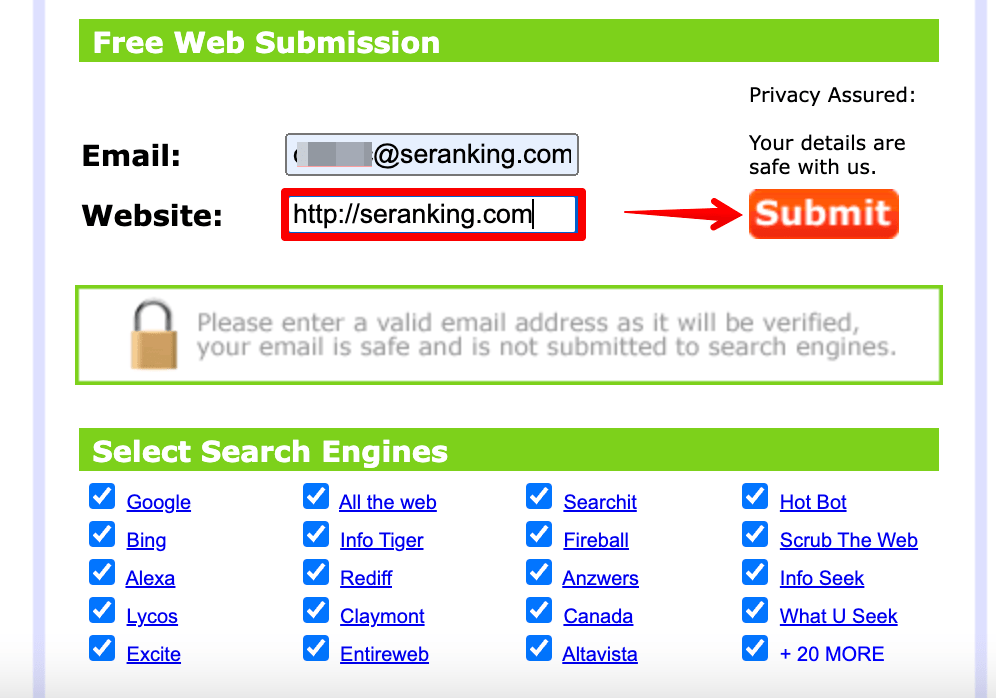

Usually, such submission services use the Google API or ping tools: programs designed to speed up indexing of new pages and improve your SEO ranking by sending signals to search engines.

With their help, you can send pages to be indexed by dozens of different search engines in one go. Just complete a few simple steps:

Enter URL ▶️ Click Submit Now ▶️ Wait for Submission

Here are three of the most popular ping tools:

Keep in mind: requesting a crawl does not guarantee that inclusion in search results will happen instantly or even at all.

How to check your website indexing?

You have submitted your website pages for indexing. How do you know that indexing was successful and the necessary pages are already in the index? Let's look at methods you can use to check your website indexing.

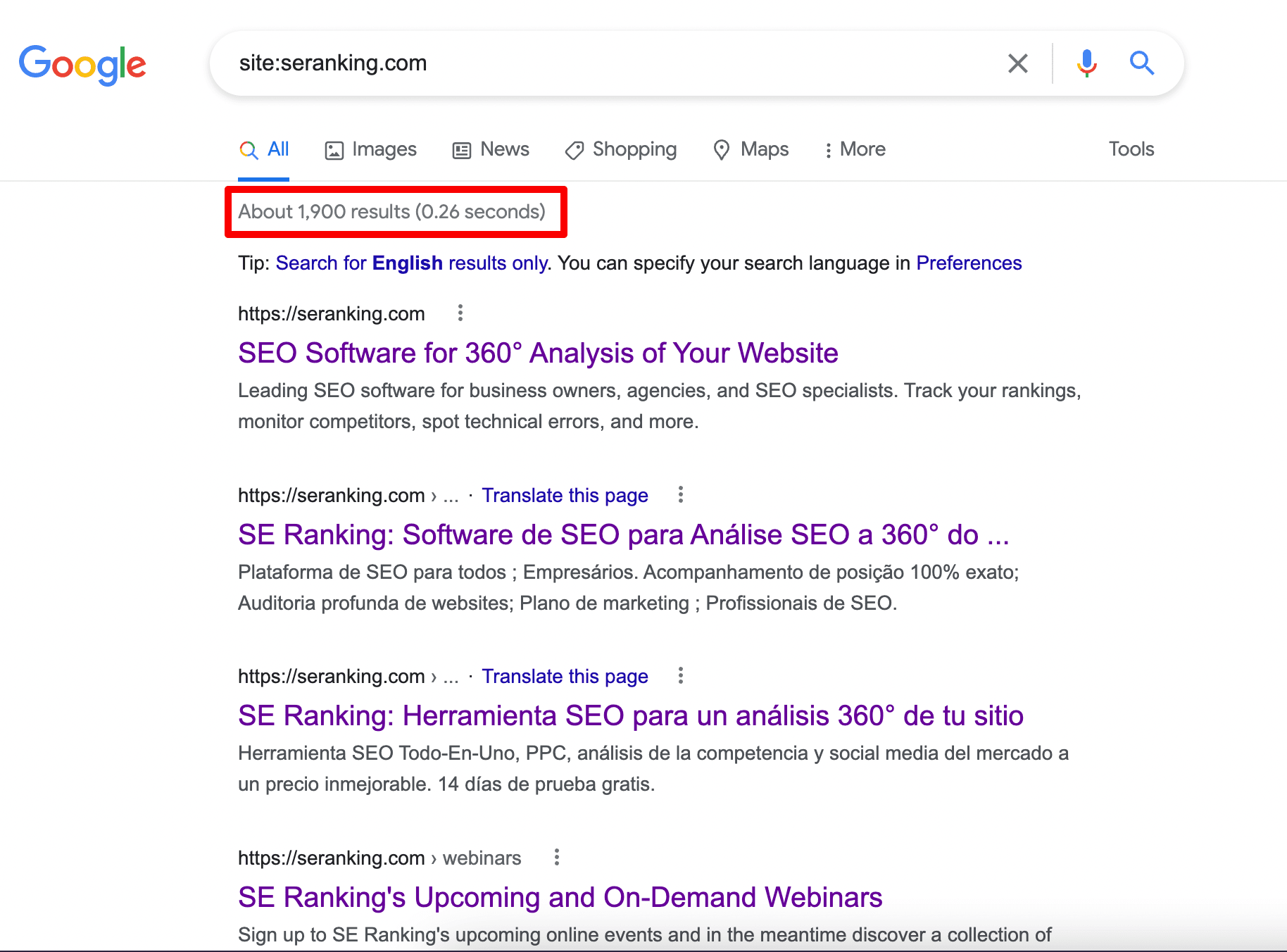

Use Google's site: search operator

The easiest way to check indexed URLs is to search site:yourdomain.com in Google. This Google command limits your search to the pages of a specified resource. If Google knows your website exists and has already crawled it, you'll see a list of results similar to the screenshot below.

Here, you can see the website's indexed pages.

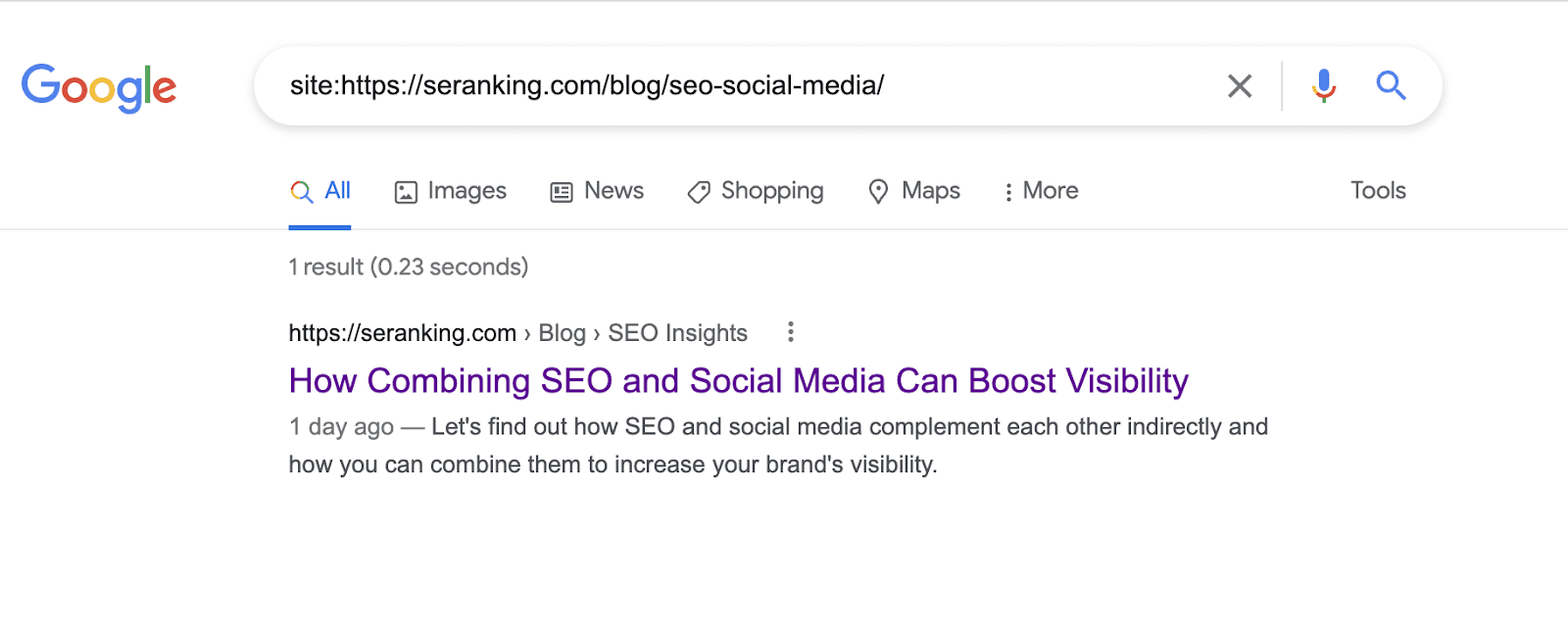

You can also check the indexing of a single page. Just enter the page URL after site: instead of the domain.

This is the easiest and fastest way to check indexing. However, the search operator gives you a very limited amount of data. Therefore, if you want to go deeper into the analysis of your website pages, you should pay close attention to the services described below.
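Under the hood, a site: check is just a Google search URL with the operator in the q parameter. This small sketch (siteQueryUrl is a hypothetical helper, not a Google API) builds that URL for a whole domain or a single page and percent-encodes the query, so characters such as ? and & in a page URL don't break it.

```javascript
// Minimal sketch: build the Google search URL for a "site:" check.
// siteQueryUrl is a hypothetical helper name.
function siteQueryUrl(target) {
  // URLSearchParams percent-encodes the query (":" becomes "%3A", "/" becomes "%2F").
  const params = new URLSearchParams({ q: `site:${target}` });
  return `https://www.google.com/search?${params.toString()}`;
}

console.log(siteQueryUrl("yourdomain.com"));
// https://www.google.com/search?q=site%3Ayourdomain.com
console.log(siteQueryUrl("yourdomain.com/blog/post?id=7"));
```

Opening the printed URL in a browser gives the same result as typing the operator into Google by hand.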

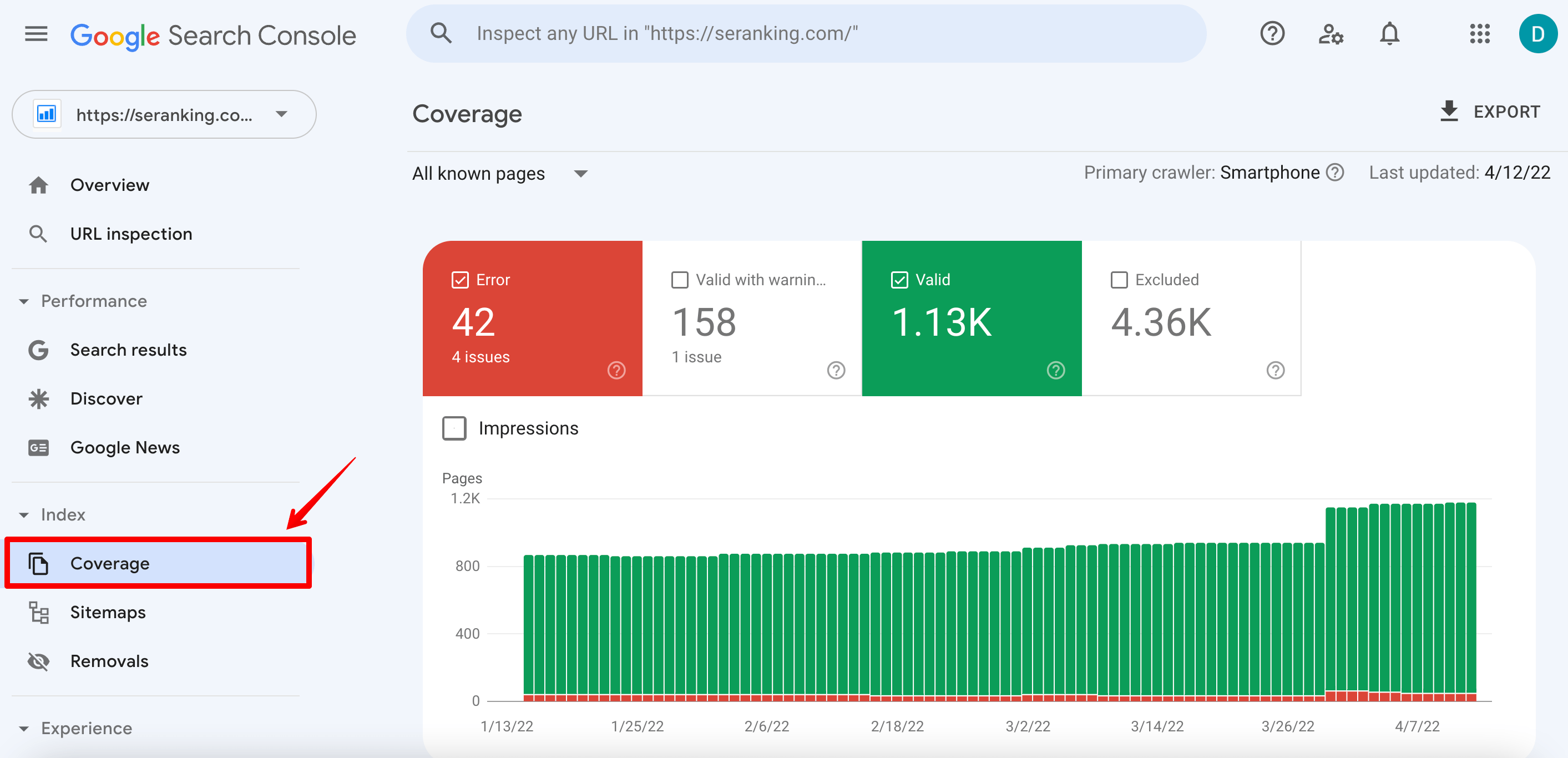

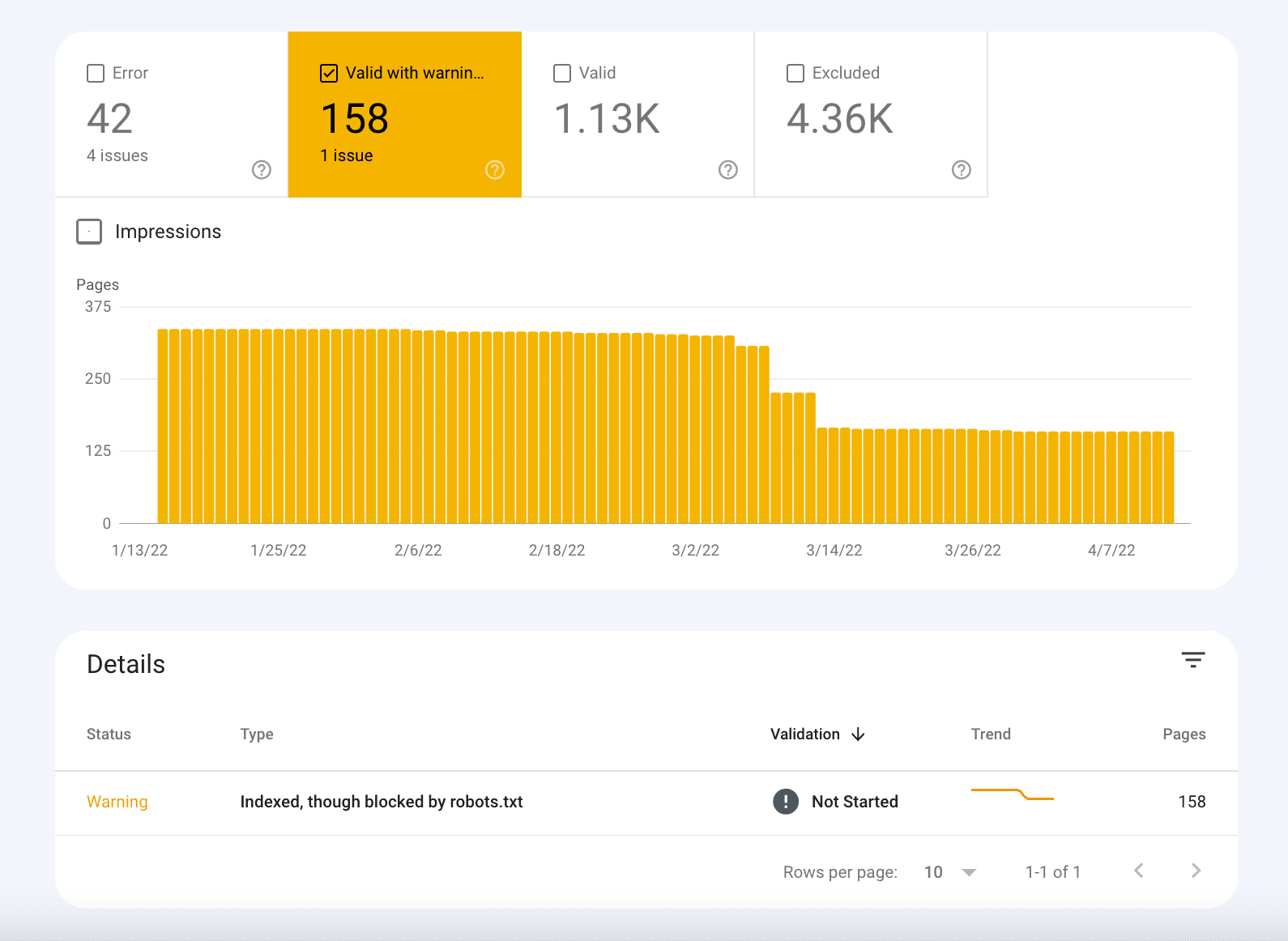

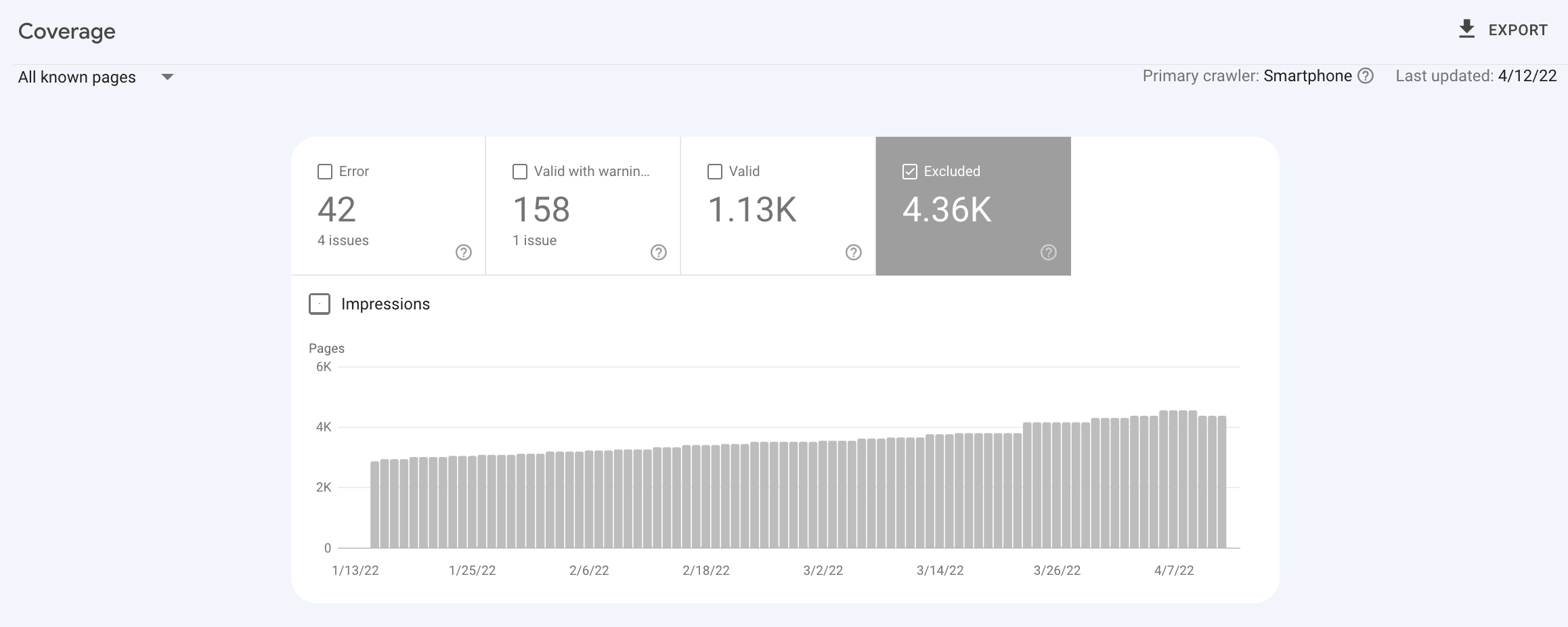

Analyze the Coverage report in GSC

Google Search Console allows you to monitor which of your website pages are indexed, which are not, and why. We'll show you how to check this.

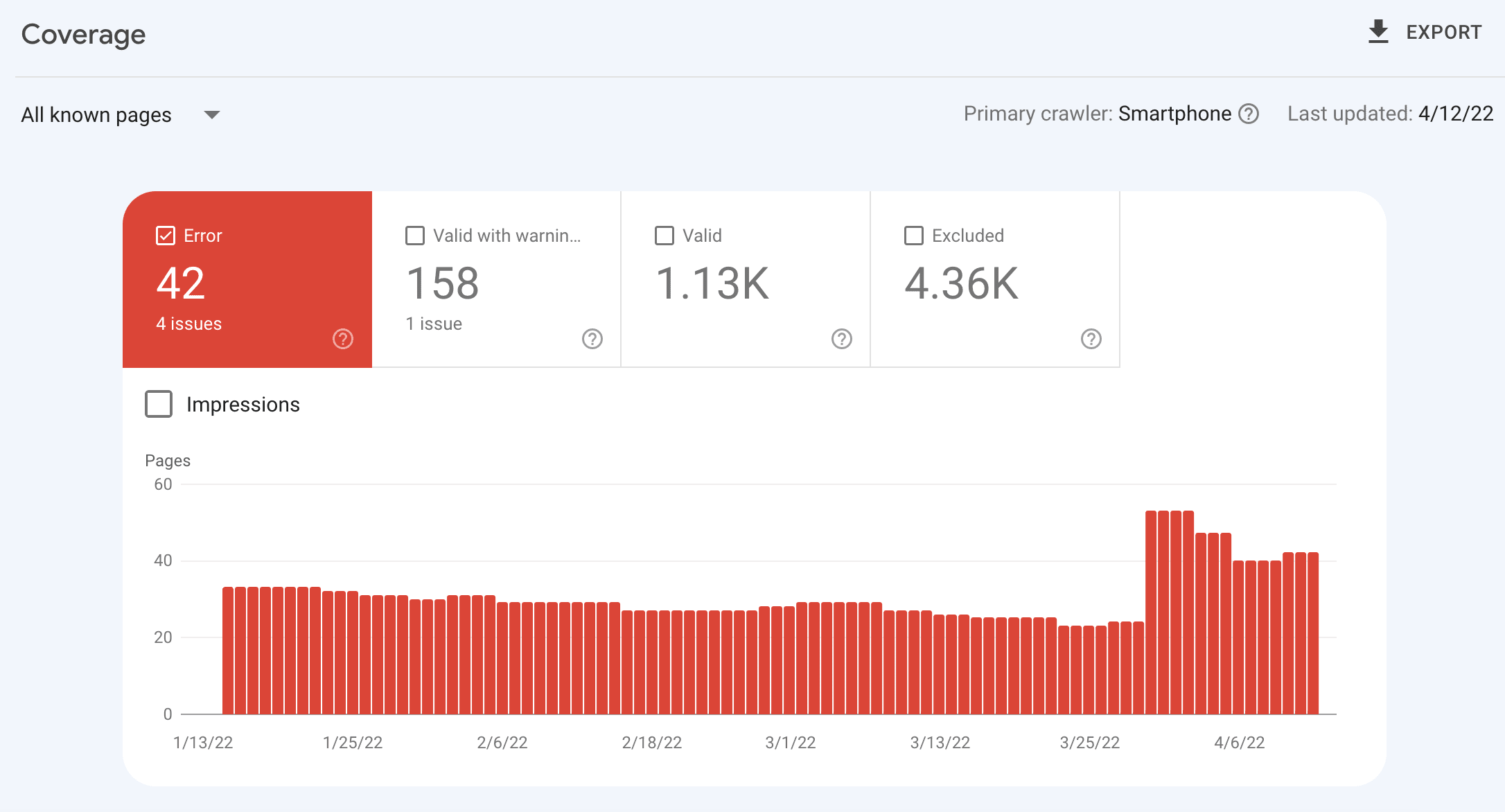

First, click on the Index section and go to the Coverage report.

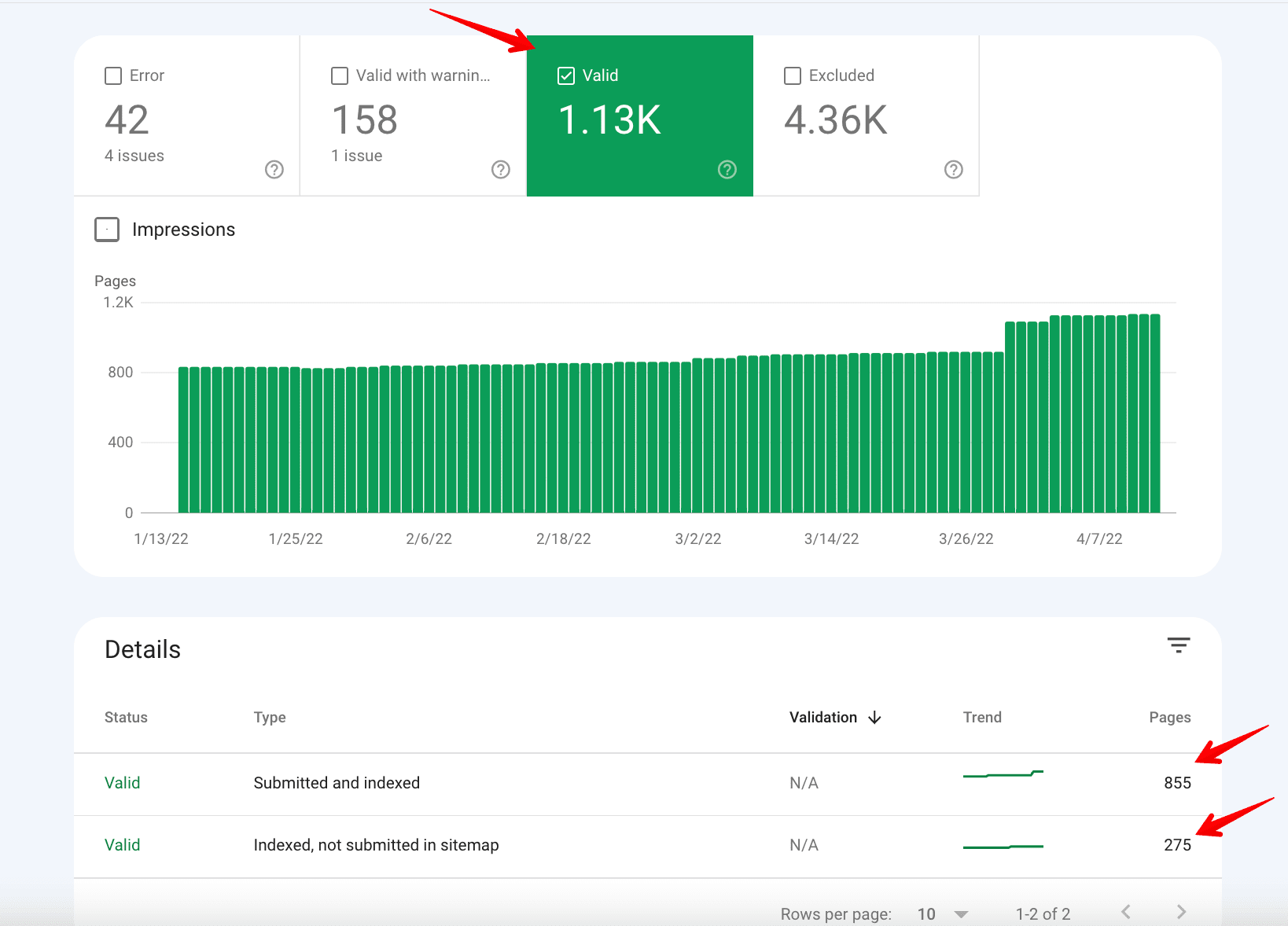

Then click on the Valid tab: it contains information about all the indexed website pages.

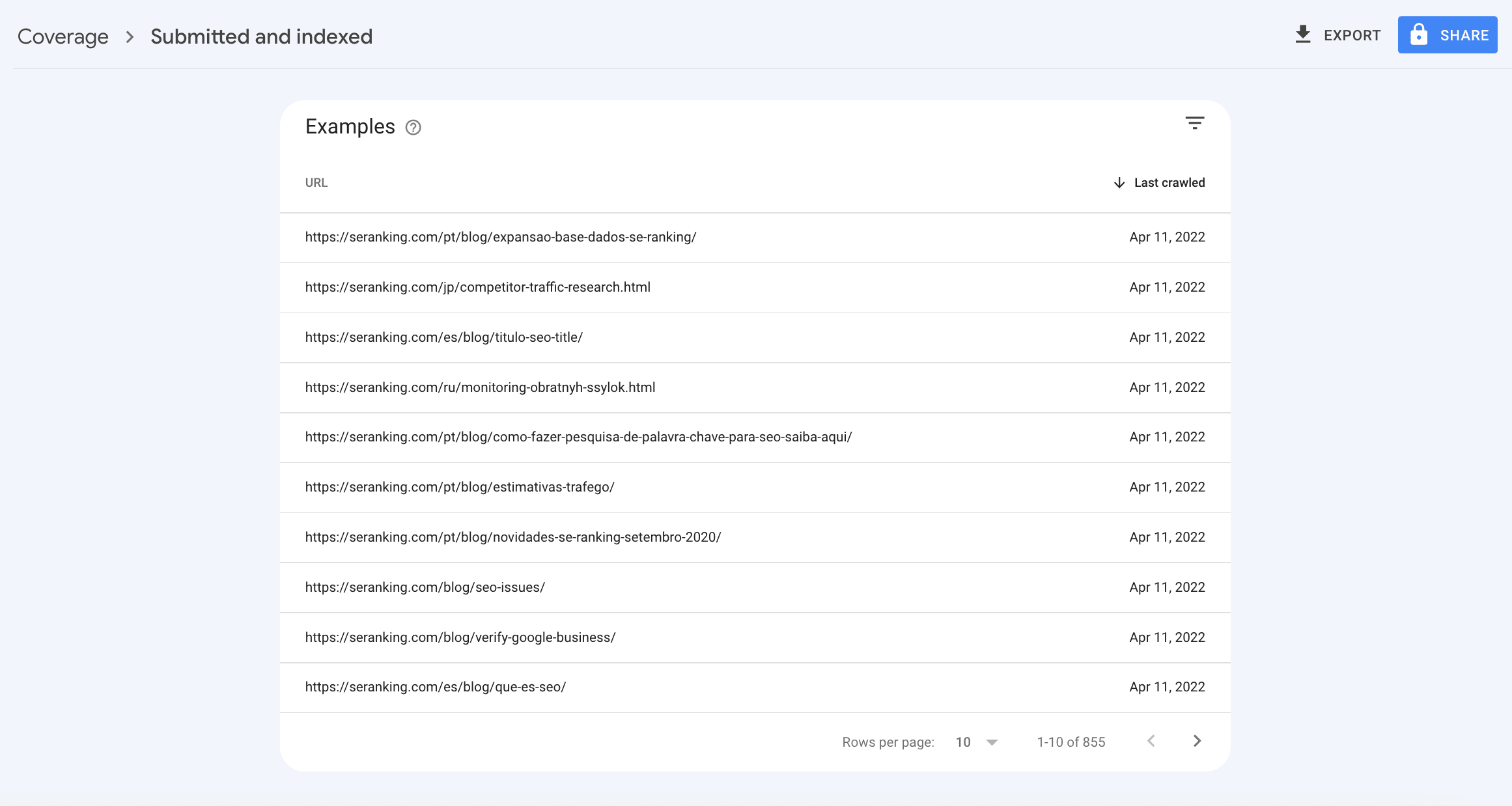

Scroll down. You'll see a table with two groups of indexed pages: Submitted and indexed, and Indexed, not submitted in sitemap. Click into the first row to learn more about the pages that were submitted in the sitemap and indexed. You can also find out when each page was last crawled by Google.

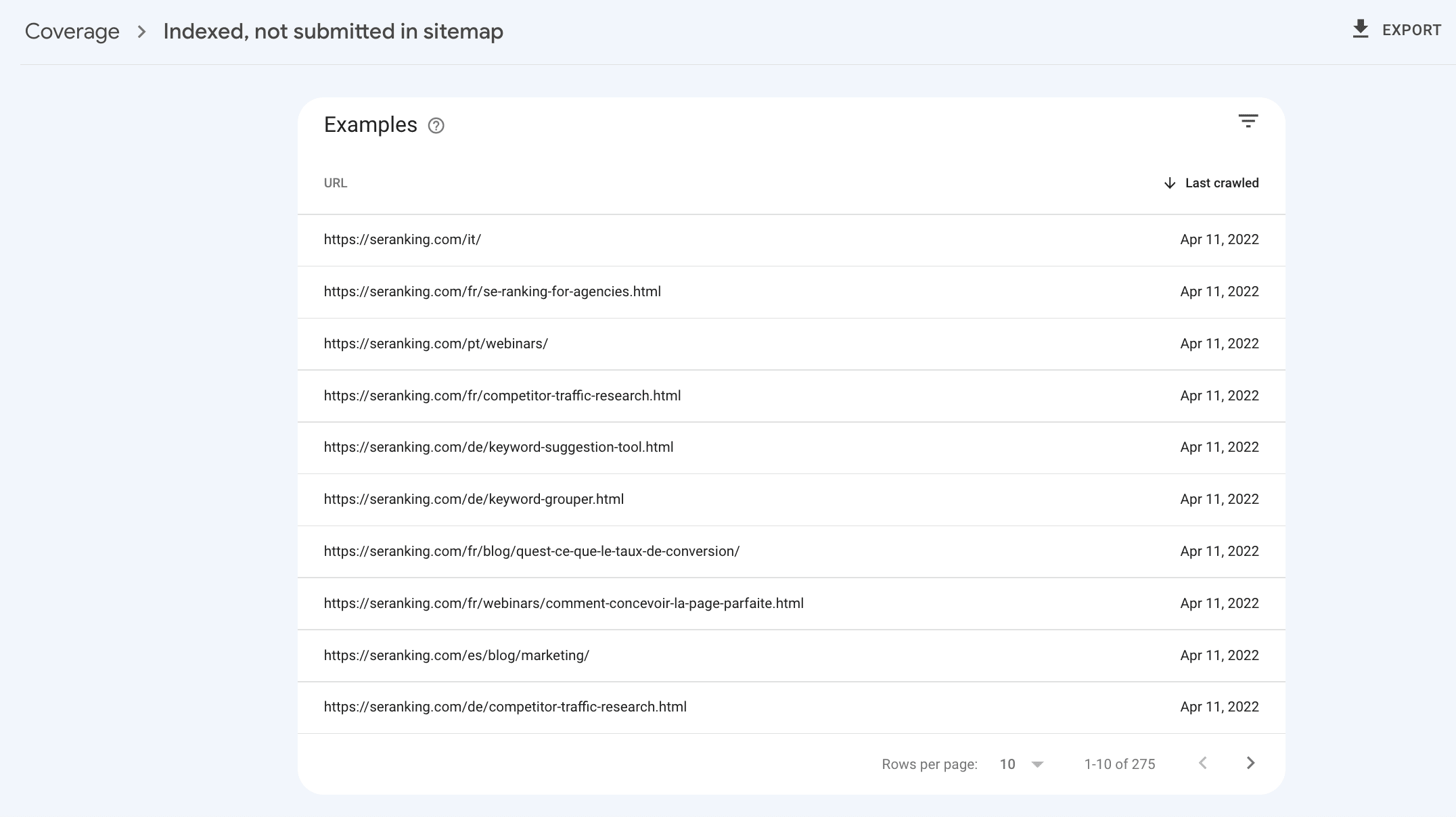

Then click into the second row to see the indexed pages that were not submitted in the sitemap. You may want to add them to your sitemap, since Google has already found them worth indexing.

Now, let's move on to the next stage.

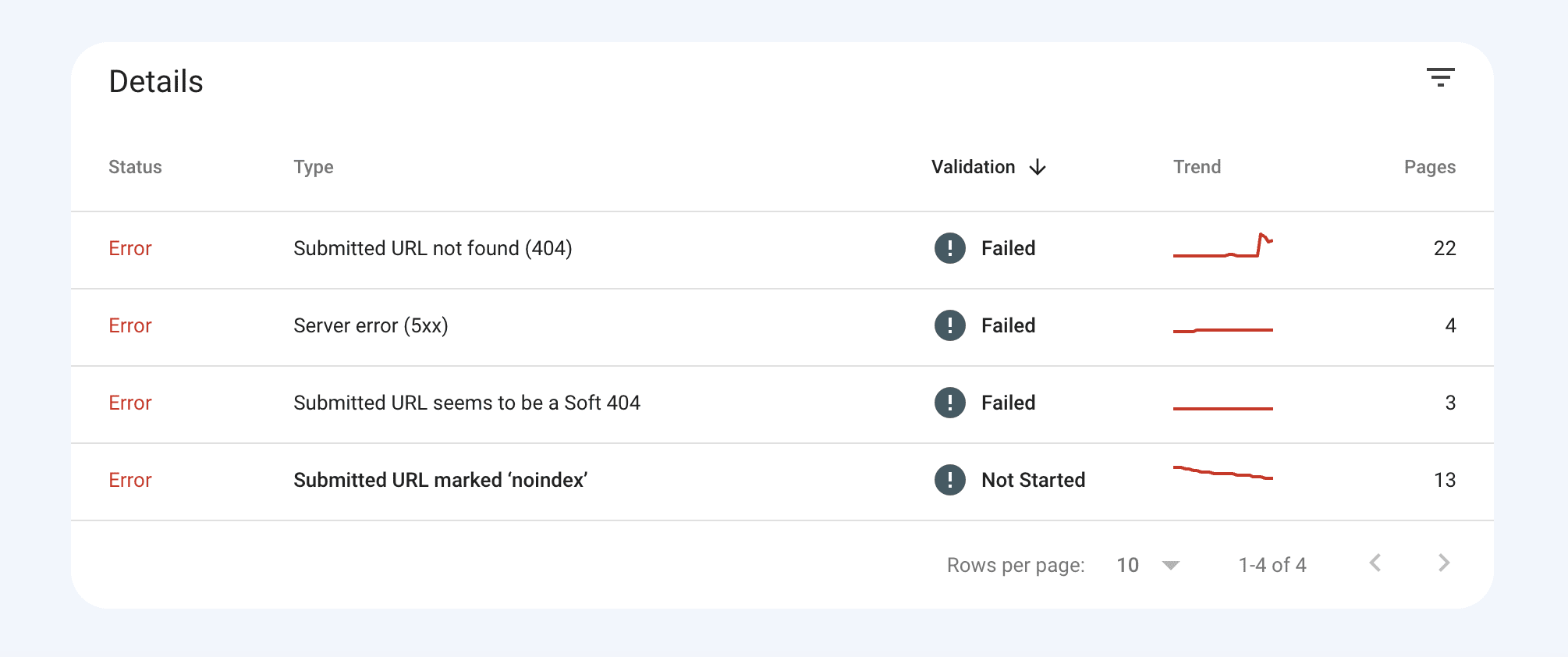

The Error tab shows pages that couldn't be indexed for some reason.

Click into an error row in the table to see details, including how to fix it.

The Valid with warnings tab shows pages that have been indexed but have some issues, which may or may not be intentional on your part. Click into a warning row in the table to see details and try to fix the issues. This will help you rank better.

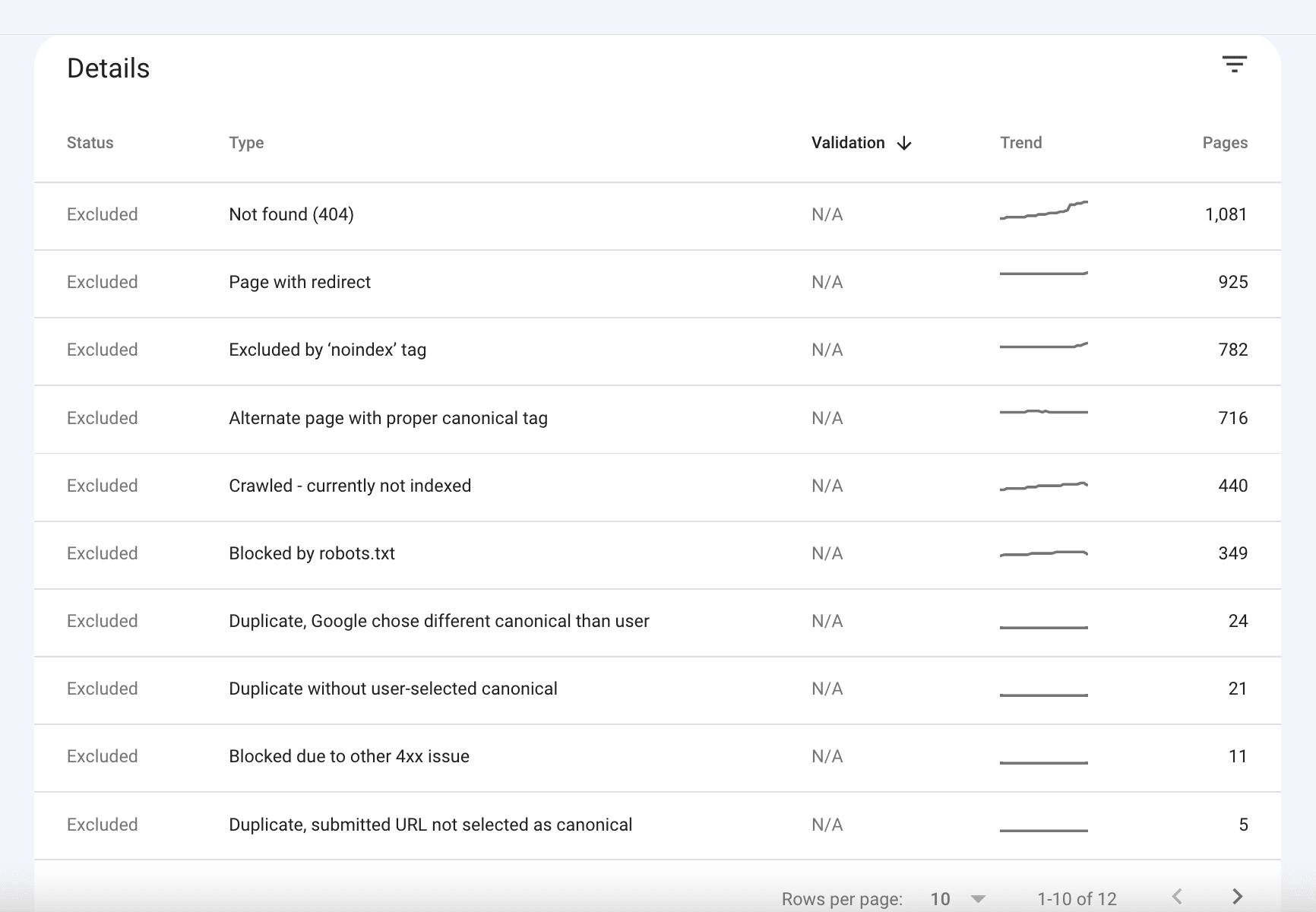

And the last tab is Excluded. These are pages that were not indexed.

Click into a row in the table to see details.

Look through all these pages carefully: you may find URLs that can be fixed so that Google will index them and start ranking them. Pay attention to the reason listed for each page and work on it, if necessary.

Use special tools

In addition to Google Search Console and the search operator, you can also use other tools to check indexing. I'll tell you about the simplest and most effective ones.

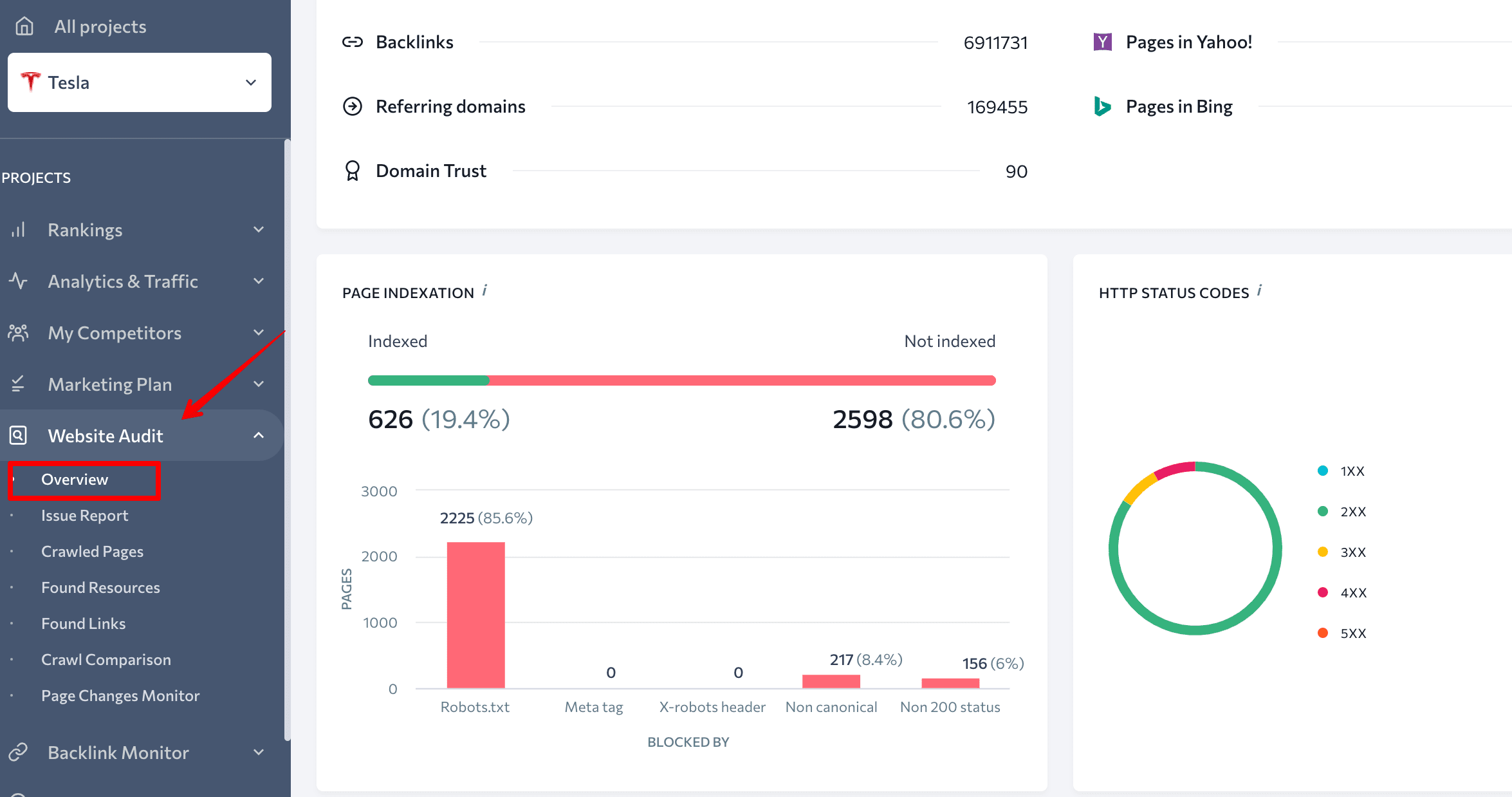

SE Ranking

In SE Ranking's Website Audit tool, you will find information about website indexing.

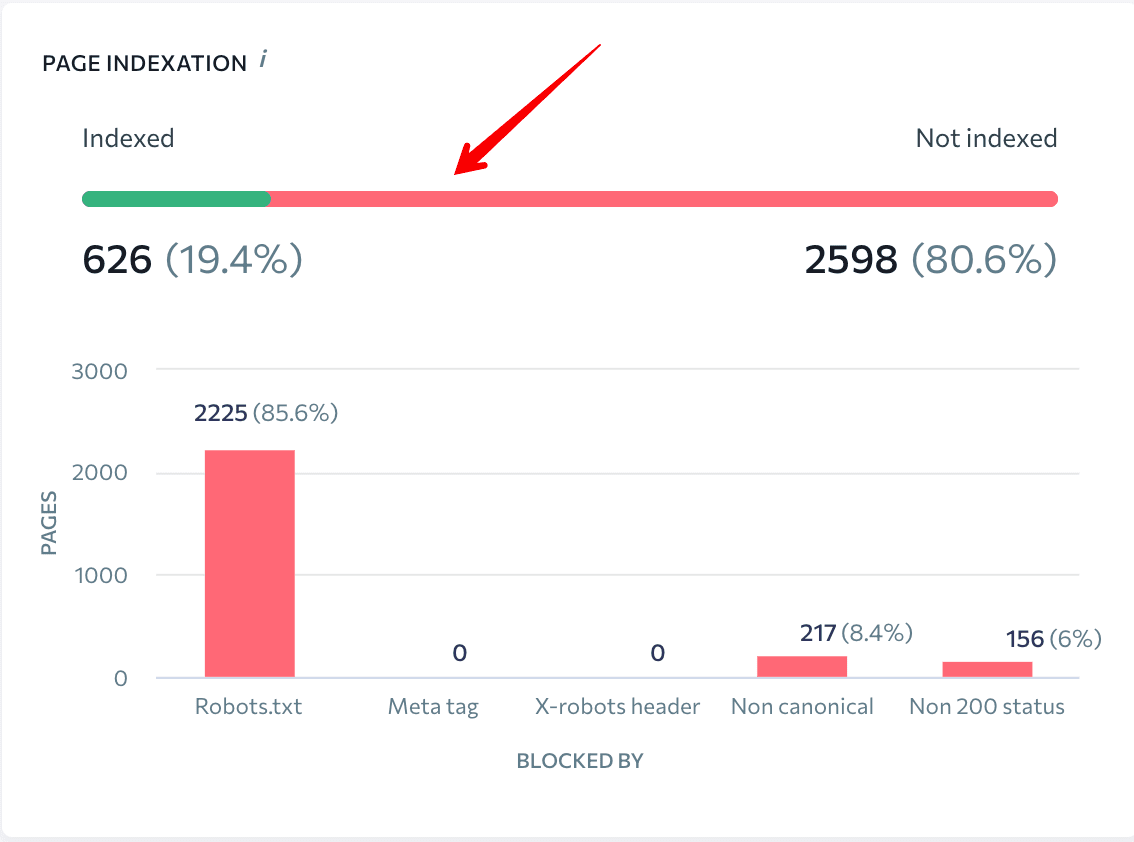

Go to the Overview and scroll to the Page Indexation block.

Here, you'll see a graph of indexed and non-indexed pages, their number, and their percentage ratio. This dashboard also shows issues that prevent search engines from indexing the website's pages. You can view a detailed report by clicking on the graph.

By clicking on the green line, you'll see the list of indexed pages and their parameters: status code, blocked by robots.txt, referring pages, x-robots-tag, title, description, etc.

Then click on the red line: you'll see the same table, but with pages that were not indexed.

This extensive information will help you find and fix issues so that you can be sure all important website pages are indexed.

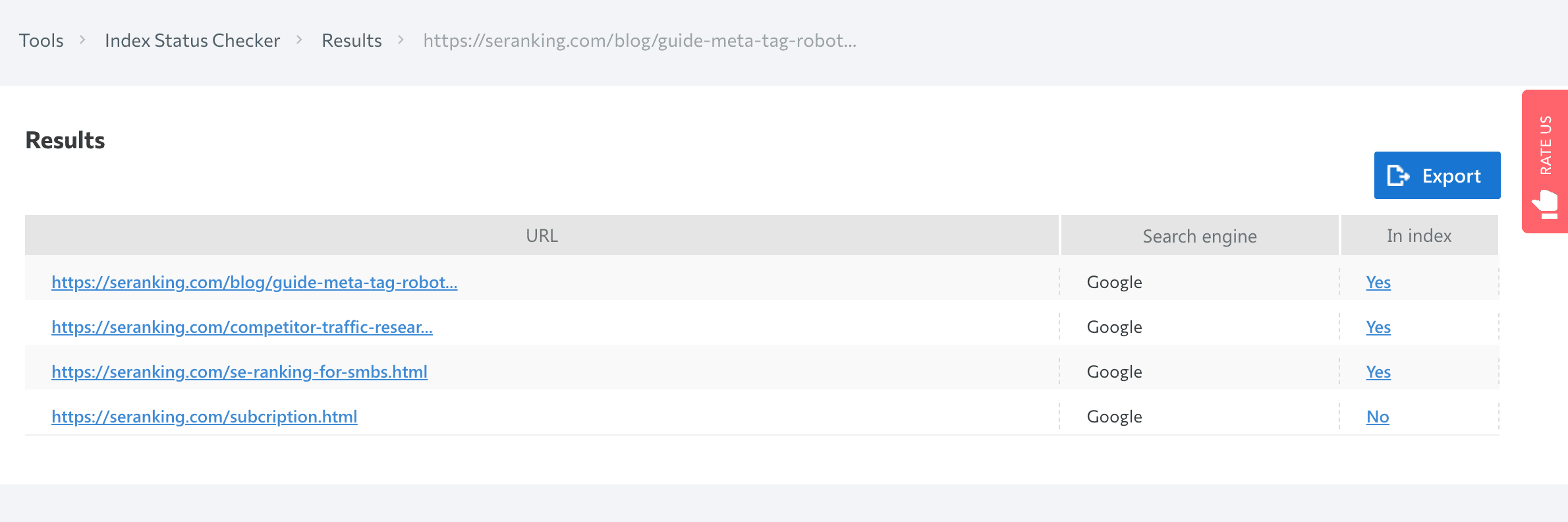

You can also check page indexing with SE Ranking's Index Status Checker. Just choose the search engine and enter a list of URLs.

Prepostseo

Prepostseo is another tool that helps you check website indexing.

Just paste the website URL or the list of URLs that you want to check and click on the Submit button. You'll get a results table with two values for each URL:

- By clicking the Full website indexed page link, you will be redirected to a Google SERP where you can find a full list of indexed pages of that specific domain.

- By clicking the Check current page only link, you will be redirected to a results page where you can check whether that exact URL is listed in Google.

With this website index checker, you can check 1,000 pages at once.

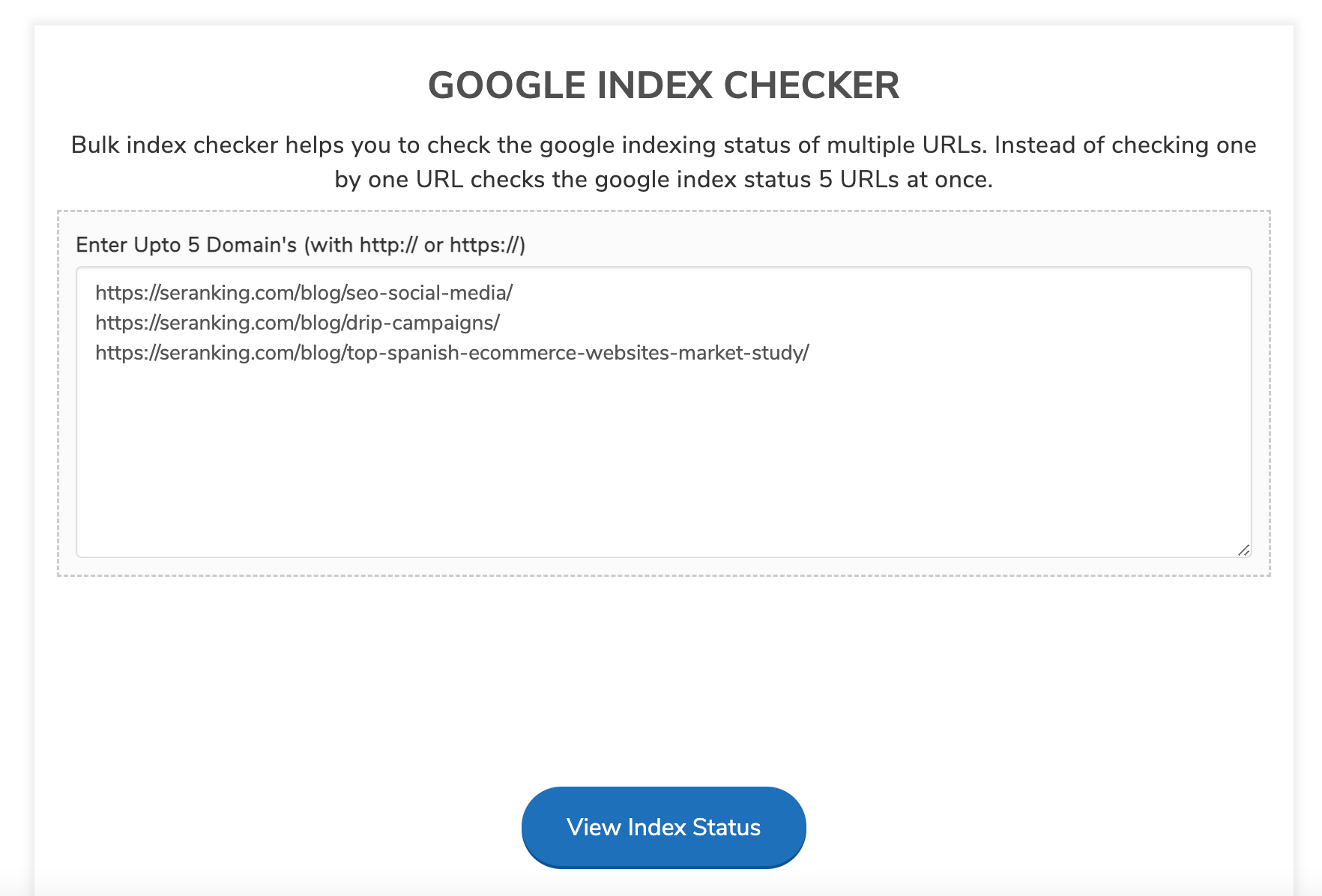

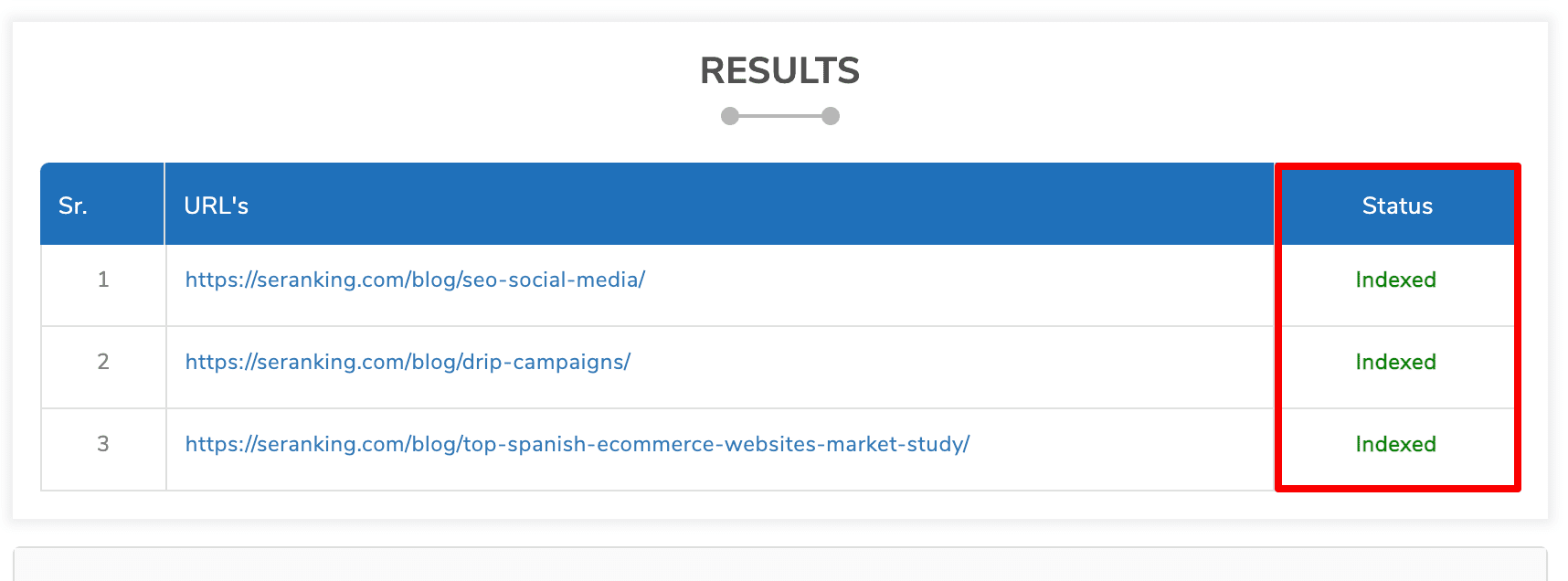

Small SEO Tools

This index checker by Small SEO Tools also helps you check the Google indexing status of multiple URLs. Simply enter the URLs and click on the Check button, and the tool will process your request.

You'll see a table with information about the indexing of each URL.

Here, you can check the Google index status of 5 URLs at once.

Check indexing in Google Sheets

You can scrape a lot of things with Google Sheets; for example, you can use the script described below.

It searches for site:domain.com in Google to check whether there are indexed results for the input URL. Let's see step by step how it works.

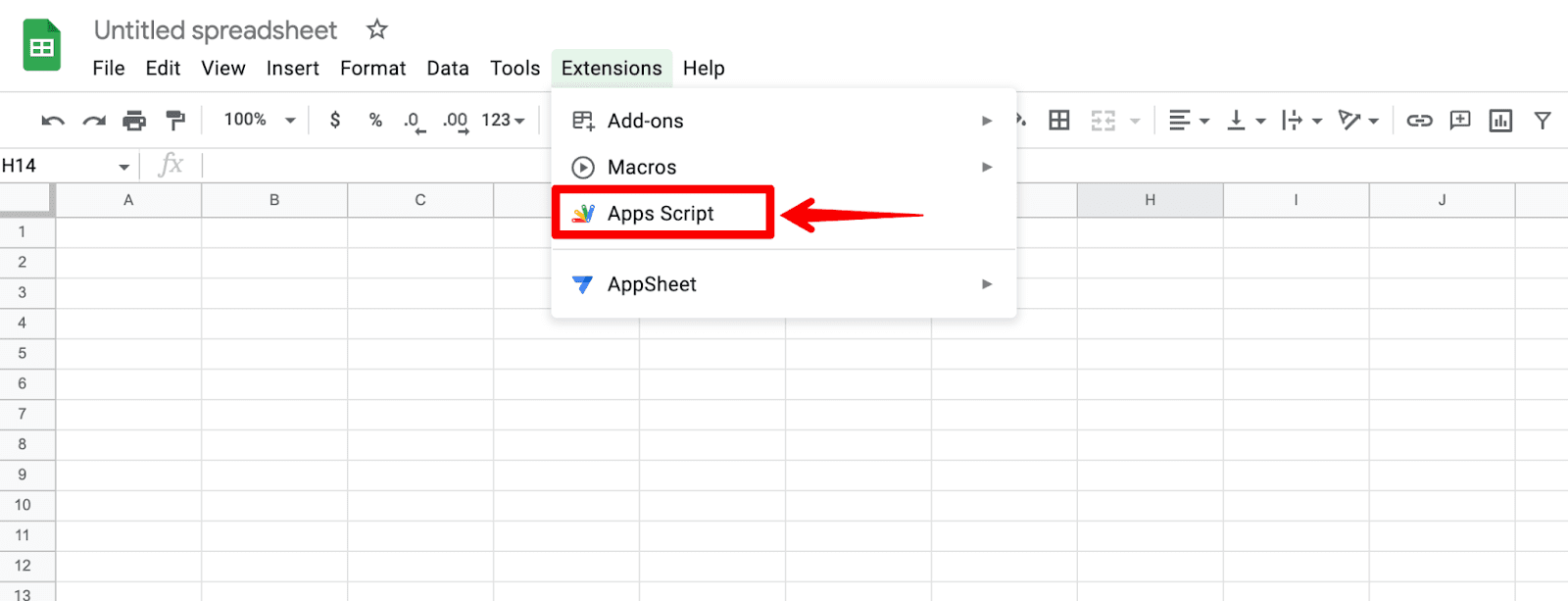

Step 1. Open Google Sheets ▶️ Go to Extensions ▶️ Click on Apps Script

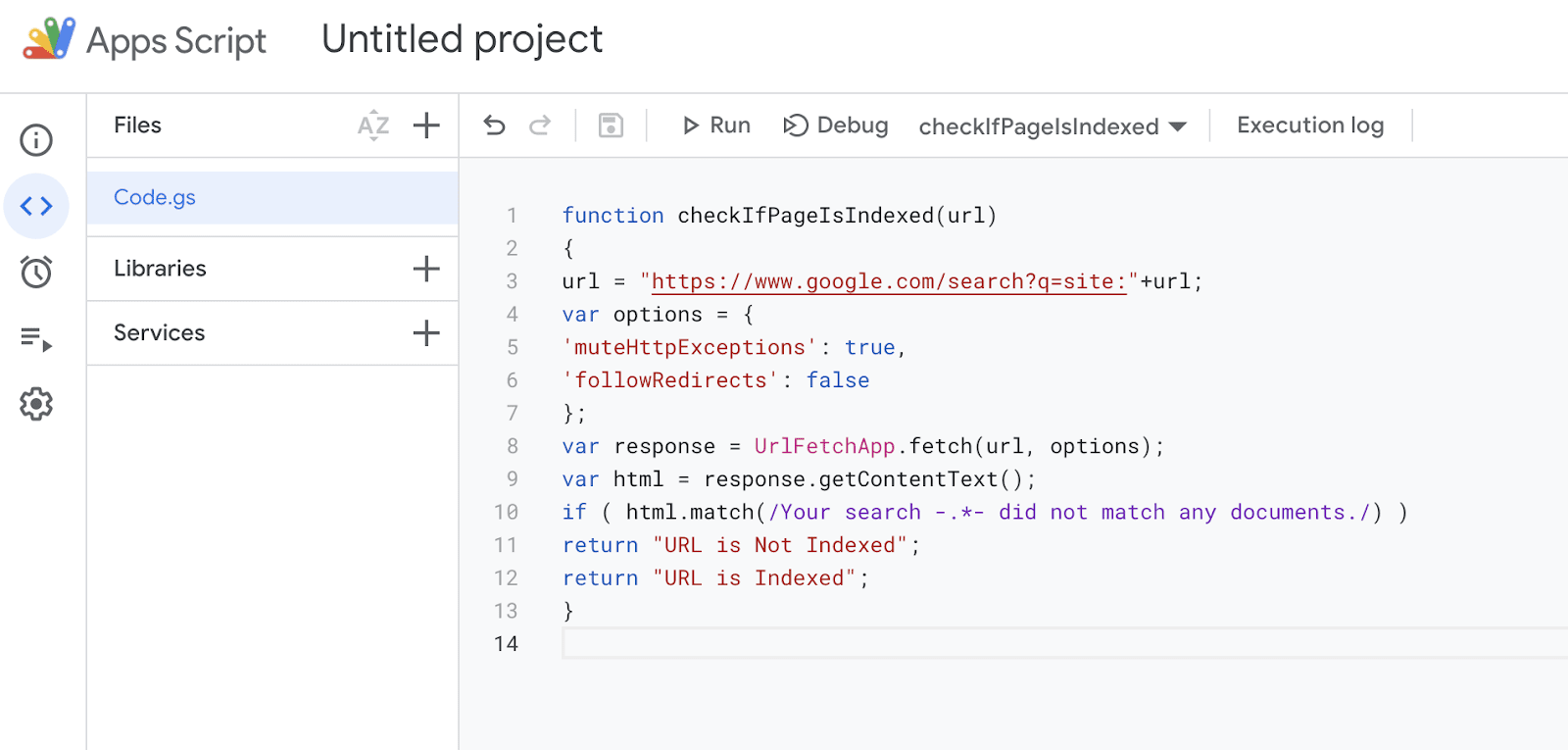

Step 2. Copy and paste this code into the Script editor:

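function checkIfPageIsIndexed(url) {
  // Search Google for "site:<url>"; encode it so "?" or "&" in the URL can't break the query.
  var searchUrl = "https://www.google.com/search?q=" + encodeURIComponent("site:" + url);
  var options = { 'muteHttpExceptions': true, 'followRedirects': false };
  var response = UrlFetchApp.fetch(searchUrl, options);
  // A redirect or error (e.g., if Google blocks automated queries) means the result is unknown.
  if (response.getResponseCode() !== 200) {
    return "Check failed (HTTP " + response.getResponseCode() + ")";
  }
  var html = response.getContentText();
  // Google shows this message when a search has no results.
  if (html.match(/Your search -.*- did not match any documents/)) return "URL is Not Indexed";
  return "URL is Indexed";
}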

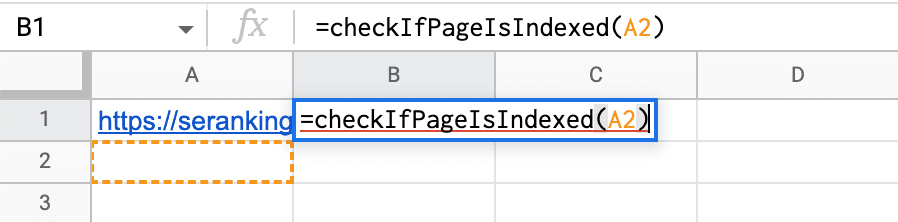

Step 3. Paste the necessary links into the table and run the function in Google Sheets.

To do so, copy the name of the function:

checkIfPageIsIndexed(URL)

Then insert =checkIfPageIsIndexed(url) next to the first link, where url is the cell in which you pasted the URL. In the screenshot, it's A2, so the formula is =checkIfPageIsIndexed(A2). Repeat this step for all the cells in the column (or drag the formula down).

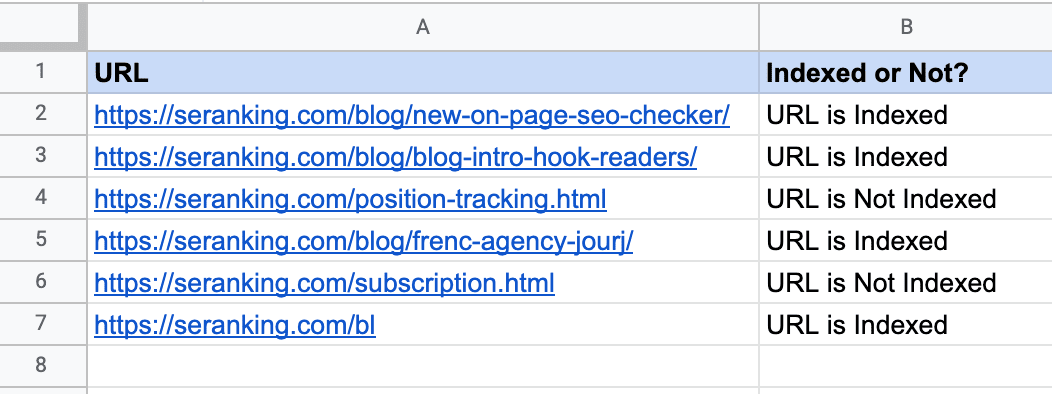

As a result, you will see which pages are indexed and which are not.

The advantage of this method is that you can check a huge list of pages fairly quickly.
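The decision the Apps Script function makes can be tried outside Google Sheets. This sketch isolates it in a hypothetical classifyIndexStatus(html) helper and feeds it sample HTML strings that are invented for illustration, not real Google responses. It also exposes the method's main weakness: any page without the "did not match any documents" message, such as a consent or CAPTCHA page, is reported as indexed.

```javascript
// Hypothetical helper mirroring the Apps Script check: it looks for Google's
// "no results" message in the HTML returned for a site: search.
const NO_RESULTS = /Your search -.*- did not match any documents/;

function classifyIndexStatus(html) {
  return NO_RESULTS.test(html) ? "URL is Not Indexed" : "URL is Indexed";
}

// Sample HTML snippets invented for illustration.
const emptyResults =
  "<p>Your search - <b>site:yourdomain.com/missing</b> - did not match any documents.</p>";
const someResults = "<div>About 3 results</div>";

console.log(classifyIndexStatus(emptyResults)); // URL is Not Indexed
console.log(classifyIndexStatus(someResults)); // URL is Indexed
```

Because the check only looks for a negative message, it is worth spot-checking a few "Indexed" results by hand.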

What are the specifics of indexing websites built with different technologies?

We've figured out how Google indexes websites, how to submit pages for indexing, and how to check whether they appear in the SERP. Now, let's talk about an equally important thing: how web development technology affects the indexing of website content.

The more you know about how websites built with different technologies get indexed, the higher your chances of having all your pages successfully indexed. So, let's get down to different technologies and their indexing.

Flash content

Flash started as a simple piece of animation software, but in the years that followed, it shaped the web as we know it today. Flash was used to make games and even entire websites, but today, Flash is effectively dead: Adobe ended support for Flash Player at the end of 2020.

Over the 20 years of its development, the technology has had a lot of shortcomings, including high CPU load, Flash Player errors, and indexing issues. Flash is cumbersome, consumes a huge amount of system resources, and has a devastating impact on mobile device battery life.

In 2019, Google stopped indexing Flash content, marking the end of an era.

Not surprisingly, search engines recommend not using Flash on websites. But if your site is built with this technology, create a text version of the site. It will be useful for users who haven't installed Flash or have an outdated version of it, as well as for mobile device users (such devices do not display Flash content).

JavaScript

Nowadays, it's increasingly common to see JavaScript websites with dynamic content: they load quickly and are user-friendly. Before JS started dominating web development, search engines crawled only text-based content (HTML). As JS became more and more popular, Google started crawling and indexing such content better.

In 2018, John Mueller said that it took a few days or even weeks for a page to get rendered. Therefore, JavaScript websites could not expect to have their pages indexed fast. Over the past years, Google has improved its ability to index JavaScript. In 2019, Google claimed it needed a median time of 5 seconds for JS-based pages to go from the crawler to the renderer.

A study conducted in late 2019 found that Google is indeed getting faster at indexing JavaScript-rendered content: 60% of JavaScript content is indexed within 24 hours of indexing the HTML. However, that still leaves 40% of content that can take longer.

To see what's hidden inside the JavaScript, which usually looks like a single link to the JS file, Googlebot needs to render it. Only after this step can Google see all the content in HTML tags and scan it quickly.

JavaScript rendering is very resource-demanding. There's a delay in how Google processes JavaScript on pages, and before rendering is complete, Google will struggle to see all the JS content that is loaded on the client side. With plenty of information missing, the search engine will crawl such a page but won't be able to understand whether the content is high-quality and matches the user intent.

Keep in mind that page sections injected with JavaScript may contain internal links. And if Google fails to render JavaScript, it can't follow those links. As a result, the search engine can't index such pages unless they are linked from other pages or listed in the sitemap.

To improve JS website indexing, read our guide.

There are a lot of JS-based technologies. Below, we'll dive into the most popular ones.

AJAX

AJAX allows pages to update asynchronously by exchanging a small amount of data with the server. One of the signature features of websites using AJAX is that content is loaded by one continuous script, without division into pages with their own URLs. As a result, the website has pages with a hash (#) in the URL.

Historically, such pages were not indexed by search engines. Instead of scanning the https://mywebsite.com/#example URL, the crawler went to https://mywebsite.com/ and ignored the part after #. As a result, crawlers simply couldn't scan all the website content.
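The reason is that the fragment (everything after #) stays in the browser and is not part of the HTTP request sent to the server. A quick sketch using the standard WHATWG URL class in Node.js shows which part of such an address a server, and therefore a crawler requesting it, actually receives; requestedFromServer is a hypothetical helper name.

```javascript
// Sketch: split an AJAX-style URL into what is requested from the server
// and what stays in the browser.
function requestedFromServer(address) {
  const url = new URL(address);
  return {
    // The fragment (url.hash) is never sent in the HTTP request.
    requestedUrl: url.origin + url.pathname + url.search,
    fragment: url.hash,
  };
}

const example = requestedFromServer("https://mywebsite.com/#example");
console.log(example.requestedUrl); // https://mywebsite.com/
console.log(example.fragment); // #example
```

The same split applies to #! addresses, which is why content behind them only becomes visible to a crawler that runs the page's JavaScript.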

Since 2018, websites with AJAX have been rendered, crawled, and indexed directly by Google, which means that bots scan and process the #! URLs, mimicking user behavior.

We crawl, render, index the URLs with #! now, we usually don't use the ?_escaped_fragment_= anymore, so it's really deprecated. Do you think we need to make that clearer? Our tech-writers have pushed to remove the docs, would that help?

John Mueller (@JohnMu), October 17, 2018

Therefore, webmasters no longer need to create an HTML version of each page. But it's important to check whether your robots.txt allows crawling of AJAX scripts. If they are disallowed, be sure to open them for search indexing. To do this, add the following rules to the robots.txt:

User-agent: Googlebot
Allow: /*.js
Allow: /*.css
Allow: /*.jpg
Allow: /*.gif
Allow: /*.png

SPA

A single-page application, or SPA, is a relatively new trend of incorporating JavaScript into websites. Unlike traditional websites that load HTML, CSS, and JS by requesting each from the server when it's needed, SPAs require just one initial page load and then update content in the browser instead of requesting new pages from the server, leaving all the processing to the browser. It may sound great, since such websites load faster. But this technology might have a negative impact on SEO.

While scanning, crawlers don't get enough page content; they don't understand that the content is being loaded dynamically. As a result, search engines see an empty page yet to be filled.

Moreover, with an SPA, you also lose the traditional logic behind the 404 error page and other non-200 server status codes. Because content is rendered by the browser, the server returns a 200 HTTP status code to every request, and search engines can't tell whether some pages are not valid for indexing.
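One common remedy (an assumption about your setup, not something described above) is to let the server check the requested path against the app's known routes and return a real 404 for anything else, while still serving the SPA shell. A minimal sketch of that routing decision; the route list, the shell markup, and the resolveRequest name are hypothetical.

```javascript
// Hypothetical route table and HTML shell of a single-page app.
const KNOWN_ROUTES = new Set(["/", "/pricing", "/blog"]);
const APP_SHELL =
  '<!doctype html><html><body><div id="app"></div><script src="/app.js"></script></body></html>';

// Decide the status code on the server, before the browser renders anything.
function resolveRequest(path) {
  // Treat "/blog/" and "/blog" as the same route.
  const normalized = path.length > 1 ? path.replace(/\/+$/, "") : path;
  if (KNOWN_ROUTES.has(normalized)) {
    return { status: 200, body: APP_SHELL };
  }
  // Unknown route: still ship the shell so the app can show its "not found" view,
  // but tell crawlers the truth with a 404.
  return { status: 404, body: APP_SHELL };
}

console.log(resolveRequest("/pricing").status); // 200
console.log(resolveRequest("/no-such-page").status); // 404
```

In a Node.js server, you could pass new URL(req.url, "http://localhost").pathname to this function and send its result with res.writeHead(status) and res.end(body).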

If you want to learn how to optimize single-page applications to improve their indexing, take a look at our comprehensive blog post about SPAs.

Frameworks

JavaScript frameworks are used to create dynamic website interactions. Websites built with React, Angular, Vue, and other JavaScript frameworks all use client-side rendering by default. Because of this, frameworks are potentially riddled with SEO challenges:

- Google crawlers can't actually see what's on the page. As I said before, search engines find it hard to index any content that requires a click to load.

- Speed is one of the biggest hurdles. Google crawls pages un-cached, so those cumbersome first loads can be problematic.

- Client-side code adds complexity to the finalized DOM, which means both search crawlers and client devices need more CPU resources. This is one of the most important reasons why a complex JS framework may not be the preferred choice.

How to restrict site indexing

There are some pages you don't want Google or other search engines to index. Not all pages should rank and be included in search results.

What content is most often restricted?

- Internal and service files: those that should be seen only by the site admin or webmaster, for example, a folder with user data specified during registration: /wp-login.php; /wp-register.php.

- Pages that are not suitable for display in search results or for a user's first acquaintance with the resource: thank-you pages, registration forms, etc.

- Pages with personal information: contact details that visitors leave when ordering and registering, as well as payment card numbers.

- Files of a certain type, such as PDF documents.

- Duplicate content: for example, a page you're running an A/B test for.

So, you can keep information that has no value to the user and does not affect the site's ranking, as well as confidential data, from being indexed.

This solves two problems:

- Reduce the likelihood of certain pages being crawled, indexed, and shown in search results.

- Save crawl budget: the limited number of URLs per site that a robot can crawl.

Let's see how you can restrict the indexing of website content.

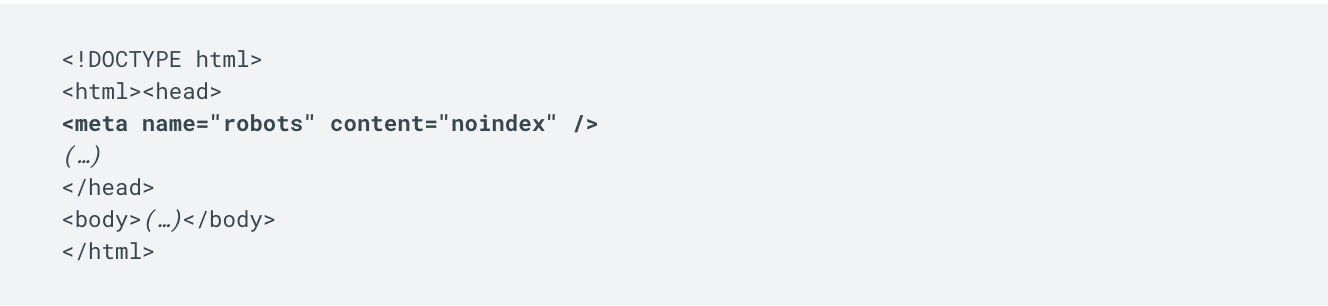

Robots meta tag

Meta robots is a tag that contains commands for search bots. These commands affect the indexing of the page and the display of its elements in search results. The tag is placed in the <head> section of the web document, where the robot reads it when it processes the page.

Meta robots is a more reliable way to manage indexing than robots.txt, which works only as a recommendation for the crawler. With meta robots, you can specify commands (directives) for the robot directly in the page code. The tag should be added to all pages that should not be indexed. Note that the crawler has to fetch the page to see the tag, so don't also block such pages in robots.txt.

How to add the robots meta tag to your website?

To prevent a page from being indexed with the robots meta tag, you need to change the page code. This can be done manually or through the admin panel.

To add the meta tag manually, log in to the hosting control panel and find the desired page in the site's root directory.

Just add the robots meta tag to the <head> section and save the file.

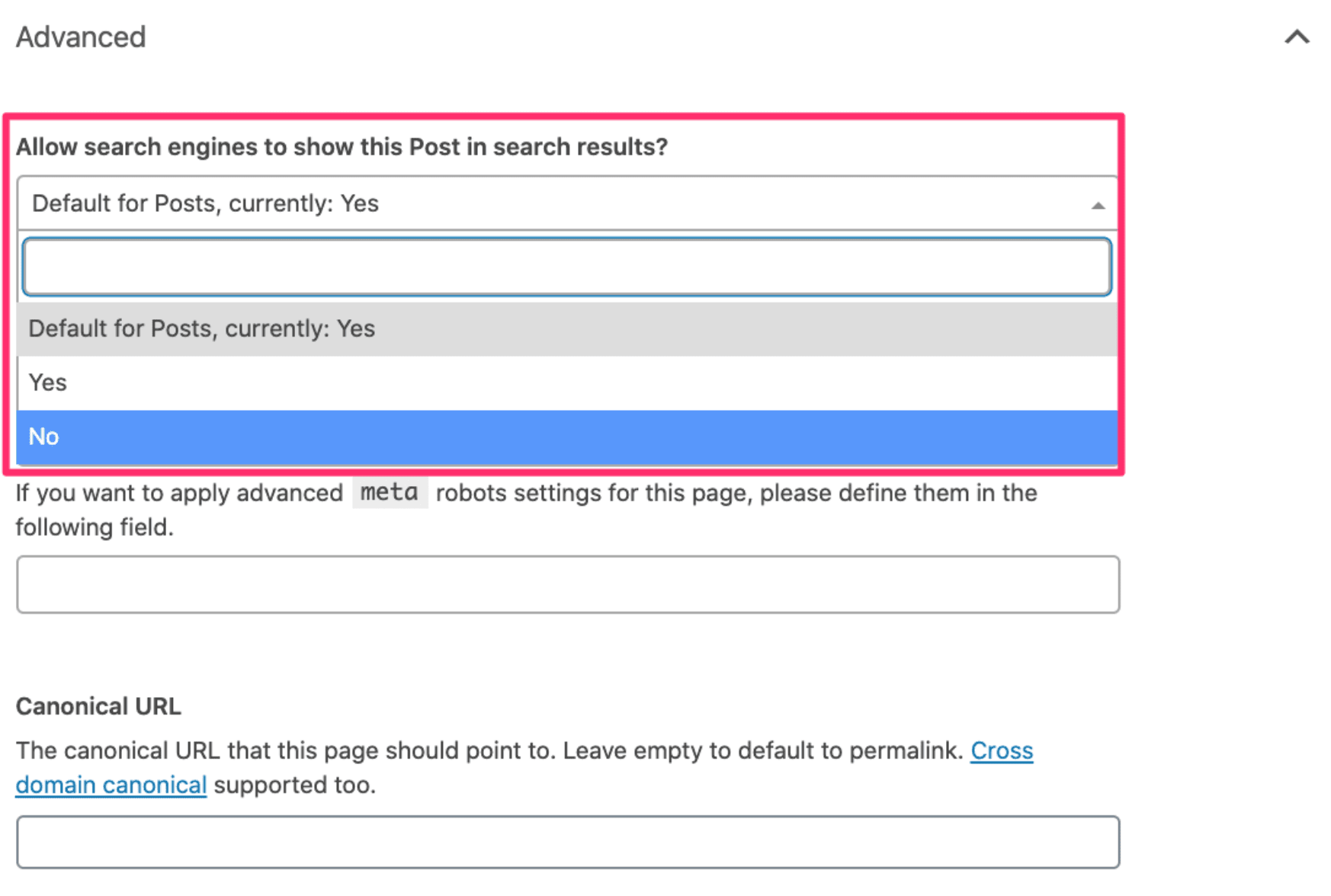

You can also add the meta tag through the site admin panel. If a special plugin is connected to the site's content management system (CMS), just go to the page settings and tick the necessary checkboxes to add the noindex and nofollow commands.

Now, let's take a closer look at the meta robots tag and how to use it manually.

Meta tags have two attributes: name and content.

- In name, you specify which search robot you are addressing.

- In content, you add a specific command.

<meta name="robots" content="noindex" />

If robots is specified as the name attribute, the command applies to all search robots.

If you need to give a command to a specific bot, specify its name instead of robots, for example:

<meta name="Googlebot-News" content="noindex" />

or

<meta name="googlebot" content="noindex" />

Now, let's look at the content attribute. It contains the command that the robot must execute. Noindex prohibits indexing of the page and its content. As a result, the robot won't index the page.
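To see how these rules combine, here is a simplified sketch that reads the robots meta tags from a page's HTML and decides whether noindex applies to a given crawler. It is an illustration under stated assumptions: the regular expression expects name before content with double quotes, exactly as in the examples above, and the isNoindexFor name is hypothetical. A production tool should use a real HTML parser.

```javascript
// Simplified sketch: does a noindex directive apply to this crawler?
// Assumes tags written like <meta name="..." content="..." /> (name first, double quotes).
function isNoindexFor(html, crawlerToken) {
  const metaTag = /<meta\s+name\s*=\s*"([^"]+)"\s+content\s*=\s*"([^"]+)"\s*\/?>/gi;
  // "robots" addresses every crawler; a bot name addresses only that bot.
  const wanted = ["robots", crawlerToken.toLowerCase()];
  for (const [, name, content] of html.matchAll(metaTag)) {
    if (!wanted.includes(name.toLowerCase())) continue;
    const directives = content.toLowerCase().split(",").map((d) => d.trim());
    // "none" is shorthand for "noindex, nofollow".
    if (directives.includes("noindex") || directives.includes("none")) return true;
  }
  return false;
}

const page = '<head><meta name="googlebot" content="noindex" /></head>';
console.log(isNoindexFor(page, "googlebot")); // true
console.log(isNoindexFor(page, "bingbot")); // false
```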

Server-side

You can also restrict indexing of website content on the server side. To do so, find the .htaccess file in the website root directory and add the following code to it:

SetEnvIfNoCase User-Agent "Googlebot" search_bot

This code is for Googlebot, but you can set a rule for any search engine or bot:

SetEnvIfNoCase User-Agent "^Yahoo" search_bot
SetEnvIfNoCase User-Agent "^Robot" search_bot
SetEnvIfNoCase User-Agent "^php" search_bot
SetEnvIfNoCase User-Agent "^Snapbot" search_bot
SetEnvIfNoCase User-Agent "^WordPress" search_bot

Keep in mind that ^ anchors a pattern to the start of the user-agent string, and many crawlers (including Googlebot) send strings that begin with "Mozilla/5.0", which is why the Googlebot rule is not anchored. These rules label unwanted user agents, which can be potentially dangerous or simply overload the server with unnecessary requests. The label alone doesn't block anything; on Apache 2.4, for example, you can refuse the labeled requests with:

<RequireAll>
    Require all granted
    Require not env search_bot
</RequireAll>
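The logic of these rules is easy to reproduce outside Apache: a case-insensitive regular expression is tested against the User-Agent header, and matching requests are refused. The sketch below mirrors the pattern list in JavaScript; the statusForUserAgent name and the choice of a 403 response are assumptions for illustration. The Googlebot pattern is left unanchored because Googlebot's usual user-agent string starts with "Mozilla/5.0".

```javascript
// Sketch of SetEnvIfNoCase-style matching: case-insensitive regexes on the User-Agent.
// The list mirrors the .htaccess examples; adjust it to the bots you actually want to block.
const BLOCKED_AGENTS = [/googlebot/i, /^yahoo/i, /^robot/i, /^php/i, /^snapbot/i, /^wordpress/i];

function statusForUserAgent(userAgent = "") {
  const isSearchBot = BLOCKED_AGENTS.some((pattern) => pattern.test(userAgent));
  return isSearchBot ? 403 : 200; // 403 Forbidden for labeled bots
}

const googlebotUA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
console.log(statusForUserAgent(googlebotUA)); // 403
console.log(statusForUserAgent("WordPress/6.4; https://example.com")); // 403
console.log(statusForUserAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/121.0")); // 200
```

Keep in mind that user-agent strings are easy to fake, so this kind of rule only stops bots that identify themselves honestly.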

Set a website access password

The second way to prohibit site indexing with the help of the .htaccess file is to set a password for accessing the site. Add this code to the .htaccess file:

AuthType Basic
AuthName "Password Protected Area"
AuthUserFile /home/user/www-auth/.htpasswd
Require valid-user

Here, /home/user/www-auth/.htpasswd is the file with the password that you have to set.

The password is set by the website owner, who identifies themselves with a username. Therefore, you need to add the user to the password file:

htpasswd -c /home/user/www-auth/.htpasswd USERNAME

The -c flag creates the file (and overwrites an existing one), so leave it out when adding more users. As a result, bots will not be able to crawl the website and index it.
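With AuthType Basic, the client must send an Authorization header containing the base64-encoded string username:password; a request without valid credentials gets 401 Unauthorized, which is why crawlers can't get past it. Here is a sketch of that check in JavaScript, using a hypothetical in-memory user list in place of the .htpasswd file (real .htpasswd files store password hashes, not plain text).

```javascript
// Sketch of an HTTP Basic authentication check.
// USERS is a hypothetical stand-in for .htpasswd; never store plain-text passwords.
const USERS = new Map([["USERNAME", "secret-password"]]);

function checkBasicAuth(authorizationHeader) {
  if (!authorizationHeader || !authorizationHeader.startsWith("Basic ")) {
    return 401; // no credentials: this is where crawlers end up
  }
  // Decode "username:password" from base64.
  const decoded = Buffer.from(authorizationHeader.slice(6), "base64").toString("utf8");
  const separator = decoded.indexOf(":");
  if (separator === -1) return 401;
  const user = decoded.slice(0, separator);
  const password = decoded.slice(separator + 1);
  return USERS.get(user) === password ? 200 : 401;
}

const header = "Basic " + Buffer.from("USERNAME:secret-password").toString("base64");
console.log(checkBasicAuth(header)); // 200
console.log(checkBasicAuth(undefined)); // 401
```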

Common indexing errors

Sometimes, Google cannot index a page not only because you have restricted indexing, but also because of technical issues on the website.

Here are the five most common issues that prevent Google from indexing your pages.

Duplicate content

Having the same content on different pages of your website can hurt your optimization efforts because the content isn't unique. Since Google doesn't know which URL to rank higher in the SERP, it might rank both URLs lower and give preference to other pages. Plus, suppose Google decides that your content is deliberately duplicated across domains in an attempt to manipulate search engine rankings. In that case, the website may not only lose positions but can also be dropped from Google's index. So, you'll have to get rid of duplicate content on your site.

Let's look at some steps you can take to avoid duplicate content issues.

- Set up redirects. Use 301 redirects in your .htaccess file to guide users and crawlers to the appropriate pages whenever a website or URL changes.

- Work on the website structure. Make sure that content does not overlap (a common issue with blogs and forums). For example, a blog post may appear both on the main page of a website and on an archive page.

- Minimize similar content. Your site likely has similar content on different pages that you need to get rid of. For example, the website may have two separate pages with almost identical text. You need to either merge the two pages into one or create unique content for both.

- Use the canonical tag. If you want to keep duplicate content on your website, Google recommends using the rel="canonical" link element. The canonical tag points search engines to the main version of the page.
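To check which version of a page you are pointing search engines to, you can read the rel="canonical" link element from the page's HTML. Here is a minimal sketch using Python's built-in html.parser; the class name CanonicalFinder, the helper find_canonical, and the sample markup are illustrative. A production crawler would also need to resolve relative URLs and consider canonical hints sent in HTTP headers.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values; compare case-insensitively.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonical = attributes["href"]


def find_canonical(html: str) -> Optional[str]:
    """Return the canonical URL declared in the page, or None."""
    parser = CanonicalFinder()
    parser.feed(html)
    parser.close()
    return parser.canonical


if __name__ == "__main__":
    page = '<head><link rel="canonical" href="https://example.com/shoes/"></head>'
    print(find_canonical(page))  # https://example.com/shoes/
```

Run over every duplicate URL of the same piece of content, it should return the same main URL each time; a missing or differing value points to pages whose canonical tag needs fixing.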

HTTP status code issues

Another problem that might prevent website pages from being crawled and indexed is an HTTP status issue. Website pages, files, and links are supposed to return the 200 status code. If they return other HTTP status codes, your website can experience indexing and ranking issues. Let's look at the main types of response codes that can hurt your website's indexing:

- A 3XX response code indicates a redirect to another page. Each redirect puts an additional load on the server, so it's better to remove unnecessary internal redirects. Redirects themselves do not harm indexing, but you should make sure they are set up properly. Ideally, 3XX pages should not exceed 5% of the total number of pages on the website. If they exceed 10%, that's bad news: review all redirects and remove some of them.

- A 4XX response code indicates that the requested page cannot be accessed. When users click on a non-existent URL, they see that the page is missing. This harms website indexing, which can lead to ranking drops. Plus, internal links to 4XX pages drain your crawl budget. To get rid of unnecessary 4XX URLs, review the list of such broken links and replace them with links to accessible pages, or simply remove some of them. You can also set up 301 redirects to avoid 4XX errors. Keep in mind: 404 pages can exist on your website, but if a user ends up seeing one, make sure it is well thought out. This way, you minimize reputational and usability damage.

- A 5XX response code means the problem was caused by the server. Pages that return such codes are inaccessible both to website visitors and to search engines, so the crawler cannot crawl and index them. To fix this issue, try reproducing the server error for these URLs in the browser and check the server's error logs. You can also consult your hosting provider or web developer, since your server may be misconfigured.
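The 5% and 10% redirect guidelines above are easy to check once you have a crawl export of URLs and their status codes. This Python sketch groups codes by class, computes the share of 3XX URLs, and lists the 4XX and 5XX URLs to fix. The function name audit_statuses, the verdict labels, and the sample data are hypothetical; the thresholds are the ones quoted in this section.

```python
from collections import Counter
from typing import Dict, List, Tuple


def audit_statuses(statuses: Dict[str, int]) -> Tuple[float, str, List[str]]:
    """Summarize a crawl export mapping URL -> HTTP status code.

    Returns the share of 3XX URLs, a verdict based on the 5% / 10%
    guideline, and the sorted list of URLs answering 4XX or 5XX.
    """
    classes = Counter(code // 100 for code in statuses.values())
    total = len(statuses)
    redirect_share = classes[3] / total if total else 0.0

    if redirect_share <= 0.05:
        verdict = "ok"
    elif redirect_share <= 0.10:
        verdict = "above ideal"
    else:
        verdict = "too many redirects"

    broken = sorted(url for url, code in statuses.items() if code >= 400)
    return redirect_share, verdict, broken


if __name__ == "__main__":
    crawl = {f"/page-{i}": 200 for i in range(8)}
    crawl.update({"/old-url": 301, "/deleted": 404})
    print(audit_statuses(crawl))  # (0.1, 'above ideal', ['/deleted'])
```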

Internal linking issues

Internal links help crawlers scan websites, discover new pages, and index them faster. What problem can you face here? If a website page doesn't have any internal links pointing to it, search engines are unlikely to find and index it. You can point search engines to such pages through the XML sitemap or external links, but internal linking should not be ignored.

Make sure that your website's most important pages have at least a couple of internal links pointing to them.

Keep in mind: all internal links should pass link juice, meaning they should not be tagged with the rel="nofollow" attribute. After all, using internal links wisely can even boost your rankings.
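Pages with no or too few internal links pointing to them can be found from a simple link graph. The sketch below assumes you already have, for every known page (for example, every URL in your sitemap), the list of internal links on it plus a flag saying whether each link is nofollow. That input format, the function name weakly_linked_pages, and the default of two links (the "couple of internal links" mentioned above) are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical input: page -> list of (target, is_nofollow) internal links.
# Every known page must appear as a key, so pages nobody links to show up.
LinkGraph = Dict[str, List[Tuple[str, bool]]]


def weakly_linked_pages(graph: LinkGraph, min_links: int = 2) -> Dict[str, int]:
    """Return pages with fewer than min_links followed inbound internal links."""
    inbound = defaultdict(set)
    for source, links in graph.items():
        for target, nofollow in links:
            # Ignore self-links, and nofollow links, which this section
            # advises against for internal linking.
            if not nofollow and target != source:
                inbound[target].add(source)  # count distinct linking pages
    return {
        page: len(inbound[page])
        for page in graph
        if len(inbound[page]) < min_links
    }


if __name__ == "__main__":
    site = {
        "/": [("/blog/", False), ("/pricing/", False)],
        "/blog/": [("/", False), ("/pricing/", True)],
        "/pricing/": [("/", False)],
        "/old-landing/": [("/", False)],
    }
    print(weakly_linked_pages(site))
    # {'/blog/': 1, '/pricing/': 1, '/old-landing/': 0}
```

A count of 0 marks an orphan page that crawlers can reach only through the sitemap or external links.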

Blocked JavaScript, CSS, and Image Files

For optimal rendering and indexing, crawlers should be able to access your JavaScript, CSS, and image files. If you disallow crawling of these files, you directly harm the indexing of your content. Let's look at three steps that will help you avoid this issue.

- To make sure the crawler can access your CSS, JavaScript, and image files, use the URL Inspection tool in GSC. It provides information about Google's indexed version of a specific page and shows how Googlebot sees your website content, so it can help you fix indexing issues.

- Check and test your robots.txt to make sure all the directives are properly set up. You can do this with Google's robots.txt tester.

- To see whether Google considers your website's pages mobile-friendly, use the Mobile-Friendly Test.
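You can also run a quick local check of whether your robots.txt blocks resource files. This sketch uses Python's standard urllib.robotparser module; the robots.txt rules, the example.com resource URLs, and the helper name blocked_resources are made up for illustration. This parser follows the original robots.txt conventions and does not reproduce every detail of Google's own matching (for example, * and $ wildcards inside paths), so treat its answer as a first check rather than a verdict.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser

# Example robots.txt that (mistakenly) hides static assets from all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/
Disallow: /admin/
"""

# Hypothetical resource URLs a page needs for rendering.
RESOURCES = [
    "https://example.com/assets/app.js",
    "https://example.com/assets/style.css",
    "https://example.com/images/hero.jpg",
]


def blocked_resources(robots_txt: str, urls: Iterable[str],
                      user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that robots.txt forbids user_agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_resources(ROBOTS_TXT, RESOURCES):
        print("blocked:", url)
```

Here the JavaScript and CSS files are reported as blocked while the image is allowed, which is exactly the kind of rule that keeps Googlebot from rendering a page the way visitors see it.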

Slow-loading pages

It's important to make sure your website loads quickly. Google doesn't like slow-loading sites, and as a result they take longer to get indexed. The causes can vary: for example, outdated servers with limited resources, or pages too heavy for the user's browser to process.

The best practice is to get your website to load in less than 2 to 3 seconds. Keep in mind that Core Web Vitals metrics, which measure the speed, responsiveness, and visual stability of websites, are Google ranking factors.
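Google publishes thresholds for rating each Core Web Vitals metric, assessed at the 75th percentile of page loads: Largest Contentful Paint (loading) is good at 2.5 seconds or less and poor above 4 seconds; Interaction to Next Paint (responsiveness) is good at 200 ms or less and poor above 500 ms; Cumulative Layout Shift (visual stability) is good at 0.1 or less and poor above 0.25. The Python sketch below rates measured values against those thresholds. The metric keys and function names are illustrative, and the measurements themselves would come from a tool such as PageSpeed Insights.

```python
from typing import Dict, Tuple

# (good_max, poor_above) per metric: values <= good_max are "good",
# values > poor_above are "poor", anything in between needs improvement.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "LCP_s": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP_ms": (200, 500),  # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),    # Cumulative Layout Shift, unitless
}


def rate(metric: str, value: float) -> str:
    """Rate a single 75th-percentile measurement for one metric."""
    good_max, poor_above = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= poor_above:
        return "needs improvement"
    return "poor"


def rate_page(measurements: Dict[str, float]) -> Dict[str, str]:
    """Rate every metric measured for a page."""
    return {metric: rate(metric, value) for metric, value in measurements.items()}


if __name__ == "__main__":
    print(rate_page({"LCP_s": 2.1, "INP_ms": 350, "CLS": 0.3}))
    # {'LCP_s': 'good', 'INP_ms': 'needs improvement', 'CLS': 'poor'}
```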

To learn more about how to improve your site's speed, read our blog post.

You can monitor all these issues with dedicated SEO tools, for example, SE Ranking's Website Audit. To find errors on the website, go to the Issue report, which provides a complete list of errors and recommendations for fixing them.

The report includes insights on issues related to:

- Website Security

- JavaScript, CSS

- Crawling

- Duplicate Content

- HTTP Status Code

- Title & Description

- Usability

- Website Speed

- Redirects

- Internal & External Links, etc.

By fixing all these issues, you can improve your website's indexing and its rankings in search results.

Conclusion

Getting your site crawled and indexed by Google is essential, but it does take a while for your web pages to appear in the SERP. With a thorough understanding of the subtleties of search engine indexing, you will avoid missteps that could hurt your website.

If you set up and optimize your sitemap correctly, take technical search engine requirements into account, and make sure you have high-quality, useful content, Google won't leave your website unattended.

In this blog post, we have discussed all the important aspects of indexing:

- Notifying the search engine of a new website or page by creating a sitemap, using special URL submission tools, and getting external links.

- Using a search engine operator, special tools, and scripts to check website indexing.

- The specifics of indexing websites that use Ajax, JavaScript, SPA, and frameworks.

- Restricting site indexing with robots.txt, the robots meta tag, and an access password.

- Common indexing errors: internal linking issues, duplicate content, slow-loading pages, etc.

Keep in mind that a high indexing rate doesn't equal high Google rankings, but it is the foundation for further website optimization. So, before doing anything else, check your pages' indexing status to verify that they can be indexed.

Daria is a content marketer at SE Ranking. Her interests span SEO and digital marketing. She likes to describe complex things in plain words. In her free time, Daria enjoys traveling around the world, studying the art of photography, and visiting art galleries.
