
Editor’s Note: This post was originally published in November of 2013. While a lot of the original content still stands, algorithms and strategies are ever changing. Our team has updated this post for 2021, and we hope that it will continue to be a helpful resource.

For eCommerce SEO professionals, issues surrounding duplicate, thin, and low-quality content can spell disaster in the search engine rankings.

Google, Bing, and other search engines are smarter than they used to be. Today, they only reward websites with quality, unique content. If your website content is anything but, you’ll be fighting an uphill battle until you can solve these duplicate content issues.

We know how overwhelming this task can be, which is why we’ve created this comprehensive guide to identifying and resolving common eCommerce duplicate content challenges. Take a read below to get started, or reach out to our trained eCommerce SEO experts for an extra helping hand.


What is Duplicate Content?

Thin, scraped, and duplicate content has been a priority for Google for more than a decade, ever since the first Panda algorithm update in 2011. According to its Content Guidelines, Google defines duplicate content as “substantive blocks of content within or across domains that either completely match other content or are appreciably similar.”

In short, duplicate content is the exact same website copy found on multiple pages anywhere on the internet. If you have content on your site that can be found word-for-word somewhere else on your site, then you’re dealing with a duplicate content issue.

In our time auditing and improving dozens of eCommerce websites, we’ve found that duplicate content issues typically arise from a large number of web pages with mostly identical content, or from product pages with such short product descriptions that the content can be deemed thin and, thus, not valuable to Google or the reader (more details on thin content later on).

Why You Should Care:

The Google duplicate content penalty is a myth, but that doesn’t mean this issue isn’t important.

While it’s not a direct ranking penalty, duplicate content can hurt your overall organic traffic if Google doesn’t know which page to rank in the SERPs.

Types of Duplicate Content in eCommerce

Not all duplicate content is editorially created. Several technical situations can lead to duplicate content issues that hold back your rankings.

We’ll dive into a few of these situations below:

Internal Technical Duplicate Content

Duplicate content can occur internally on an eCommerce site in a number of ways, due to both technical and editorial causes.

The following instances are typically caused by technical issues with a content management system (CMS) and other code-related aspects of eCommerce websites.

- Non-Canonical URLs

- Session IDs

- Shopping Cart Pages

- Internal Search Results

- Duplicate URL Paths

- Product Review Pages

- WWW vs. Non-WWW URLs & Uppercase vs. Lowercase URLs

- Trailing Slashes on URLs

- HTTPS URLs: Relative vs. Absolute Path

1. Non-Canonical URLs

Canonical URLs play a few key roles in your SEO strategy:

- Tell search engines to only index a single version of a URL, no matter what other URL versions are rendered in the browser, linked to from external websites, etc.

- Assist with tracking URLs, where tracking code (affiliate tracking, social media source tracking, etc.) is appended to the end of a URL on the site (?a_aid=, ?utm_source, etc.).

- Fine-tune indexation of category page URLs in instances where sorting, functional, and filtering parameters are added to the end of the base category URL to produce a different ordering of products on a category page (?dir=asc, ?price=10, etc.).

To prevent search engines from indexing all of these various URLs, ensure that your canonical URL (in the <head> of the source code) is the same as the base category URL.

| URL/Page Type | Visible URL | Canonical URL |
| --- | --- | --- |
| Base Category URL | https://www.domain.com/page-slug | https://www.domain.com/page-slug |
| Social Tracking URL | https://www.domain.com/page-slug?utm_source=twitter | https://www.domain.com/page-slug |
| Affiliate Tracking URL | https://www.domain.com/page-slug?a_aid=123456 | https://www.domain.com/page-slug |
| Sorted Category URL | https://www.domain.com/page-slug?dir=asc&order=price | https://www.domain.com/page-slug |
| Filtered Category URL | https://www.domain.com/page-slug?price=-10 | https://www.domain.com/page-slug |
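As a rough illustration of the table, the following Python sketch strips tracking, sorting, and filtering parameters from a visible URL and renders the resulting canonical tag. The parameter set is an assumption drawn from the table’s examples; your platform may append others, and parameters that select genuinely different content should stay in the canonical URL.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change page content (taken from the
# table's examples); extend it with whatever your CMS and marketing tools append.
NON_CANONICAL_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "a_aid", "dir", "order", "price"}


def canonical_url(visible_url: str) -> str:
    """Drop non-canonical query parameters and any fragment from a URL."""
    parts = urlsplit(visible_url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NON_CANONICAL_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(visible_url: str) -> str:
    """Render the <link rel="canonical"> element for the page's <head>."""
    href = escape(canonical_url(visible_url), quote=True)
    return f'<link rel="canonical" href="{href}">'
```

For example, `canonical_link_tag("https://www.domain.com/page-slug?dir=asc&order=price")` returns `<link rel="canonical" href="https://www.domain.com/page-slug">`, matching the Sorted Category URL row.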

You should also consider disallowing the crawling of commonly used URL parameters, such as “?dir=” and “&order=”, to maximize your crawl budget.
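Google and Bing support `*` wildcards in robots.txt paths, while Python’s standard-library `urllib.robotparser` only does prefix matching, so this sketch generates parameter rules and includes a simplified wildcard matcher for sanity-checking them. It ignores `Allow` rules and rule precedence, so treat it as a quick check under that assumption rather than a full robots.txt implementation.

```python
import re


def disallow_rules(params):
    """Emit one rule per position a parameter can occupy in the query string."""
    rules = []
    for param in params:
        rules.append(f"Disallow: /*?{param}=")  # parameter comes first
        rules.append(f"Disallow: /*&{param}=")  # parameter comes later
    return rules


def build_robots_txt(params):
    return "\n".join(["User-agent: *", *disallow_rules(params)]) + "\n"


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern ('*' wildcard, optional trailing '$') to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_disallowed(path_and_query, rules):
    """True if any Disallow rule matches (simplified: no Allow rules or precedence)."""
    for rule in rules:
        directive, _, pattern = rule.partition(":")
        pattern = pattern.strip()
        # An empty "Disallow:" blocks nothing, so skip it.
        if directive.strip().lower() == "disallow" and pattern:
            if pattern_to_regex(pattern).match(path_and_query):
                return True
    return False
```

Checking `is_disallowed("/page-slug?dir=asc&order=price", disallow_rules(["dir", "order"]))` returns `True`, while the unparameterized `/page-slug` stays crawlable.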

2. Session IDs

Many eCommerce websites use session IDs in URLs (?sid=) to track user behavior. Unfortunately, this creates a duplicate of the core URL of any page the session ID is applied to.

The best fix: Use cookies to track user sessions, instead of appending session ID code to URLs.
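For the cookie approach, a minimal sketch using Python’s standard `http.cookies` module looks like this. The cookie name `sid` mirrors the URL parameter above, and the attribute choices (30-minute lifetime, `HttpOnly`, `Secure`, `SameSite=Lax`) are assumptions; most web frameworks ship their own session helpers that do the same job.

```python
import secrets
from http.cookies import SimpleCookie


def new_session_id() -> str:
    """Generate a random, URL-safe session identifier."""
    return secrets.token_urlsafe(16)


def session_cookie_header(session_id: str, max_age: int = 1800) -> str:
    """Build a Set-Cookie header so the session never has to appear in the URL."""
    cookie = SimpleCookie()
    cookie["sid"] = session_id
    morsel = cookie["sid"]
    morsel["path"] = "/"
    morsel["max-age"] = max_age
    morsel["httponly"] = True
    morsel["secure"] = True
    morsel["samesite"] = "Lax"  # the samesite attribute requires Python 3.8+
    return cookie.output(header="Set-Cookie:")
```

Because the session travels in the cookie, every visitor and every crawler sees the same URL, such as https://www.domain.com/page-slug, with no ?sid= variant to index.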

If session IDs must be appended to URLs, there are a few other options:

- Canonicalize the session ID URLs to the page’s core URL.

- Set URLs with session IDs to noindex. This will limit your page-level link equity potential in the event that someone links to a URL that includes the session ID.

- Disallow crawling of session ID URLs via the “/robots.txt” file, as long as the CMS does not produce session IDs for search bots (which could cause major crawlability issues).

3. Shopping Cart Pages

When users add products to their cart and then view it on your eCommerce website, most CMS systems automatically generate URL structures that are specific to that purchase, using unique identifiers like “cart,” “basket,” or other words.

These are not the types of pages that should be indexed by search engines. To fix this duplicate content issue, set these pages to “noindex,nofollow” via a meta robots tag or X-Robots-Tag. Don’t forget to also disallow crawling of them via the “/robots.txt” file.
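One way a developer might apply the header version of this rule is sketched below in Python. The path segments (`cart`, `basket`, `checkout`) are assumptions based on the examples above, so match them to your CMS’s actual URLs. One caveat worth checking against Google’s robots documentation: crawlers only see a noindex directive on pages that robots.txt lets them fetch, so cart URLs that are already indexed may need to stay crawlable until they drop out.

```python
from typing import List, Tuple

# Hypothetical first path segments that identify cart-type pages.
CART_SEGMENTS = {"cart", "basket", "checkout"}


def robots_headers(path: str) -> List[Tuple[str, str]]:
    """Return extra response headers that keep cart pages out of the index."""
    first_segment = path.strip("/").split("/", 1)[0].lower()
    if first_segment in CART_SEGMENTS:
        return [("X-Robots-Tag", "noindex, nofollow")]
    return []
```

Matching whole path segments, rather than a plain string prefix, avoids accidentally noindexing an unrelated URL such as /cartography/.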

4. Internal Search Results

Internal search result pages are produced when someone conducts a search using an eCommerce website’s internal search feature. These pages have no unique content, only repurposed snippets of content from other pages on your site.

For several reasons, Google doesn’t want to send users from the SERPs to your internal search results. As mentioned above, the algorithm prioritizes actual content pages (like product pages, category pages, blog pages, etc.).

Unfortunately, this is a common eCommerce duplicate content issue. Many CMS systems do not set internal search result pages to “noindex, follow” by default, so your developer will need to apply this rule to fix the problem. We also recommend disallowing search bots from crawling internal search result pages within the /robots.txt file.
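To double-check that a robots.txt disallow rule really covers internal search URLs, Python’s standard `urllib.robotparser` can evaluate it. The `/search` and `/catalogsearch/` paths are hypothetical examples; note that this parser only does simple prefix matching and does not understand the `*` wildcards Google supports.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for internal search result pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /catalogsearch/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawl_allowed(url: str, user_agent: str = "Googlebot") -> bool:
    """Report whether the rules above let a crawler fetch the URL."""
    return parser.can_fetch(user_agent, url)
```

Here `crawl_allowed("https://www.domain.com/search?q=flashlight")` is `False`, while a category URL such as https://www.domain.com/tools/flashlights/ remains crawlable.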

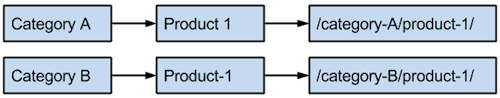

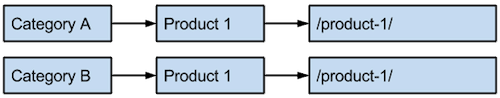

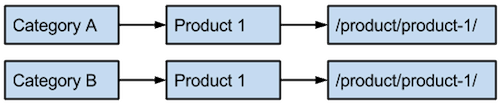

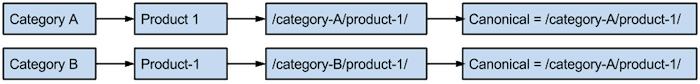

5. Duplicate URL Paths

When products are placed in multiple categories on your site, things can get tricky. For example, if a product is placed in both category A and category B, and category directories are used within the URL structure of product pages, then a CMS could potentially generate two different URLs for the same product.

This situation can also arise with sub-category URLs in which the products displayed might be similar or exactly the same. For example, a “Flashlights” sub-category might be placed under both “/tools/flashlights/” and “/emergency/flashlights/” on an “Emergency Preparedness” website, even though both contain mostly the same products.

As one can imagine, this can lead to devastating duplicate content problems for product pages, which are typically the highest-converting pages on an eCommerce website.

You can fix this issue by:

- Using root-level product page URLs. Note: This does remove the benefits of a keyword-rich, category-level URL structure and also limits trackability in Analytics software.

- Using /product/ URL directories for all products, which allows for grouped trackability of all products.

- Using product URLs built upon category URL structures. Make sure that each product page URL has a single, designated canonical URL.

- Adding robust intro descriptions atop category pages, so that each page has unique content.
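The options above boil down to choosing one designated URL per product, no matter how many categories it appears in. The Python sketch below generates the category-based variants a CMS might render and then picks a single canonical under each strategy; the `strategy` names, the placeholder domain, and the rule of treating the first listed category as primary are all assumptions for illustration.

```python
from typing import List

BASE = "https://www.domain.com"  # placeholder domain from the article's examples


def category_url_variants(slug: str, categories: List[str]) -> List[str]:
    """Every product URL a category-based URL structure could render."""
    return [f"{BASE}/{category.strip('/')}/{slug}/" for category in categories]


def designated_canonical(slug: str, categories: List[str], strategy: str = "product_dir") -> str:
    """Return the one URL every variant should canonicalize to."""
    if strategy == "root":              # root-level product URLs
        return f"{BASE}/{slug}/"
    if strategy == "product_dir":       # a shared /product/ directory
        return f"{BASE}/product/{slug}/"
    if strategy == "primary_category":  # category-based; first category is primary
        return f"{BASE}/{categories[0].strip('/')}/{slug}/"
    raise ValueError(f"unknown strategy: {strategy}")
```

With the flashlight example, both /tools/flashlights/ and /emergency/flashlights/ variants would carry a canonical tag pointing at whichever single URL the chosen strategy returns.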

6. Product Review Pages

Many CMS systems come with built-in review functionality. Oftentimes, separate “review pages” are created to host all reviews for particular products, yet some (if not all) of the reviews are also placed on the product pages themselves.

To avoid this duplicate content, these “review pages” should either be canonicalized to the main product page or set to “noindex,follow” via a meta robots tag or X-Robots-Tag. We recommend the first method, just in case a link to a “review page” appears on an external website. That will ensure link equity is passed to the appropriate product page.

If you use a third-party product review vendor, you should also check that review content is not duplicated on external sites.

7. WWW vs. Non-WWW URLs & Uppercase vs. Lowercase URLs

Search engines consider https://www.domain.com and https://domain.com to be different web addresses. Therefore, it’s critical that one version of URLs is chosen for every page on your eCommerce website.

We recommend 301-redirecting the non-preferred version to the preferred version to avoid these technically created duplicate URLs.

Uppercase and lowercase URLs need to be handled in the same manner. If both render separately, then search engines can consider them different pages. Choose one format and 301-redirect the other version to it. Find out how to redirect uppercase URLs to lowercase here.
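A redirect rule for both cases needs to compute the preferred version of an incoming URL and issue a 301 only when it differs. This Python sketch assumes the www host is preferred and lowercases only the path, leaving query strings untouched because parameter values can be case-sensitive; it also drops any explicit port for brevity.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

BARE_HOST = "domain.com"           # assumption: placeholder domain
PREFERRED_HOST = "www.domain.com"  # assumption: the www version is preferred


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if the URL is already preferred."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # .hostname is lowercased and excludes any port
    if host == BARE_HOST:
        host = PREFERRED_HOST
    target = urlunsplit((parts.scheme, host, parts.path.lower(), parts.query, parts.fragment))
    return target if target != url else None
```

Returning `None` for URLs that are already in the preferred form keeps the rule from creating redirect loops.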

8. Trailing Slashes on URLs

Similar to www and non-www URLs, search engines consider URLs that render with and without a trailing slash to be unique. For example, duplicate URLs are created when “/page” and “/page/” render the same content, and the same happens when “/page/” and “/page/index.html” (or “/page” and “/page.html”) do. Technically speaking, these pages aren’t even in the same directory.

Fix this problem by canonicalizing both to a single version or 301-redirecting one version to the other.
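Whichever version you choose, the rule is easiest to enforce with one normalization function shared by canonical tags and redirects. This Python sketch assumes the trailing-slash version is preferred and that `index.html`, `index.htm`, and `index.php` are the default documents; paths with other file extensions (such as `/page.html`) are left alone, since whether they duplicate a directory URL depends on your server setup.

```python
import posixpath
from urllib.parse import urlsplit, urlunsplit

INDEX_FILES = {"index.html", "index.htm", "index.php"}  # assumed default documents


def normalize_path_url(url: str) -> str:
    """Map /page, /page/ and /page/index.html onto the single form /page/."""
    parts = urlsplit(url)
    path = parts.path or "/"
    directory, filename = posixpath.split(path)
    if filename in INDEX_FILES:
        path = directory.rstrip("/") + "/"  # /page/index.html -> /page/
    elif filename and "." not in filename:
        path += "/"                         # /page -> /page/
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))
```

If a request URL differs from `normalize_path_url(url)`, the server can 301 to the normalized form, and the same value can populate the canonical tag.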

9. HTTPS URLs: Relative vs. Absolute Path

HTTPS (secure) URLs are typically created after a user has logged into an eCommerce website. Most of the time, search engines have no way of finding these URLs. However, they can find them when a logged-in administrator updates content and navigational links.

It’s common for an administrator not to realize that the embedded URLs use HTTPS instead of HTTP. When relative path URLs (which exclude the “https://www.example.com” portion) are also used on the site, either in content or in navigational links, it becomes all too easy for search engines to quickly crawl hundreds, if not thousands, of HTTPS URLs, which are technically duplicates of the HTTP versions.

The most common solutions are to use absolute path URLs (which include the full protocol and domain, such as “http://www.example.com”) and to ensure that canonical URLs always use the HTTP version (“http://www.example.com”). Using 301 redirects in these cases could easily break the user-login functionality, since the HTTPS URLs would no longer render.
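The mechanism behind this is ordinary URL resolution: a relative link inherits the scheme and host of the page it appears on. Python’s `urllib.parse.urljoin` shows the effect, and the helper below (a sketch, with `SITE_ROOT` set to the HTTP version recommended above) rewrites relative hrefs into absolute ones.

```python
from urllib.parse import urljoin, urlsplit

SITE_ROOT = "http://www.example.com/"  # preferred scheme and host for canonical links


def absolutize(href: str, site_root: str = SITE_ROOT) -> str:
    """Turn a relative href into an absolute URL on the preferred scheme and host."""
    if urlsplit(href).scheme:  # already absolute (http:, https:, mailto:, ...)
        return href
    return urljoin(site_root, href)


# A root-relative link on a logged-in HTTPS page resolves to an HTTPS URL:
#   urljoin("https://www.example.com/account/dashboard", "/flashlights/")
#   -> "https://www.example.com/flashlights/"
```

Running content through a helper like this before saving it means a link written as "/flashlights/" on a secure admin screen is stored as http://www.example.com/flashlights/.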

Internal Editorial Duplicate Content

Not all duplicate content issues on a website are technical. Often, duplicate content occurs because the on-page content for different pages is either similar or duplicated.

The easiest fix for these issues? Write unique eCommerce copy for each individual page.

We’ll dive deeper into these situations below.

1. Similar Product Descriptions

It’s easy to take shortcuts with product descriptions, especially for similar products. However, remember that Google judges the content of eCommerce websites the same way it judges regular content sites, and taking shortcuts could lead to duplicate content on product pages.

Product page descriptions should be unique, compelling, and robust, especially for mid-tier eCommerce websites that need to scale content production because they don’t have enough domain authority to compete.

Sharing copied content (short paragraphs, specifications, and other content) between pages increases the likelihood that search engines will devalue a product page’s content quality and, subsequently, lower its ranking position.
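To find which product descriptions share too much copy, one common approach (not necessarily how search engines measure it) is to compare overlapping word sequences, or shingles, between pages. This Python sketch computes the Jaccard similarity of three-word shingles for every pair of descriptions; the shingle length and the 0.5 flagging threshold are arbitrary assumptions to tune against your own catalog.

```python
import re
from itertools import combinations
from typing import Dict, List, Set, Tuple


def shingles(text: str, k: int = 3) -> Set[Tuple[str, ...]]:
    """Lowercased k-word sequences from a description."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: Set, b: Set) -> float:
    """Share of shingles the two sets have in common (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def similar_descriptions(descriptions: Dict[str, str], threshold: float = 0.5) -> List[Tuple[str, str, float]]:
    """Pairs of product IDs whose descriptions overlap at or above the threshold."""
    sets = {pid: shingles(text) for pid, text in descriptions.items()}
    pairs = []
    for (id_a, set_a), (id_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            pairs.append((id_a, id_b, round(score, 2)))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)
```

Sorting by score puts the most heavily duplicated pairs first, which is a reasonable order in which to rewrite them.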

Google recently reiterated that there is no duplicate content penalty for copied-and-pasted manufacturer product descriptions. However, we still believe unique, attractive product descriptions are an important part of your eCommerce SEO strategy.

2. Category Pages

Category pages typically include a title and a product grid, which means there is often no unique content on these pages. To avoid this duplicate content issue, category page best practices recommend adding unique descriptions at the top of the page (not the bottom, where search engines give content less weight) that describe which types of products are featured within the category.

There’s no magic number of words or characters to use. However, the more robust the category page content is, the better your chance to maximize traffic from organic search results.

Keep your visitors’ screen resolutions in mind. Intro copy should not push the product grid below the browser fold.

3. Homepage Duplicate Content

Home pages typically have the most incoming link equity and thus serve as highly rankable pages in search engines.

However, a homepage should also be treated like any other page on an eCommerce website, content-wise. Always ensure that unique content fills the majority of the homepage body; a homepage consisting merely of duplicated product blurbs offers little contextual value for search engines.

Avoid using your homepage’s descriptive content in directory submissions and other business listings on external websites. If this has already been done to a large extent, rewriting the homepage descriptive content is the easiest way to fix the issue.

Off-Site Duplicate Content

Similar content that exists across separate eCommerce websites is a real pain point for digital marketers.

Let’s look at some of the most common forms of external (off-site) duplicate content that prevent websites from ranking as well as they could in organic search.

- Manufacturer Product Descriptions

- Duplicate Content on Staging, Development, or Sandbox Websites

- Third-Party Product Feeds (Amazon & Google)

- Affiliate Programs

- Syndicated Content

- Scraped Content

- Classifieds & Auction Sites

1. Manufacturer Product Descriptions

When eCommerce brands use product descriptions supplied by the manufacturer on their own product pages, they are put at an immediate disadvantage. These pages don’t offer any unique value to search engines, so big-brand websites with more robust, higher-quality inbound link profiles rank higher, even if they have the same descriptions.

The only way to fix this is to embark upon the extensive task of rewriting existing product descriptions. You should also ensure that any new products are launched with completely unique descriptions.

We’ve seen lower-tier eCommerce websites increase organic search traffic by as much as 50–100% simply by rewriting product descriptions for half of the website’s product pages, with no manual link-building efforts.

If your products are very time-sensitive (they come in and out of stock as newer models are released), you should make sure that new product pages are only launched with completely unique descriptions.

You can also fill your product pages with unique content through:

- Multiple photos (preferably unique to your site)

- Enhanced descriptions with detailed insight into product benefits

- Product demonstration videos

- Schema markup

- User-generated reviews
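For the schema markup item, product pages commonly carry schema.org `Product` data as JSON-LD. The Python sketch below builds a minimal block; the field selection, the in-stock default, and the sample values are illustrative assumptions, so check Google’s product structured data documentation for required and recommended properties.

```python
import json
from typing import List, Optional


def product_jsonld(name: str, description: str, sku: str, images: List[str], price: float,
                   currency: str = "USD", availability: str = "https://schema.org/InStock",
                   rating: Optional[float] = None, review_count: int = 0) -> str:
    """Render a schema.org Product as a JSON-LD <script> element."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "sku": sku,
        "image": images,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }
    if rating is not None and review_count > 0:
        # Only include ratings that come from real user-generated reviews on the page.
        data["aggregateRating"] = {"@type": "AggregateRating", "ratingValue": rating, "reviewCount": review_count}
    # Escape "</" so text fields cannot close the <script> element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

The `description` passed in should be your own unique copy, not the manufacturer’s text, for the reasons given above.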

2. Duplicate Content on Staging, Development, or Sandbox Websites

Time and time again, development teams forget, give little consideration to, or simply don’t realize that testing sites can be discovered and indexed by search engines. Fortunately, these situations can be easily fixed through different approaches:

- Adding a “noindex,nofollow” meta robots tag or X-Robots-Tag to every page on the test site.

- Blocking search engine crawlers from crawling the sites via a “Disallow: /” command in the “/robots.txt” file on the test site.

- Password-protecting the trial site.

- Setting up these test sites separately within Webmaster Tools and using the “Remove URLs” tool in Google Search Console (or the “Block URLs” tool in Bing Webmaster Tools) to quickly get the entire test site out of Google’s and Bing’s indexes.

If search engines already have a test website indexed, a combination of these approaches can yield the best results.
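Several of these approaches can be combined in code. The WSGI middleware below is a sketch that password-protects a test site with HTTP Basic authentication, adds an `X-Robots-Tag: noindex, nofollow` header to every response, and serves a `Disallow: /` robots.txt. The hard-coded credentials are placeholders; a real setup would load them from configuration, and many teams handle this at the web server or hosting layer instead.

```python
import base64
import hmac

# Placeholder credentials: load real ones from configuration, never hard-code them.
STAGING_USER = "staging"
STAGING_PASSWORD = "change-me"
NOINDEX = ("X-Robots-Tag", "noindex, nofollow")
ROBOTS_DISALLOW_ALL = b"User-agent: *\nDisallow: /\n"


def _authorized(environ) -> bool:
    """Check an HTTP Basic Authorization header against the staging credentials."""
    header = environ.get("HTTP_AUTHORIZATION", "")
    if not header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(header[len("Basic "):]).decode("utf-8")
    except ValueError:  # covers binascii.Error and UnicodeDecodeError
        return False
    user, _, password = decoded.partition(":")
    user_ok = hmac.compare_digest(user.encode(), STAGING_USER.encode())
    password_ok = hmac.compare_digest(password.encode(), STAGING_PASSWORD.encode())
    return user_ok and password_ok


def protect_staging(app):
    """WSGI middleware: robots.txt blocks everything, other pages need a password, all are noindexed."""
    def wrapped(environ, start_response):
        if environ.get("PATH_INFO") == "/robots.txt":
            start_response("200 OK", [("Content-Type", "text/plain"), NOINDEX])
            return [ROBOTS_DISALLOW_ALL]
        if not _authorized(environ):
            start_response("401 Unauthorized", [("WWW-Authenticate", 'Basic realm="staging"'), NOINDEX])
            return [b"Authentication required"]

        def start_with_noindex(status, headers, exc_info=None):
            return start_response(status, list(headers) + [NOINDEX], exc_info)

        return app(environ, start_with_noindex)
    return wrapped
```

Wrapping the test site’s application object, for example `application = protect_staging(application)`, applies all three protections without touching page templates.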

3. Third-Party Product Feeds (Amazon & Google)

For good reason, eCommerce websites see the value in adding their products to third-party shopping websites. What many marketing managers don’t realize is that this creates duplicate content across these external domains. Even worse, products on third-party websites can end up outranking the original website’s product pages.

Consider this scenario: A product manufacturer with its own website feeds its products to Amazon to increase sales.

But serious SEO problems have just been created. Amazon is one of the most authoritative websites in the world, and its product pages are almost guaranteed to outrank the manufacturer’s website.

Some may view this simply as revenue displacement, but it is clearly going to put an SEO’s job in jeopardy when organic search traffic (and the resulting revenue) plummets for the original website.

The solution to this problem is exactly what you would expect: Ensure that the product descriptions fed to third-party sites are different from those on your brand’s website. We recommend giving the manufacturer description to third-party shopping feeds like Google, and writing a more robust, unique description for your own website.
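One way to enforce this split is to keep both descriptions in your product data and have the feed export refuse to send your on-site copy. In this Python sketch, the `Product` fields and the `id`/`title`/`description`/`link` column names are illustrative assumptions modeled on a typical shopping feed, not a complete feed specification.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Product:
    sku: str
    title: str
    link: str
    manufacturer_description: str  # copy supplied by the manufacturer
    site_description: str          # unique copy written for your own website


def build_feed_csv(products: Iterable[Product]) -> str:
    """Export a shopping feed that uses the manufacturer copy, never the on-site copy."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["id", "title", "description", "link"])
    for product in products:
        if product.manufacturer_description.strip() == product.site_description.strip():
            raise ValueError(f"{product.sku}: feed description matches the on-site description")
        writer.writerow([product.sku, product.title, product.manufacturer_description, product.link])
    return buffer.getvalue()
```

Failing loudly on a match is a design choice: it stops a product from shipping to a feed until someone has written unique copy for your own page.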

Bottom line: Always give your own website the edge when it comes to authoritative and robust content.

4. Affiliate Programs

If your site offers an affiliate program, don’t distribute your own site’s product descriptions to your affiliates. Instead, provide affiliates with the same product feeds that are given to other third-party vendors who sell or promote your products.

For maximum ranking potential in search engines, make sure no affiliates or third-party vendors use the same descriptions as your site.

5. Syndicated Content

Many eCommerce brands host blogs to provide more marketable content, and some of them will even syndicate that content out to other websites.

Without proper SEO protocols, this can create external duplicate content. And if the syndication partner is a more authoritative website, its hosted copy may outrank the original page on the eCommerce website.

There are a few different solutions here:

- Ensure that the syndication partner canonicalizes the content to the URL on the eCommerce site where it originated. Any inbound links to the content on the syndication partner’s website will then be applied to the original article on the eCommerce website.

- Ensure that the syndication partner applies a “noindex,follow” meta robots tag or X-Robots-Tag to the syndicated content on its site.

- Don’t take part in content syndication. Focus on safer channels of traffic growth and brand development.
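Before relying on either of the first two options, it is worth confirming that the partner actually applied them. This Python sketch parses a syndicated page’s HTML with the standard-library `html.parser` and reports whether it carries a canonical pointing back to your original URL or a robots `noindex` meta tag; fetching the partner page (and checking for an `X-Robots-Tag` header) is left out as an assumption about your tooling.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect the canonical URL and meta robots directives from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.robots = set()

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}  # names arrive lowercased
        rel_values = attributes.get("rel", "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.canonical = attributes.get("href", "").strip()
        elif tag == "meta" and attributes.get("name", "").lower() == "robots":
            self.robots |= {d.strip() for d in attributes.get("content", "").lower().split(",")}


def syndication_protected(partner_html: str, original_url: str) -> bool:
    """True if the partner page canonicalizes to the original or is noindexed."""
    signals = HeadSignals()
    signals.feed(partner_html)
    signals.close()
    if signals.canonical and signals.canonical.rstrip("/") == original_url.rstrip("/"):
        return True
    return "noindex" in signals.robots
```

Running this periodically against each partner URL catches cases where a site redesign on the partner’s side silently drops the canonical tag.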

6. Scraped Content

Sometimes, low-quality scraper sites steal content to generate traffic and drive revenue through ads. Furthermore, actual competitors can steal content (even rewritten manufacturer descriptions), which can threaten the original source’s visibility and rankability in search engines.

While search engines have gotten much better at identifying these spammy sites and filtering them out of search results, they can still pose a problem.

The best way to handle this is to file a DMCA complaint with Google, or an Intellectual Property Infringement report with Bing, to alert search engines to the problem and ultimately get offending sites removed from search results.

An important caveat: The piece of content must be your own. If you’re using manufacturer product descriptions, you’ll have difficulty convincing search engines that the scraper site is truly violating your copyright. This might be a little easier if the scraper site displays your entire web page on its own site, with clear branding of your website.

7. Classifieds & Auction Sites

Many eCommerce sites experience content duplication issues when other people or retailers copy their product descriptions to Craigslist, eBay, and other auction and classifieds sites. Fighting this issue is an uphill battle that’s likely to require more effort than it’s worth. Fortunately, pages on these sites expire relatively quickly.

What is within your control is your own product listings on classifieds and auction sites. Be mindful of any content duplication, and use your product feed for these sites where possible. At the same time, ensure that your own site’s product pages (and page updates) are crawled and indexed as regularly as possible.
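An XML sitemap with accurate `lastmod` dates is one standard way to signal which of your own product pages have changed and are worth recrawling. This Python sketch builds one with `xml.etree.ElementTree`, following the sitemaps.org protocol; the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, date]]) -> str:
    """Render <urlset> entries with <loc> and <lastmod> for each (url, last_modified) pair."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, last_modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as "&"
        ET.SubElement(url, "lastmod").text = last_modified.isoformat()
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")
```

Updating `lastmod` only when a product page actually changes keeps the signal meaningful.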

What is Thin Content for eCommerce?

Thin content applies to any page on your site with little to no content that adds unique value for the website or the user. It provides terrible user experiences and can get your eCommerce website penalized if the problem grows beyond Google’s unknown threshold of what it deems acceptable.

Examples of Thin Content in eCommerce

Here are some examples of scenarios where thin content could occur on an eCommerce site.

Thin/Empty Product Descriptions

As mentioned above, it’s easy for online brands to take shortcuts with product descriptions. Doing so, however, can limit your site’s organic search traffic and conversion potential.

Search engines want to rank the best content for their users, and users want clear explanations to help them with their purchasing decisions. When product pages only include one or two sentences, this helps no one.

The solution: Ensure that your product descriptions are as thorough and detailed as possible. Start by jotting down 5 to 10 questions a customer might ask about the product, write the answers, and then work them into the product description.
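A rough first pass at finding thin descriptions is to flag those below a word and sentence count. The thresholds in this Python sketch are hypothetical; the sentence cutoff simply echoes the one-or-two-sentence example above, and a person still needs to judge whether a flagged description answers the questions customers actually ask.

```python
import re
from typing import Dict, List, Tuple

MIN_WORDS = 50      # hypothetical threshold
MIN_SENTENCES = 3   # one or two sentences is the thin case described above


def description_stats(text: str) -> Tuple[int, int]:
    """Approximate word and sentence counts for a description."""
    words = re.findall(r"\w+", text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return len(words), len(sentences)


def thin_descriptions(descriptions: Dict[str, str]) -> List[str]:
    """IDs of products whose descriptions fall below either threshold."""
    flagged = []
    for product_id, text in descriptions.items():
        words, sentences = description_stats(text)
        if words < MIN_WORDS or sentences < MIN_SENTENCES:
            flagged.append(product_id)
    return flagged
```

The flagged IDs make a natural worklist for the question-and-answer rewriting approach described above.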

Test or Orphaned Pages

Nearly every website has outlying pages that were published as test pages, forgotten about, and are now orphaned on the site. Guess who is still finding them? Search engines.

Sometimes these pages are duplicates of others. Other times they have partially written content, and some may be completely empty.

Ensure that all published and indexable content on your website is strong and provides value to users.
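Orphaned pages can be found by comparing the full list of published URLs (from the CMS or sitemap) against the pages reachable by following internal links from the homepage. This Python sketch does a breadth-first search over a link graph you would build from a crawl export; the data structures are assumptions for illustration.

```python
from collections import deque
from typing import Dict, Iterable, List


def find_orphans(published: Iterable[str], links: Dict[str, List[str]], start: str = "/") -> List[str]:
    """Published pages that cannot be reached by following internal links from `start`."""
    reachable = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(set(published) - reachable)
```

Each orphan can then be reviewed and either improved and linked, redirected, or removed.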

Thin Category Pages

Marketers can sometimes get carried away with category creation. Bottom line: If a category is only going to include a few products (or none), then don’t create it.

A category with only one to three products doesn’t provide a great browsing experience. Too many of these thin category pages (coupled with other forms of duplicate content) can lead to a site being penalized.

The same solution applies here: Ensure that your category pages are robust, with both unique intro descriptions and sufficient product listings.

Thin content can also occur when drilling down into faceted category navigation until a page with no products is reached. These are called “stub pages,” and when too many exist, they can lower search engines’ qualitative assessment of a site.

We recommend applying a conditional “noindex,follow” meta robots tag or X-Robots-Tag to these pages whenever the CMS displays its standard empty-state wording (“No products exist”) on the page. For more technical solutions, we recommend this guide from Moz.
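The conditional rule can be sketched as a small template helper. In this Python version, the stub check uses the product count when the template has it and falls back to the CMS’s empty-state wording; the phrase list is an assumption to replace with your platform’s actual message.

```python
from typing import Optional

# Hypothetical empty-state wording; copy the exact text your CMS renders.
STUB_PHRASES = ("no products exist",)


def category_meta_robots(page_text: str, product_count: Optional[int] = None) -> str:
    """Emit noindex,follow for faceted 'stub' pages that list no products."""
    text = page_text.lower()
    is_stub = product_count == 0 or any(phrase in text for phrase in STUB_PHRASES)
    content = "noindex,follow" if is_stub else "index,follow"
    return f'<meta name="robots" content="{content}">'
```

Checking the product count directly is less brittle than matching text, since template wording tends to change during redesigns.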

Thin & Duplicate Content Tools

Discovering thin and duplicate content can be one of the most difficult and time-intensive tasks website owners or marketers undertake.

This final section of our guide will show you how to find duplicate content on your website with a few commonly used tools.

Google Search Console

Many duplicate content issues (and even thin content issues) can be discovered through Google Search Console, which is free for any website.

Here’s how:

- HTML Improvements: GSC will point out specific URLs with duplicate title tags and duplicate meta descriptions. Look for patterns, such as “duplicate title tags” and “duplicate meta descriptions” caused by category pages with URL parameters, orphaned pages with “missing title tags,” etc.

- Index Status: GSC will show a historical graph of the number of pages from your eCommerce site in its index. If the graph spikes upward at any point, and there was no corresponding increase in content creation on your end, it could be an indication that duplicate or low-quality URLs have made their way into Google’s index.

- URL Parameters: GSC will tell you when it’s having trouble crawling and indexing your site. This feature is fantastic for identifying URL parameters (particularly on category pages) that could lead to technically created duplicate URLs. Use search operators (see below) to see if Google has URLs with these parameters in its index, and then determine whether they are duplicate or thin content.

- Crawl Errors: If your website’s soft 404 errors have spiked, it could indicate that many low-quality pages have been indexed because improper 404 error pages are being produced. These pages will often display an error message as their only body content. They may also have different URLs, which can cause technical duplicate content.
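If you want to reproduce the duplicate-title check yourself from a crawl export (for example, a CSV from one of the crawlers covered below), grouping rows by normalized title or meta description is enough. This Python sketch assumes each row is a dict with `url`, `title`, and `meta_description` keys; those column names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def duplicate_groups(rows: Iterable[Dict[str, str]], field: str) -> Dict[str, List[str]]:
    """Map each repeated (normalized) value of `field` to the URLs that share it."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for row in rows:
        value = " ".join((row.get(field) or "").split()).lower()  # collapse whitespace, ignore case
        if value:
            groups[value].append(row["url"])
    return {value: urls for value, urls in groups.items() if len(urls) > 1}
```

Groups whose URLs differ only by a query parameter, like the ?dir=asc example in the test data, usually point back to the canonicalization fixes covered earlier.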

Moz Site Crawl Tool

Moz offers a Site Crawl tool, which identifies internal duplicate page content, not just duplicate metadata.

Duplicate content is flagged as a “high priority” issue in the Moz Site Crawl tool. Use the tool to export reported pages with duplicate content and make the necessary fixes.

Inflow’s CruftFinder SEO Tool

Inflow’s CruftFinder SEO tool helps you boost the quality of your domain by cleaning up “cruft” (junk URLs and low-quality pages), reducing index bloat, and optimizing your crawl budget.

It’s primarily meant to be a diagnostic tool, so use it during your audit process, especially on older sites or when you’ve recently migrated to a new platform.

Search Query Operators

Using search query operators in Google is one of the most effective ways of identifying duplicate and thin content, especially after potential problems have been identified in Webmaster Tools.

The following operators are particularly helpful:

site:

This operator shows most of the URLs from your site that Google has indexed (but not necessarily all of them). It is a quick way to gauge whether Google has an excessive number of URLs indexed for your site compared to the number of URLs in your sitemap (assuming your sitemap is correctly populated with all of your actual content URLs).

Example: site:www.domain.com

inurl:

Use this operator in conjunction with the site: operator to discover whether URLs with particular parameters are indexed by Google. Remember: Potentially harmful URL parameters (those creating duplicate content) can be identified in the URL Parameters section of GSC; this operator tells you whether Google has actually indexed them.

Example: site:www.domain.com inurl:?price=

This operator can also be used in a “negative” manner to identify any non-www URLs indexed by Google.

Example: site:domain.com -inurl:www

intitle:

This operator displays all URLs indexed by Google with specific words in the title tag. This can help identify duplicates of a particular page, such as a product page that may also have a “review page” indexed by Google.

Example: site:www.domain.com intitle:Maglite LED XL200

Plagiarism, Crawler & Duplicate Content Tools

A number of helpful third-party tools can identify duplicate and low-quality content.

Some of our favorite duplicate content tools include:

Copyscape

This tool is particularly useful for identifying external “editorial” duplicate content.

Copyscape crawls a website’s sitemap and compares all its URLs to the rest of Google’s index, looking for instances of plagiarism. For eCommerce brands, this can identify the worst-offending product pages when it comes to copied-and-pasted manufacturer product descriptions.

Export the data as a CSV file and sort by risk score to quickly prioritize the pages with the most duplicate content.

Screaming Frog

Screaming Frog is very popular with advanced SEO professionals. It crawls a website and identifies potential technical issues, such as duplicate content, improper redirects, error messages, and more.

Exporting a crawl and segmenting duplicate content issues can provide additional insight that GSC doesn’t offer.

Siteliner

Siteliner is a quick way to identify the pages on your eCommerce site with the most internal duplicate content. How much duplicate content the tool reports depends on how much unique content each page has compared to the elements repeated across every page (header, sidebar, footer, etc.).

It’s especially helpful for finding thin content pages.

Getting Started

While the tools and technical tips recommended above can help you identify duplicate, thin, and low-quality content, nothing compares to years of experience with duplicate content issues and solutions.

Good news: As you identify these specific issues on your website, you’ll develop a wealth of knowledge that you can use to keep cleaning up these issues and to prevent them in the future.

Start crawling and fixing today, and you’ll thank yourself for it tomorrow.

Don’t have the time or resources to tackle this SEO project? Rely on our team of experts to help clean up your website. Request a free proposal from our team anytime.
