
AI is reshaping every field by making skills such as coding and data visualization, which weren’t available to most people in the past, accessible to everyone.

An AI operator who can run the right prompts can perform tasks of low and medium difficulty, allowing more focus on strategic decision-making.

In this guide, we will walk you step by step through how to use AI chatbots, with ChatGPT as an example, to run complex BigQuery queries for your SEO reporting needs.

We will review two examples:

- How to Analyze Traffic Decline Because of Google Algorithm Impact

- How to Combine Search Traffic Data With Engagement Metrics from GA4

It will also give you an overall idea of how you can use chatbots to reduce the burden of running SEO reports.

Why Do You Need To Learn BigQuery?

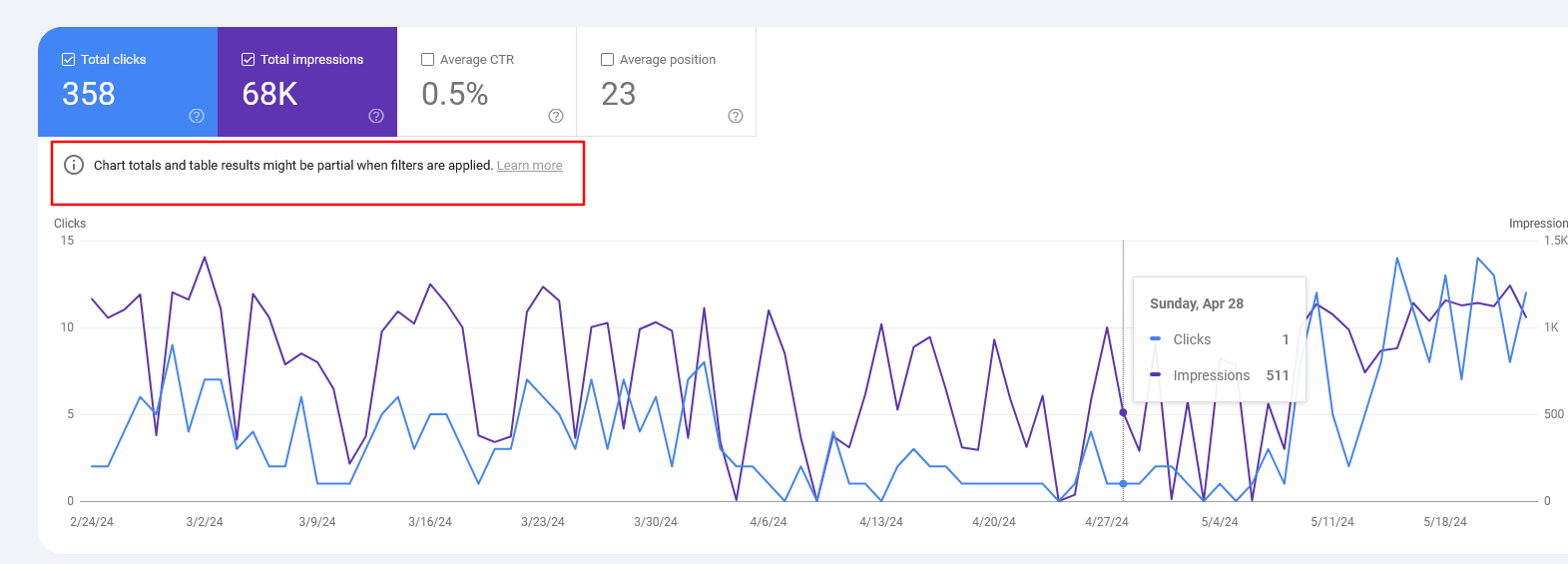

SEO tools like Google Search Console or Google Analytics 4 have accessible user interfaces you can use to access data. But they often limit what you can do and show incomplete data, which is usually called data sampling.

In GSC, this happens because the tool omits anonymized queries and limits tables to 1,000 rows.

Screenshot from Google Search Console, May 2024


By using BigQuery, you can solve that problem and run any complex report you want, eliminating the data sampling issue that occurs quite often when working with large websites.

(Alternatively, you may try using Looker Studio, but the purpose of this article is to illustrate how you can operate ChatGPT for BigQuery.)

For this article, we assume you have already connected your GSC and GA4 accounts to BigQuery. If you haven’t done it yet, you may want to check our guides on how to do it:

SQL Basics

If you know Structured Query Language (SQL), you may skip this section. But for those who don’t, here is a quick reference to SQL statements:

| Statement | Description |
|---|---|
| SELECT | Retrieves data from tables |
| INSERT | Inserts new data into a table |
| UNNEST | Flattens an array into a set of rows |
| UPDATE | Updates existing data within a table |
| DELETE | Deletes data from a table |
| CREATE | Creates a new table or database |
| ALTER | Modifies an existing table |
| DROP | Deletes a table or a database |

Here are the conditions we will be using, so you can familiarize yourself with them:

| Condition | Description |
|---|---|
| WHERE | Filters records for specific conditions |
| AND | Combines two or more conditions where all conditions must be true |
| OR | Combines two or more conditions where at least one condition must be true |
| NOT | Negates a condition |
| LIKE | Searches for a specified pattern in a column |
| IN | Checks if a value is within a set of values |
| BETWEEN | Selects values within a given range |
| IS NULL | Checks for null values |
| IS NOT NULL | Checks for non-null values |
| EXISTS | Checks if a subquery returns any records |
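To see several of these conditions working together, here is a minimal sketch in Python. It uses the built-in sqlite3 module as a local stand-in for BigQuery (an assumption for illustration only: these simple filters behave the same way here, but SQLite and BigQuery SQL differ in many other respects). The table name `gsc_sample` and all of its rows are made up.

```python
import sqlite3

# Local, in-memory stand-in for a BigQuery table; all rows are made-up examples.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE gsc_sample (url TEXT, query TEXT, clicks INTEGER, impressions INTEGER)"
)
conn.executemany(
    "INSERT INTO gsc_sample VALUES (?, ?, ?, ?)",
    [
        ("https://example.com/blog/seo-guide", "seo guide", 120, 3000),
        ("https://example.com/blog/bigquery", "bigquery seo", 45, 900),
        ("https://example.com/pricing", "pricing", 10, 200),
        ("https://example.com/blog/ga4", None, 30, 1500),  # NULL query, like an anonymized one
    ],
)

# WHERE with AND, LIKE, BETWEEN, and IS NOT NULL: blog pages with 20-200 clicks
# whose query text is known.
rows = conn.execute(
    """
    SELECT url, clicks
    FROM gsc_sample
    WHERE url LIKE '%/blog/%'
      AND clicks BETWEEN 20 AND 200
      AND query IS NOT NULL
    ORDER BY clicks DESC
    """
).fetchall()

# IN: match any value from a fixed list.
rows_in = conn.execute(
    "SELECT url FROM gsc_sample WHERE query IN ('pricing', 'seo guide') ORDER BY url"
).fetchall()

print(rows)
print(rows_in)
```

Here `rows` keeps only the two blog URLs with a known query: the GA4 page is dropped by `IS NOT NULL` and the pricing page by `LIKE`.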

Now, let’s dive into examples of how you can use BigQuery via ChatGPT.

1. How To Analyze Traffic Decline Because Of Google Algorithm Impact

If you have been affected by a Google algorithm update, the first thing you should do is run reports on the affected pages and analyze why you have been impacted.

Remember, the worst thing you can do is start changing things on the website right away in panic mode. This may cause fluctuations in search traffic and make analyzing the impact even harder.

If you have fewer pages in the index, you may find the GSC UI data satisfactory for your analysis, but if you have tens of thousands of pages, it won’t let you export more than 1,000 rows (either pages or queries) of data.

Say you have a week of data since the algorithm update finished rolling out and want to compare it with the previous week’s data. To run that report in BigQuery, you may start with this simple prompt:

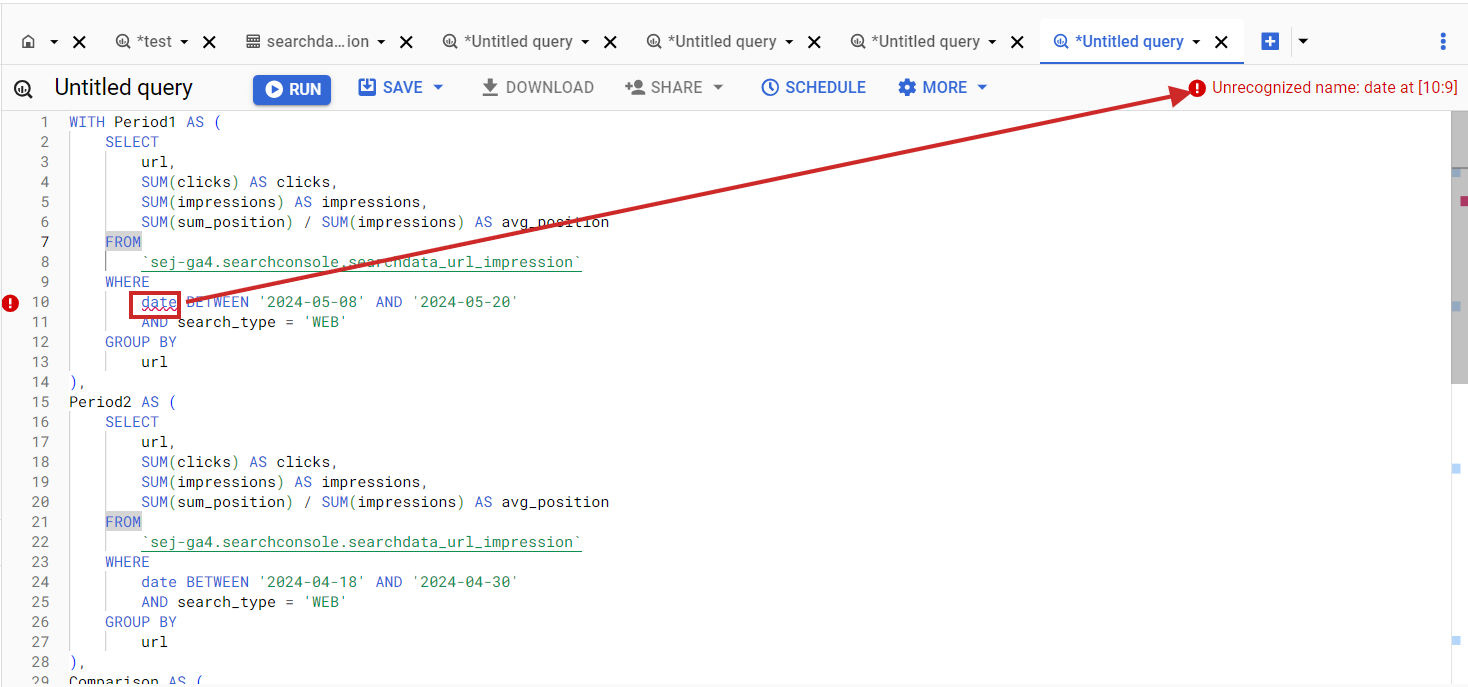

Once you get the SQL code, copy and paste it into the BigQuery SQL editor, but I bet the first code you get will have errors. For example, column names may not match what is in your BigQuery dataset.

Error in BigQuery SQL when a column name doesn’t match the dataset column.


Things like this happen quite often when performing coding tasks via ChatGPT. Now, let’s dive into how you can quickly fix issues like this.

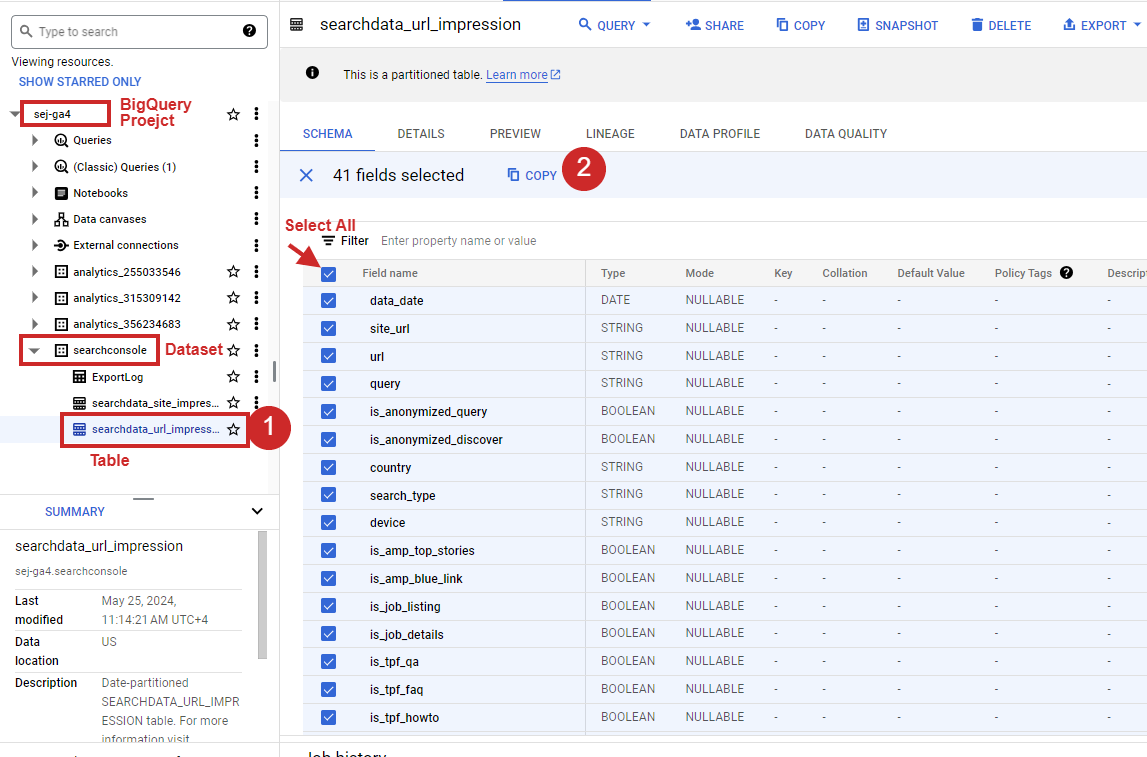

Simply click on your dataset in the left-hand panel, select all columns on the right side, and click Copy as Table.

How to select all columns of the table in BigQuery.


Once you have it, just copy and paste it as a follow-up prompt and hit Enter.

This will fix the generated SQL according to the GSC table structure, as follows:

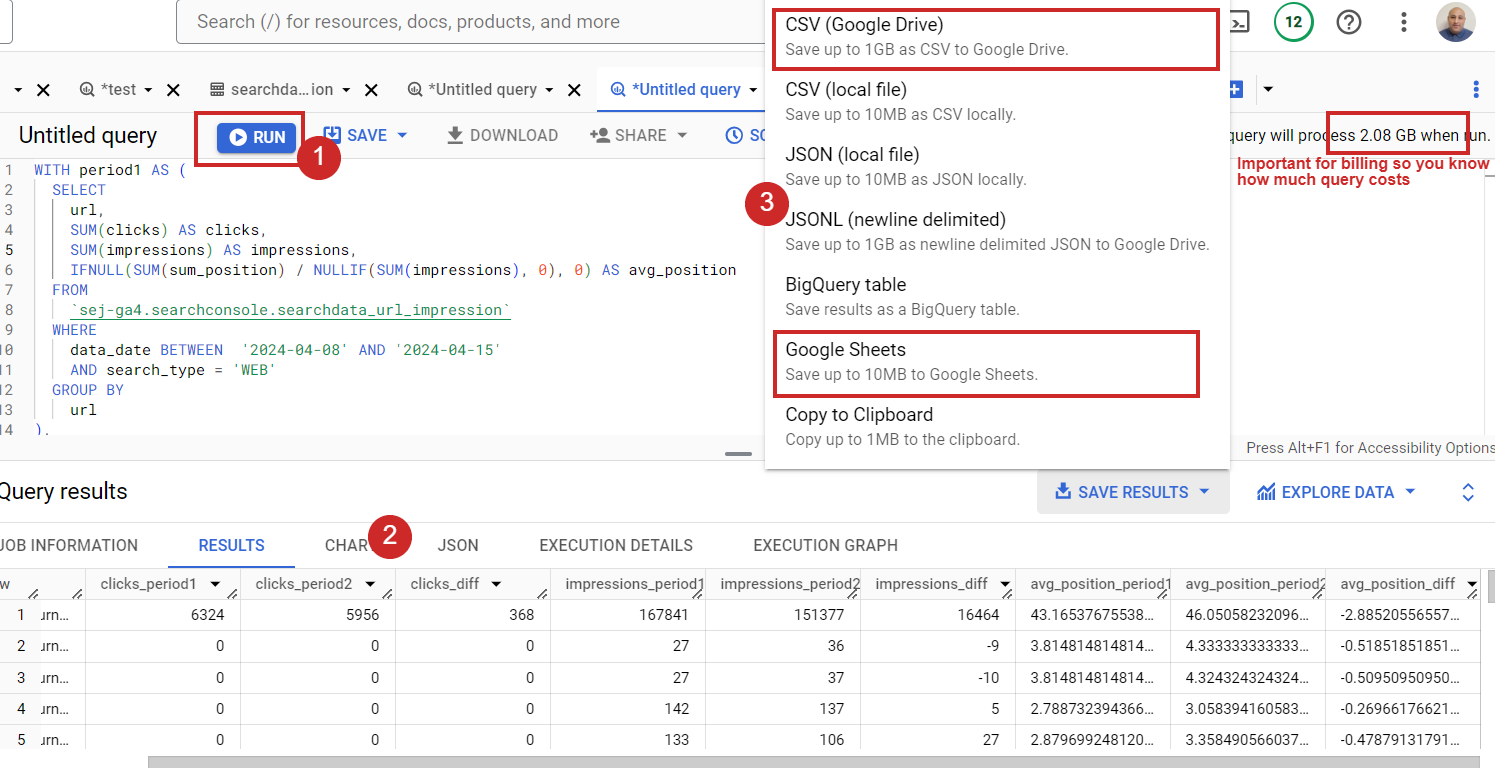
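To make the week-over-week logic concrete, here is a minimal sketch of such a comparison. It runs against Python’s built-in sqlite3 as a local stand-in, so it is not BigQuery SQL: in BigQuery, `data_date` is a DATE column and you would pass dates as query parameters (for example `@prev_start`) or DATE literals. The table name follows the GSC bulk export table `searchdata_url_impression`, but only four columns are modeled, and the rows and date windows are made up.

```python
import sqlite3

# Hypothetical table named after the GSC bulk export table; only four of its
# columns are modeled, and the rows are made up. Dates are ISO strings.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE searchdata_url_impression "
    "(data_date TEXT, url TEXT, clicks INTEGER, impressions INTEGER)"
)
conn.executemany(
    "INSERT INTO searchdata_url_impression VALUES (?, ?, ?, ?)",
    [
        ("2024-05-01", "/a", 100, 1000),
        ("2024-05-03", "/a", 50, 500),
        ("2024-05-09", "/a", 60, 900),
        ("2024-05-02", "/b", 20, 400),
        ("2024-05-10", "/b", 35, 450),
    ],
)

# Clicks per URL in the week before vs. the week after an assumed rollout end
# date, sorted so the biggest losers come first.
WEEK_OVER_WEEK = """
SELECT url, clicks_before, clicks_after, clicks_after - clicks_before AS click_change
FROM (
  SELECT
    url,
    SUM(CASE WHEN data_date BETWEEN :prev_start AND :prev_end THEN clicks ELSE 0 END) AS clicks_before,
    SUM(CASE WHEN data_date BETWEEN :cur_start AND :cur_end THEN clicks ELSE 0 END) AS clicks_after
  FROM searchdata_url_impression
  WHERE data_date BETWEEN :prev_start AND :cur_end
  GROUP BY url
)
ORDER BY click_change ASC
"""

rows = conn.execute(
    WEEK_OVER_WEEK,
    {
        "prev_start": "2024-05-01",
        "prev_end": "2024-05-07",
        "cur_start": "2024-05-08",
        "cur_end": "2024-05-14",
    },
).fetchall()

for url, before, after, change in rows:
    print(f"{url}: {before} -> {after} ({change:+d})")
```

The conditional `SUM(CASE ...)` pattern lets one pass over the table produce both weeks side by side, and sorting by the change puts the pages that lost the most clicks at the top.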

Now, run it and enjoy the data, either by exporting it to CSV or to Google Sheets.

How to run SQL in BigQuery.


If you have millions of URLs, you may not be able to work in Google Sheets or with a CSV export because the data is too big. Plus, those apps have limitations on how many rows a single document can hold. In that case, you can save the results as a BigQuery table and connect to it with Looker Studio to view the data.

But please remember that BigQuery is a freemium service. It is free up to 1 TB of processed query data a month. Once you exceed that limit, your credit card will be automatically charged based on your usage.

That means if you connect BigQuery to Looker Studio and browse your data there, it will count against your billing every time you open your Looker Studio dashboard.
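To get a feel for how quickly dashboard refreshes add up, here is a rough Python estimate. It assumes on-demand pricing billed per TiB of data processed, with a 1 TiB monthly free tier (BigQuery quotes this allowance in TiB), and it takes the price as a parameter: the `5.0` used below is a placeholder, not the real rate, so check the current BigQuery pricing page for your region. The function name and the scan sizes are hypothetical.

```python
TIB = 2**40  # on-demand query pricing is quoted per TiB (2**40 bytes)


def estimate_monthly_query_cost(bytes_per_run, runs_per_month, price_per_tib, free_tib=1.0):
    """Rough on-demand query cost after the monthly free tier.

    price_per_tib is an input, not a real price. This ignores result caching,
    per-query billing minimums, and storage charges.
    """
    processed_tib = bytes_per_run * runs_per_month / TIB
    billable_tib = max(0.0, processed_tib - free_tib)
    return billable_tib * price_per_tib


# A dashboard whose queries scan 20 GiB per load, opened 100 times a month.
dashboard_cost = estimate_monthly_query_cost(20 * 2**30, 100, price_per_tib=5.0)
# A small report scanning 1 GiB per run, run 100 times: stays inside the free tier.
report_cost = estimate_monthly_query_cost(2**30, 100, price_per_tib=5.0)

print(round(dashboard_cost, 2), report_cost)
```

The dashboard example processes about 1.95 TiB in a month, so roughly 0.95 TiB of it becomes billable, while the small report never leaves the free tier.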

That is why, when exports have a few tens of thousands or hundreds of thousands of rows, I prefer using Google Sheets. I can easily connect it to Looker Studio for data visualization and blending, and this will not count against my billing.

If you have ChatGPT Plus, you can simply use this custom GPT I’ve made, which takes into account the table schemas for GA4 and Search Console. In the guide above, I assumed you were using the free version, and it illustrated how you can use ChatGPT in general for running BigQuery.

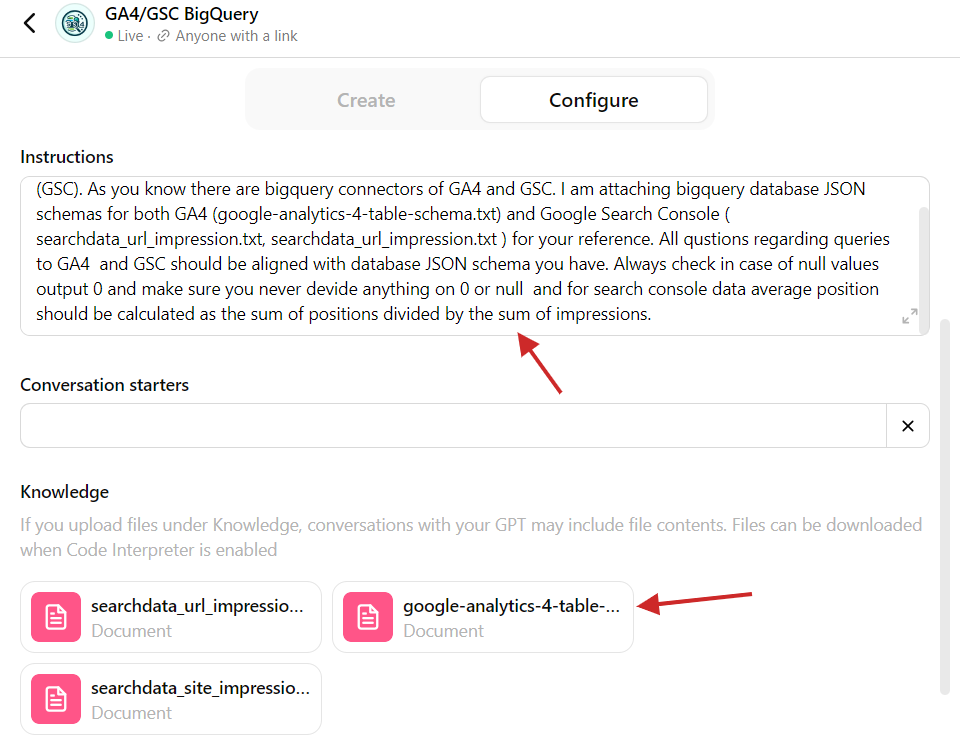

In case you want to know what is in that custom GPT, here is a screenshot of the backend.

Custom GPT with BigQuery table schemas.


Nothing complex: you just need to copy the table schemas from BigQuery as JSON (the same step explained above, but choosing the JSON option) and upload them into the custom GPT so it can refer to the table structure. Additionally, there is a prompt that asks GPT to refer to the attached JSON files when composing queries.
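If you prefer to paste schemas into a chat yourself, a sketch like the one below can flatten a schema copied as JSON into a compact column list to put in front of your question. It assumes the copied JSON is a list of objects with `name`, `type`, and `mode` keys, where nested RECORD fields carry their own `fields` list; the function names, the prompt wording, and the table name `my-project.analytics_123456.events_*` are hypothetical.

```python
import json


def describe_fields(fields, prefix=""):
    """Flatten a BigQuery-style JSON schema into 'path TYPE MODE' lines."""
    lines = []
    for field in fields:
        path = prefix + field["name"]
        lines.append(f"{path} {field['type']} {field.get('mode', 'NULLABLE')}")
        # Nested RECORD fields (such as GA4's event_params) list children under "fields".
        lines.extend(describe_fields(field.get("fields", []), prefix=path + "."))
    return lines


def build_schema_prompt(table_name, schema_text):
    """Turn schema JSON into instructions to paste before your question."""
    column_list = "\n".join(describe_fields(json.loads(schema_text)))
    return (
        f"When writing BigQuery SQL for the table {table_name}, "
        f"use only these columns:\n{column_list}"
    )


# A trimmed, made-up excerpt of a GA4-like schema.
schema_text = json.dumps([
    {"name": "event_date", "type": "STRING", "mode": "NULLABLE"},
    {"name": "event_params", "type": "RECORD", "mode": "REPEATED", "fields": [
        {"name": "key", "type": "STRING", "mode": "NULLABLE"},
        {"name": "value", "type": "RECORD", "mode": "NULLABLE", "fields": [
            {"name": "int_value", "type": "INTEGER", "mode": "NULLABLE"},
        ]},
    ]},
])

prompt = build_schema_prompt("my-project.analytics_123456.events_*", schema_text)
print(prompt)
```

Writing nested fields as dotted paths such as `event_params.value.int_value` gives the model the exact names it should use, which targets the column-mismatch errors described earlier.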

This is another example of how you can use ChatGPT to perform tasks more effectively and eliminate repetitive work.

If you need to work with another dataset (other than GA4 or GSC) and you don’t know SQL, you can upload the table schema from BigQuery into ChatGPT and have it write SQL specific to that table structure. Easy, isn’t it?

As homework, I suggest you analyze which queries have been affected by AI Overviews.

There is no differentiator in the Google Search Console table to do that, but you can run a query to see which pages didn’t lose rankings but had a significant CTR drop after May 14, 2024, when Google introduced AI Overviews.

You can compare the two-week period after May 14 with the two weeks prior. It is still possible that the CTR drop happened because of other search features, like a competitor getting a Featured Snippet, but you should find enough valid cases where your clicks were affected by AI Overviews (formerly Search Generative Experience, or “SGE”).
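Here is a minimal Python sketch of that homework logic, assuming you have already aggregated clicks, impressions, and average position per page for each two-week window (for example, with a query similar to the one in the first example). The function name, the thresholds (at most one position of movement, a relative CTR drop of at least 30%, at least 100 impressions per window), and the sample numbers are hypothetical choices to tune, not recommendations.

```python
def flag_ctr_drops(before, after, max_position_change=1.0,
                   min_relative_ctr_drop=0.3, min_impressions=100):
    """Flag pages whose CTR fell sharply while average position stayed stable.

    before / after map url -> (clicks, impressions, avg_position) for each
    window. All thresholds are hypothetical defaults.
    """
    flagged = []
    for url in sorted(before.keys() & after.keys()):
        clicks0, impr0, pos0 = before[url]
        clicks1, impr1, pos1 = after[url]
        if impr0 < min_impressions or impr1 < min_impressions:
            continue  # too few impressions to judge CTR reliably
        ctr0, ctr1 = clicks0 / impr0, clicks1 / impr1
        if ctr0 == 0:
            continue  # no baseline CTR to compare against
        position_stable = abs(pos1 - pos0) <= max_position_change
        relative_drop = (ctr0 - ctr1) / ctr0
        if position_stable and relative_drop >= min_relative_ctr_drop:
            flagged.append((url, round(ctr0, 4), round(ctr1, 4)))
    return flagged


# Made-up aggregates for the two weeks before and after May 14, 2024.
before = {"/a": (100, 1000, 2.1), "/b": (50, 1000, 3.0), "/c": (80, 1000, 4.0), "/d": (5, 50, 1.0)}
after = {"/a": (40, 1000, 2.3), "/b": (20, 1000, 9.0), "/c": (75, 1000, 4.2), "/d": (1, 50, 1.0)}

print(flag_ctr_drops(before, after))
```

In this sample, only `/a` is flagged: `/b` lost CTR because it also lost rankings, `/c` barely changed, and `/d` has too few impressions to judge.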

2. How To Combine Search Traffic Data With Engagement Metrics From GA4

When analyzing search traffic, it is vital to understand how much users engage with content, because user engagement signals are ranking factors. Please note that I don’t mean the exact metrics defined in GA4.

However, GA4’s engagement metrics, such as “average engagement time per session” (the average time your website was in focus in a user’s browser), may hint at whether your articles are good enough for users to read.

If it is too low, your blog pages may have an issue, and users may not be reading them.

If you combine that metric with Search Console data, you may find that pages with low rankings also have a low average engagement time per session.

Please note that GA4 and GSC have different attribution models. GA4 uses data-driven or last-click attribution models, which means that if someone visits an article page from Google once and then comes back directly two more times, GA4 may attribute all three visits to Google, whereas GSC will report only one click.

So, the combined data is not 100% accurate and may not be suitable for corporate reporting, but having engagement metrics from GA4 alongside GSC data provides valuable information for analyzing how your rankings correlate with engagement.

Using ChatGPT with BigQuery requires a little preparation. Before we jump into the prompt, I suggest you read how GA4 tables are structured, as they are not as simple as GSC’s tables.

GA4 tables have an event_params column, which is a repeated RECORD (an array of key/value pairs) and contains dimensions like page_location, ga_session_id, and engagement_time_msec. The last of these tracks how long a user actively engages with your website.
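To make the nested structure more concrete, here is a minimal Python sketch that models one GA4 event as a dictionary, assuming the export’s usual shape: a list of entries with a `key` and a `value` record holding fields such as `string_value` and `int_value`. The helper `get_param` is hypothetical; it mimics the common BigQuery pattern `(SELECT value.int_value FROM UNNEST(event_params) WHERE key = 'engagement_time_msec')`, and the event values are made up.

```python
def get_param(event, key, value_field):
    """Return the value of one event parameter, or None if it is missing.

    Python equivalent of the BigQuery pattern
    (SELECT value.<value_field> FROM UNNEST(event_params) WHERE key = '<key>').
    """
    for param in event.get("event_params", []):
        if param["key"] == key:
            return param["value"].get(value_field)
    return None


# One made-up GA4 event, shaped like a row of the export.
event = {
    "event_name": "user_engagement",
    "user_pseudo_id": "1234.5678",
    "event_params": [
        {"key": "page_location", "value": {"string_value": "https://example.com/blog/a"}},
        {"key": "ga_session_id", "value": {"int_value": 1715700000}},
        {"key": "engagement_time_msec", "value": {"int_value": 4200}},
    ],
}

print(get_param(event, "page_location", "string_value"))
print(get_param(event, "engagement_time_msec", "int_value"))
print(get_param(event, "engagement_time_msec", "string_value"))
```

Note that asking for the wrong value field, such as `string_value` for an integer parameter, quietly returns `None`, just as the equivalent SQL returns NULL.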

The engagement_time_msec key in event_params is not the total time on the site but the time spent on specific interactions (like clicking or scrolling), where each interaction adds a new piece of engagement time. It is like adding up all the little moments when users are actively using your website or app.

Therefore, if we sum that metric for each page and divide it by the number of sessions on that page, we get the average engagement time per session.
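The calculation can be sketched in a few lines of Python, assuming the events have already been flattened into made-up `(page_location, user_pseudo_id, ga_session_id, engagement_time_msec)` rows. The function name is hypothetical. One design choice worth noting: `ga_session_id` is only unique per user, so the sketch identifies a session by the pair of `user_pseudo_id` and `ga_session_id`.

```python
from collections import defaultdict


def avg_engagement_per_session(rows):
    """Average engagement time per session, in seconds, for each page.

    rows: (page_location, user_pseudo_id, ga_session_id, engagement_time_msec)
    tuples, one per event. engagement_time_msec may be None for events that
    don't carry the parameter.
    """
    total_msec = defaultdict(int)
    sessions = defaultdict(set)
    for page, user_id, session_id, msec in rows:
        # ga_session_id is only unique per user, so a session is the pair.
        sessions[page].add((user_id, session_id))
        total_msec[page] += msec or 0
    return {page: total_msec[page] / len(sessions[page]) / 1000 for page in sessions}


events = [
    ("/a", "u1", 1, 3000),
    ("/a", "u1", 1, 2000),
    ("/a", "u2", 1, 1000),  # same ga_session_id value, different user
    ("/b", "u1", 2, None),  # event without engagement_time_msec
    ("/b", "u3", 7, 4000),
]
print(avg_engagement_per_session(events))
```

Both pages end up with two sessions here: `/a` totals 6,000 ms and `/b` totals 4,000 ms, giving 3 and 2 seconds per session.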

Now that you understand engagement_time_msec, let’s ask ChatGPT to help us construct a query that pulls GA4 “average engagement time per session” for each URL and combines it with GSC search performance data for articles.

The punctual I would usage is:

Once I copied and pasted it into BigQuery, it gave me results with “average engagement time per session” being all nulls. So, apparently, ChatGPT needs more context and guidance on how GA4 works.

I provided additional knowledge in a follow-up question, taken from GA4’s official documentation on how it calculates engagement_time_msec. I copied and pasted the documentation into the follow-up prompt and asked ChatGPT to refer to that knowledge when composing the query, which helped. (If you get any syntax errors, just copy and paste them as a follow-up question and ask ChatGPT to fix them.)

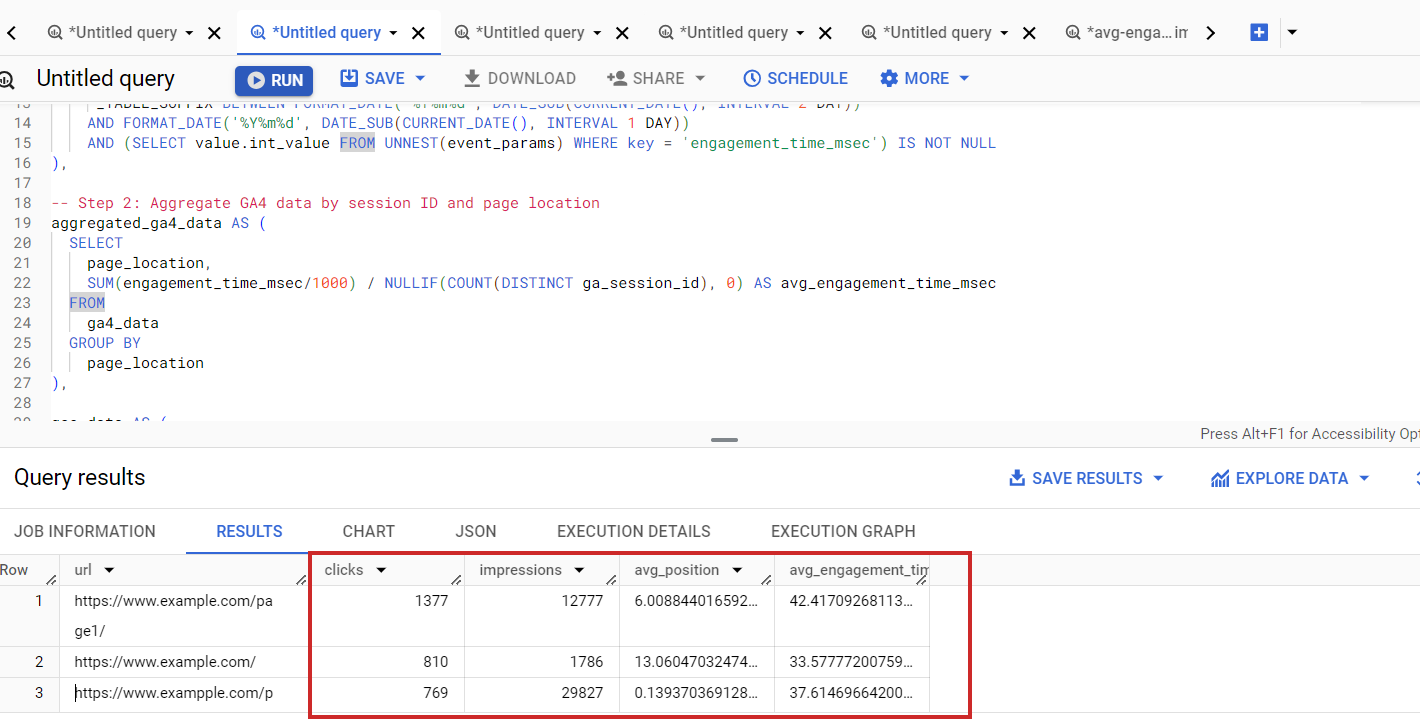

After 15 minutes of effort, I got the right SQL:

This pulls GSC data together with engagement metrics from GA4.

Search Console data combined with GA4
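To illustrate the join step itself, here is a hedged Python sketch; it is not the SQL from this article. It assumes GSC rows of `(url, clicks, impressions)` and GA4 rows of `(page_location, total_engagement_msec, sessions)`, and it uses a hypothetical `normalize_url` rule (lowercase scheme and host, drop query strings and fragments, trim trailing slashes), because GA4’s page_location often carries parameters such as UTM tags that GSC URLs don’t. It behaves like a LEFT JOIN from GSC to GA4.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url):
    """Hypothetical normalization rule: lowercase scheme and host, drop the
    query string and fragment, and trim trailing slashes (keeping "/" for the root)."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, "", ""))


def join_gsc_with_ga4(gsc_rows, ga4_rows):
    """LEFT JOIN GSC rows to GA4 engagement on the normalized URL.

    gsc_rows: (url, clicks, impressions) tuples.
    ga4_rows: (page_location, total_engagement_msec, sessions) tuples.
    Returns (url, clicks, impressions, avg_engagement_sec), with None when
    GA4 has no matching page.
    """
    totals = defaultdict(lambda: [0, 0])  # normalized URL -> [msec, sessions]
    for page_location, msec, sessions in ga4_rows:
        bucket = totals[normalize_url(page_location)]
        bucket[0] += msec
        bucket[1] += sessions
    joined = []
    for url, clicks, impressions in gsc_rows:
        msec, sessions = totals.get(normalize_url(url), (0, 0))
        avg_sec = msec / sessions / 1000 if sessions else None
        joined.append((url, clicks, impressions, avg_sec))
    return joined


gsc = [
    ("https://example.com/blog/a/", 120, 3000),
    ("https://example.com/blog/b", 10, 800),
]
ga4 = [
    ("https://example.com/blog/a?utm_source=newsletter", 60000, 10),
    ("https://Example.com/blog/a", 30000, 20),
]
for row in join_gsc_with_ga4(gsc, ga4):
    print(row)
```

Summing sessions across URL variants is an approximation: a session that loaded both `/blog/a` and `/blog/a?utm_source=newsletter` is counted twice.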


Please note that you might notice discrepancies between the numbers in the GA4 UI and the data queried from BigQuery tables.

This happens because GA4 focuses on “Active Users” and groups rare data points into an “(other)” category, while BigQuery shows all raw data. GA4 also uses modeled data to fill gaps when consent isn’t given, which BigQuery doesn’t include.

Additionally, GA4 may sample data for quicker reports, whereas BigQuery includes all data. These variations mean GA4 offers a quick overview, while BigQuery provides detailed analysis. You can find a more detailed explanation of why this happens in this article.

You may try modifying queries to include only active users to bring the results one step closer to the GA4 UI.

Alternatively, you can use Looker Studio to blend data, but it has limitations with very large datasets. BigQuery offers scalability by processing terabytes of data efficiently, making it ideal for large-scale SEO reports and detailed analyses.

Its advanced SQL capabilities allow complex queries for deeper insights that Looker Studio or other dashboarding tools cannot match.

Conclusion

Using ChatGPT’s coding abilities to compose BigQuery queries for your reporting needs elevates you and opens new horizons where you can combine multiple sources of data.

This demonstrates how ChatGPT can streamline complex data analysis tasks, enabling you to focus on strategic decision-making.

At the same time, these examples taught us that humans absolutely need to operate AI chatbots, because they may hallucinate or produce wrong answers.

More resources:

- Get Started With GSC Queries In BigQuery

- Leveraging Generative AI Tools For SEO

- Advanced Technical SEO: A Complete Guide

Featured Image: NicoElNino/Shutterstock
