Reading Time: 11 minutes

As the predominant web crawler, accounting for nearly 29% of bot hits, Googlebot plays an important role in determining how users access information online.

By continuously scanning millions of domains, Googlebot helps Google’s algorithms understand the vast landscape of the internet and maintain its dominance in search, with over 92% of the global market share.

Despite Googlebot being the most active crawler, many site owners remain unaware of how it functions and what aspects of their site it assesses.

Read on as we demystify Googlebot’s inner workings in 2024 by examining its various types, its core purpose, and how you can optimize your site for this influential bot.

What Is Googlebot and How Does it Work?

Googlebot is the web crawler Google uses to discover and archive web pages for Google Search. To function, Googlebot relies on web crawling to find and read pages on the internet systematically. It discovers new pages by following links on previously encountered pages and through other methods that we’ll outline later.

It then shares the crawled pages with Google’s systems that rank search results. In this process, Googlebot considers both how many pages it crawls (called crawl volume) and how effectively it can index important pages (called crawl efficacy). There are some other concepts that are also important to understand, which I’ll outline below:

Crawl Budget

This is the daily maximum number of URLs that Googlebot will crawl for a specific domain. For most websites and clients, this is not a concern, as Google will allocate enough resources to find all of your content. Of the hundreds of sites I’ve worked on, I’ve only encountered a few instances where the crawl budget was an issue. For one site that had over 100 million pages, Googlebot’s crawls were maxed out at 4 million per day, and that wasn’t enough to get all of the content indexed. I was able to get in touch with Google engineers and have them increase the crawl budget to 8 million daily crawls. This is an extremely rare scenario; most sites will only see a few thousand daily crawls, so for them crawl budget is not a concern.
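To put those numbers in perspective, here is a rough back-of-the-envelope sketch. It assumes a round 100 million URLs and that every crawl fetches a URL not yet seen, which is optimistic because Googlebot also re-crawls known pages. The function name is made up for illustration.

```javascript
// Rough estimate: how many days one full pass over a site takes at a
// given daily crawl volume. Real crawling also re-fetches known URLs,
// so treat the result as a lower bound.
function daysForFullCrawl(totalUrls, crawlsPerDay) {
  if (crawlsPerDay <= 0) {
    throw new RangeError("crawlsPerDay must be positive");
  }
  return totalUrls / crawlsPerDay;
}

console.log(daysForFullCrawl(100_000_000, 4_000_000)); // 25
console.log(daysForFullCrawl(100_000_000, 8_000_000)); // 12.5
```

Even at the doubled budget, a single pass over a site of that size takes well over a week, which is why crawl budget only matters at this scale.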

Crawl Rate

This is the volume of requests that Googlebot makes in a given timeframe. It can be measured in requests per second or, more often, in daily crawls, which you can find in the crawl reports in Google Search Console. Googlebot measures server response time in real time, and if your site starts slowing down, it will lower the crawl rate so as not to slow down the experience for your users. If your site is lacking resources and is slow to respond, Googlebot will not discover and get to new or updated content as quickly.

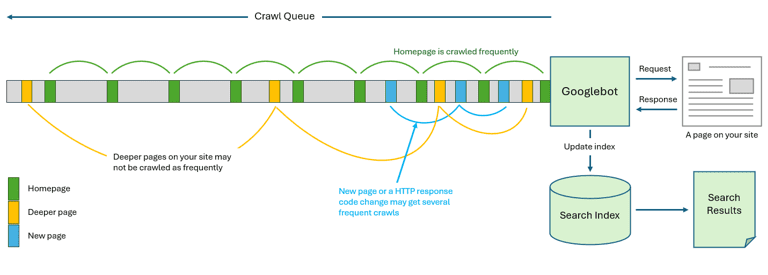

Crawl Queue

This is the list of URLs that Googlebot will crawl. It’s a continuous stream of URLs, and as Google discovers new URLs it has to add them to the queue to be crawled. The process of updating and maintaining the queue is quite complex, and Google has to manage several scenarios, for example:

- When a new page is discovered

- When a page changes HTTP status code (e.g., deleted or redirected)

- When a page is blocked from crawling

- When a site is down for maintenance (usually when an HTTP 503 response is received)

- When the server is unavailable due to a DNS, network, or other infrastructure issue

- When a site’s internal linking changes, altering link signals for an individual page

- When a page’s content changes regularly or was updated since the last crawl

Each of these scenarios will cause Googlebot to reevaluate a URL and change the crawl rate and/or the crawl frequency.

Crawl Frequency

This describes how often Googlebot will crawl an individual URL. Typically your homepage is your most important page and has the strongest link signals, so it will be crawled most often. Conversely, pages that are deeper on your site, meaning they need more clicks to reach from the homepage, will be crawled less frequently. This means that any updates to your homepage will be found by Google pretty quickly, but an update to a product page under 3 subcategories and listed on page 50 may not be crawled for several months.
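Click depth is easy to measure on your own site. The sketch below is a minimal illustration, not a description of how Google computes anything: it runs a breadth-first search over a hypothetical internal-link map (`siteLinks`) and reports the minimum number of clicks from the homepage to each page. It is meant to run as an offline audit script, not as code shipped in your pages.

```javascript
// Hypothetical internal-link graph: each key is a page, each value
// is the list of pages it links to.
const siteLinks = {
  "/": ["/tools", "/about"],
  "/tools": ["/tools/hammers"],
  "/tools/hammers": ["/tools/hammers/claw-hammer"],
  "/about": [],
  "/tools/hammers/claw-hammer": [],
};

// Breadth-first search from the homepage; the depth of a page is the
// minimum number of clicks needed to reach it.
function clickDepths(links, start = "/") {
  const depths = new Map([[start, 0]]);
  const queue = [start];
  while (queue.length > 0) {
    const page = queue.shift();
    for (const target of links[page] ?? []) {
      if (!depths.has(target)) {
        depths.set(target, depths.get(page) + 1);
        queue.push(target);
      }
    }
  }
  return depths;
}

const depths = clickDepths(siteLinks);
console.log(depths.get("/tools/hammers/claw-hammer")); // 3
```

Pages that come back with a large depth, or that never appear in the result at all, are the ones most likely to be crawled rarely.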

When Google discovers a new URL, or the HTTP status changes, Googlebot will often crawl that page again several times to make sure the change is permanent before updating the search index. Many sites become temporarily unavailable for a variety of reasons, so ensuring the changes are stable helps it conserve resources in updating its index and helps searchers.

Below is an illustration showing a simplified view of the crawl queue, how different pages may have different crawl frequencies in the queue, and how a page is requested and makes its way into the search index and then the search results:

How Google Discovers Pages on Your Site

Google uses a variety of methods to discover URLs on your site to add to the crawl queue; it does not just rely on the links that Googlebot finds. Below are a few of the methods of URL discovery:

- Links from other sites

- Internal links

- XML sitemap files

- Web forms that use a GET action

- URLs from Atom, RSS or other feeds

- URLs from Google Ads

- URLs from social media feeds

- URL submissions from Google Search Console

- URLs in emails

- URLs included in JavaScript files

- URLs found in the robots.txt file

- URLs discovered from its other crawlers

All of these, and other methods, contribute to a pool of URLs that can be sent to the crawl queue. This means developers need to be careful how web applications, navigation, pagination and other site features are coded, as they can potentially generate thousands, or millions, of additional low-value URLs that Google may want to crawl.

Developer Pro Tip: When populating a variable in JavaScript, avoid using the forward slash as a delimiter, as it looks like a URL to Google and it will start crawling those URLs, e.g.:

var myCategories = "home/hardware/tools";

If Google finds code like this, it will think it’s a relative URL and start to crawl it.
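One way to sidestep this, sketched here as an assumption rather than documented Google behavior, is to keep the path segments in an array and join them with a non-slash delimiter only where a single string is needed. The `categoryLabel` name and the `" > "` separator are illustrative choices.

```javascript
// Store the category path as an array rather than a slash-delimited
// string, so nothing in the source resembles a relative URL.
const myCategories = ["home", "hardware", "tools"];

// Join with a non-slash delimiter only where a single string is needed.
const categoryLabel = myCategories.join(" > ");

console.log(categoryLabel); // home > hardware > tools
```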

Types of Google Web Crawlers

Google uses several different web crawlers to index content across the internet for its search engine and ad services. Each type of crawler is designed for specific media formats and devices. Here are some examples:

Googlebot Smartphone

Googlebot Smartphone crawlers are optimized to index mobile-friendly web pages.

They can render and understand layout and content designed for small screens. This ensures that smartphone users searching on Google get the most relevant mobile versions of websites in their search results.

Googlebot Desktop

Googlebot Desktop crawlers index traditional desktop and laptop web pages.

They focus on content and navigation optimized for larger screens. Desktop crawlers help surface the standard computer views of websites to those performing searches from a non-mobile device.

Googlebot Image

Googlebot Image crawlers index image files on the internet.

They allow Google to understand images and integrate them into image searches by scanning image files and extracting metadata, file names, and visual characteristics.

Pro Tip: By using optimized image names and metadata, you can help people discover your visual content more easily through Google Image searches.

Googlebot News

Googlebot News crawlers specialize in finding and understanding news articles and content.

They aim to index breaking news stories and updates from publishers comprehensively. News content must be processed and indexed much more quickly than evergreen content due to its time-sensitive nature.

This supports Google News and ensures timely coverage of current events through its news search features.

Googlebot Video

Googlebot Video crawlers can discover, parse, and verify embedded video files on the web.

As such, they help integrate video content into Google’s search results for relevant video queries and feed video content into Google’s video search and recommendation features.

Googlebot Adsbot

Googlebot Adsbot crawls and analyzes landing page content and compares it to the ad copy, which is factored into the quality score. As advertising makes up a large portion of content online, Googlebot Adsbot plays an important supporting role. It is designed specifically for ads and promotional material.

Googlebot Storebot

Google also uses Storebot as a specialized web crawler. Storebot focuses its crawling exclusively on e-commerce sites, retailers, and other commercial pages. It parses product listings, pricing information, reviews, and other details that are important for shopping queries.

Storebot provides a deep analysis of pricing, availability, and other commerce-related data across different stores. This information helps Googlebot comprehensively understand these commercial corners of the web. With Storebot’s assistance, Googlebot can better serve users searching for products online through Search and other Google services.

These are the most popular crawlers that most sites will encounter; you can also see Google’s full list of crawlers.

Why You Should Make Your Site Available for Crawling

To ensure your website is correctly represented in search results and evolves with changes to search algorithms, it’s important to make your site available for crawling. Here are a few key reasons why:

Get More Organic Traffic

When Google’s crawler visits your site, it helps search engines understand the structure, content and linking relationships of your website. This allows them to accurately index your pages so searchers can find them in the SERPs.

As Google’s John Mueller says, “Indexing is the process where Google discovers, renders, and understands your pages so they can be included in Google search results.”

In other words, the more crawlable your site is, the better job search engines can do at indexing all of your important pages and serving them in search results.

Improve Your Site Performance and UX

As crawlers render and analyze your pages, they can provide feedback on site speed and content format that impacts user experience.

This information is typically available within your website analytics or search console tools.

Issues found during crawling, like slow pages or pages loading improperly, can hurt users’ interactions with your site, so you can leverage this feedback to improve the overall user experience across your website.

Pro Tip: Keeping your site well-organized and crawl-optimized can ensure a better experience for search engine crawlers and real users. According to Search Engine Watch, a better user interface leads to increased time on site and a lower bounce rate.

Why You Should Not Make Your Site Crawlable

We recommend that development or staging sites are blocked from being crawled so they don’t inadvertently end up in the search index. It’s not enough to just hope that Google won’t find them; we need to be explicit because, remember, Google uses a variety of methods to discover URLs.

Just remember that when you push your new site live, check that you didn’t overwrite the existing robots.txt file with the one that’s blocking access. I can’t tell you how many times I’ve seen this happen.
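A launch checklist can include an automated check for this mistake. The following is a simplified sketch under stated assumptions: it only looks for a blanket `Disallow: /` in a group that names `*` or the given crawler, and it ignores `Allow` rules, wildcards, and the fact that a crawler with its own group ignores the `*` group. The function and variable names are hypothetical.

```javascript
// Returns true when the robots.txt text contains a group that applies
// to the given crawler (or to "*") and disallows the whole site.
// Simplified sketch: it ignores Allow rules and wildcards.
function blocksEntireSite(robotsTxt, crawler = "googlebot") {
  let agents = [];          // user-agents of the group being read
  let readingRules = false; // true once the group has a rule line
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split("#")[0].trim(); // drop comments
    const sep = line.indexOf(":");
    if (sep === -1) continue;
    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();
    if (field === "user-agent") {
      // A user-agent line after rules starts a new group.
      if (readingRules) {
        agents = [];
        readingRules = false;
      }
      agents.push(value.toLowerCase());
    } else if (field === "disallow" || field === "allow") {
      readingRules = true;
      const applies = agents.includes("*") || agents.includes(crawler);
      if (field === "disallow" && value === "/" && applies) return true;
    }
  }
  return false;
}

const stagingRobots = "User-agent: *\nDisallow: /\n";
const liveRobots = "User-agent: *\nDisallow: /search\n";

console.log(blocksEntireSite(stagingRobots)); // true
console.log(blocksEntireSite(liveRobots));    // false
```

Running a check like this against the production robots.txt right after a deploy would flag the staging file before Googlebot acts on it.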

If you have sensitive, private, or personal information, or other content that you do not want in the search results, then that should also be blocked. However, if you happen to find any content in the search results that needs to be removed, you can block it using robots.txt, the meta robots noindex tag, password protection, or the X-Robots-Tag in the HTTP header, then use the URL removal tool in Google Search Console.
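As a minimal sketch of the HTTP header option, the helper below decides which extra response headers to send based on the hostname. The hostnames and the function name are placeholders; how you attach the headers depends on your server or framework.

```javascript
// Hypothetical hostnames that should never appear in search results.
const NON_PUBLIC_HOSTS = new Set(["staging.example.com", "dev.example.com"]);

// Returns extra response headers for a request to the given hostname.
// "X-Robots-Tag: noindex, nofollow" asks search engines not to index
// the response or follow its links.
function robotsHeadersFor(hostname) {
  if (NON_PUBLIC_HOSTS.has(hostname.toLowerCase())) {
    return { "X-Robots-Tag": "noindex, nofollow" };
  }
  return {};
}

console.log(robotsHeadersFor("staging.example.com"));
console.log(robotsHeadersFor("www.example.com"));
```

Keep in mind that a crawler only sees this header, or a meta robots tag, if it is allowed to fetch the URL. A page that is disallowed in robots.txt is never requested, so its noindex directive goes unread.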

How to Optimize Your Site for Crawling

Providing a sitemap can help Google discover pages on your site more quickly, which affects how quickly they can be added or updated in the search results. But there are many other considerations, including your internal link structure, how the Content Management System (CMS) functions, site search, and other site features.
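For readers who have not seen one, here is a minimal sketch of how an XML sitemap could be generated. The `urlset`, `url`, `loc` and `lastmod` elements and the namespace come from the sitemaps.org protocol; the function names, example.com URLs and dates are placeholders, and most CMS platforms generate this file for you.

```javascript
// Escape the characters that must be entity-encoded in XML text.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a sitemap from entries of the form { loc, lastmod }.
function buildSitemap(entries) {
  const urls = entries.map(
    ({ loc, lastmod }) =>
      `  <url>\n    <loc>${escapeXml(loc)}</loc>\n` +
      `    <lastmod>${lastmod}</lastmod>\n  </url>`
  );
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}

// Hypothetical pages; the URLs and dates are placeholders.
const sitemapXml = buildSitemap([
  { loc: "https://www.example.com/", lastmod: "2024-01-15" },
  { loc: "https://www.example.com/tools?type=hammer&size=l", lastmod: "2024-01-10" },
]);
console.log(sitemapXml);
```

Note the escaping step: an unescaped `&` in a URL is one of the formatting errors that can make a sitemap invalid.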

Here are some best practices that can help Google find and understand your most valuable pages.

- Ensure easy accessibility and good UX: Make sure your site structure is logically organized and internal links allow smooth navigation between pages. Google and users will more readily crawl and engage with a site that loads quickly and is easy to navigate.

- Use meta tags: Meta descriptions and titles inform Google about your page content. Ensure each page has unique, keyword-rich meta tags to clarify what the page is about. Web crawlers will visit your pages to read the meta tags and understand what content is on each page.

- Create high-quality, helpful content: Original, regularly updated content encourages repeat visits and prompts search engines to re-crawl pages. Google favors sites with fresh, in-depth material covering subject areas completely.

- Increase content distribution and update old pages: Leverage social sharing and internal linking to distribute valuable content. Keep key pages like the homepage updated monthly. Outdated pages may be excluded from search results.

- Use organic keywords: Include your target keywords and related synonyms in a natural, readable way within headings, page text, and alt tags. This helps search engines understand the topic of your content. Overly repetitive or unnatural use of keywords can be counterproductive.

- If you have a very large site, ensure you have enough server resources so Googlebot doesn’t slow down or pause crawling. It tries to be polite and monitors server response times in real time, so it doesn’t slow down your site for users.

- Ensure all of your important pages have a good internal linking structure to allow Googlebot to find them. Your important pages should typically be no more than 3 clicks from the homepage.

- Ensure your XML sitemaps are up to date and formatted correctly.

- Be mindful of crawl traps and block them from being crawled. These are sections of a site that can produce an almost infinite number of URLs; the classic example is a web-based calendar, where there are links to pages for multiple years, months and days.

- Block pages that offer no, or very little, value. Many ecommerce sites have filtering, or faceted search, and sorting functions that can also add hundreds of thousands, or even millions, of additional URLs on your site.

- Block internal site search results, as these can also generate thousands or millions of additional URLs.

- For very large sites, try to get external deep links as part of your link building strategy, as that also helps provide Google with signals that those pages are important. Those deep inbound links also help the other deeper pages on the site.

- Link to the canonical version of your pages by avoiding the use of tracking parameters or click trackers like bit.ly. This also helps avoid redirect chains, which just add more overhead for your server, for crawlers, and for users.

- Ensure the Content Delivery Network (CDN) that hosts resources like images, JavaScript and CSS files needed to render the page is not blocking Googlebot.

- Avoid cloaking, i.e. detecting the user-agent string and serving different content to Googlebot than to users. This is not only more difficult to set up, maintain, and troubleshoot, but may also be against Google’s Webmaster Guidelines.
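The advice about tracking parameters can be applied when internal links are generated. The sketch below is one possible approach, not a definitive rule set: it assumes that `utm_*`, `gclid` and `fbclid` are the parameters to drop, and the function name is hypothetical. It uses the standard `URL` API available in browsers and Node.js.

```javascript
// Hypothetical list of tracking parameters to drop from internal links.
const TRACKING_PARAMS = new Set(["gclid", "fbclid"]);

function stripTracking(link) {
  const url = new URL(link);
  // Copy the keys first so deleting does not disturb the iteration.
  for (const key of [...url.searchParams.keys()]) {
    if (key.startsWith("utm_") || TRACKING_PARAMS.has(key)) {
      url.searchParams.delete(key);
    }
  }
  return url.toString();
}

console.log(stripTracking("https://www.example.com/tools?utm_source=news&page=2"));
// https://www.example.com/tools?page=2
```

Parameters that change the content, such as `page` here, are left alone; only the ones used purely for tracking are removed.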

How Can You Resolve Crawling Issues?

There are several steps you can take to resolve crawling issues and ensure Googlebot is interacting with your site appropriately:

- Register your site with Google Search Console: This is Google’s dashboard that offers Google’s view of how it sees your website. It provides many reports to identify problem areas on your site and a way for Google to contact you. The indexing reports will show you areas of your site that have problems and provide a sample list of affected URLs.

- Audit robots.txt: Carefully audit your robots.txt file and ensure it doesn’t contain any disallow rules blocking important site sections from being crawled. A single typing mistake could prevent proper discovery.

- Crawl your own site: There are many crawling tools available that can be run on your own PC, as well as SaaS-based services at varying price points. They will provide a summary and a series of reports on what they find, which is often more comprehensive than Google Search Console, as those reports usually only show a sample of URLs with issues.

- Check for redirects: Double-check your .htaccess file and server configurations for any redirect rules that may unintentionally force Googlebot to exit prematurely. Check all the redirects to ensure they function properly.

- Identify low-quality links: Audit all backlinks to find and disavow those coming from link schemes or unrelated sites that could be seen as manipulative. Disavowing low-quality links may help unblock crawling to valuable pages.

- Assess the XML sitemap file: Validate that the sitemap.xml file points to all intended internal pages and is generated regularly. Outdated sitemaps will be missing your new content, which may hinder it from being discovered and crawled. If your XML sitemaps contain links to URLs that redirect or return 4XX errors, search engines may not trust them or use them for future crawling, which defeats their entire purpose.

- Analyze server response codes: In Google Search Console, check the crawl stats reports and troubleshoot issues with the URL Inspection tool. Carefully monitor page response codes for timeouts and blocked resources.

- Analyze your log files: There are tools and services that will allow you to upload your server logs and analyze them. This can be useful for large sites that are having problems getting content discovered and indexed.
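For a quick look before reaching for a dedicated tool, a log check can be sketched in a few lines. This example assumes logs in the common "combined" format and simply trusts the user-agent string, which anyone can spoof, so treat the counts as indicative. The function name and sample lines are placeholders.

```javascript
// Matches the request, status and user-agent fields of a log line in
// the common "combined" format.
const LOG_LINE = /"(?:GET|HEAD|POST) (\S+) [^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"/;

// Count requests whose user-agent mentions Googlebot, grouped by status.
function googlebotStatusCounts(logText) {
  const counts = {};
  for (const line of logText.split("\n")) {
    const match = LOG_LINE.exec(line);
    if (!match) continue;
    const [, , status, userAgent] = match;
    if (!/googlebot/i.test(userAgent)) continue;
    counts[status] = (counts[status] ?? 0) + 1;
  }
  return counts;
}

// Hypothetical log excerpt; IPs, paths and dates are placeholders.
const sampleLog = [
  '66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /tools HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
  '66.249.66.1 - - [10/Jan/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
  '203.0.113.7 - - [10/Jan/2024:10:00:09 +0000] "GET /tools HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
].join("\n");

console.log(JSON.stringify(googlebotStatusCounts(sampleLog))); // {"200":1,"404":1}
```

A rising share of 404 or 5xx responses in a report like this is a signal to look at the redirect, sitemap and server checks listed above.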

A Hat Tip to Bingbot

While this article is focused on Googlebot, we don’t want to forget about Bingbot, which is used by Bing. Many of the same principles that work for Googlebot also work for Bingbot, so you don’t usually need to do anything different.

However, at times Bingbot can be quite aggressive in its crawl rate, so if you find that you need to reclaim server resources, you can adjust the crawl rate in Bing Webmaster Tools with the Crawl Control settings. I’ve done this in the past on huge sites to bias most of the crawling to happen during off-peak hours; your server admins will thank you for that.

Conclusion

Google’s advice is not to optimize your site for Google, but to optimize it for users, and that’s true when it comes to the content and page experience. However, as we’ve laid out in this article, there are a lot of intricacies in the way a website is developed and maintained that can affect how Googlebot discovers and crawls your site. For a small to medium-sized site that might be powered by WordPress, many of these considerations are not a factor. They start to become increasingly important for e-commerce sites and other large sites that may have a custom Content Management System (CMS) and site features that can potentially generate thousands or millions of unnecessary URLs.

Googlebot will continue to play a critical role in how Google understands and indexes the internet in 2024 and beyond. While new technologies may emerge to analyze online content in different ways, Googlebot’s tried and true web crawling methodology remains highly effective at discovering new and relevant information at a massive scale.

At Vizion Interactive, we have the expertise, experience, and enthusiasm to get results and keep clients happy! Learn more about how our SEO Audits, Local Listing Management, Website Redesign Consulting, and B2B digital marketing services can increase sales and boost your ROI. But don’t just take our word for it; check out what our clients have to say, along with our case studies.
