Google offers the PageSpeed Insights API to help SEO pros and developers by mixing real-world data with simulation data, providing load performance timing data related to web pages.

The difference between Google PageSpeed Insights (PSI) and Lighthouse is that PSI involves both real-world and lab data, while Lighthouse performs a page loading simulation by modifying the connection and user-agent of the device.

Another point of difference is that PSI doesn’t supply any information related to web accessibility, SEO, or progressive web apps (PWAs), while Lighthouse provides all of the above.

Thus, when we use the PageSpeed Insights API for a bulk URL loading performance test, we won’t have any data for accessibility.

However, PSI provides more information related to page speed performance, such as “DOM Size,” “Deepest DOM Child Element,” “Total Task Count,” and “DOM Content Loaded” timing.

One more advantage of the PageSpeed Insights API is that it gives the “observed metrics” and “actual metrics” different names.

In this guide, you will learn:

- How to create a production-level Python script.

- How to use APIs with Python.

- How to construct data frames from API responses.

- How to analyze the API responses.

- How to parse URLs and process URL requests’ responses.

- How to store the API responses with proper structure.

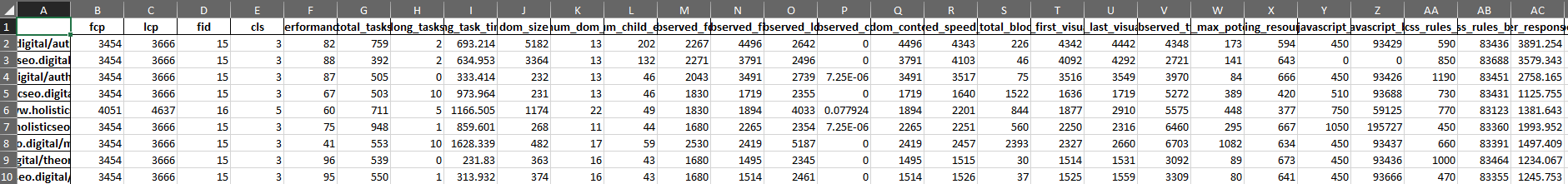

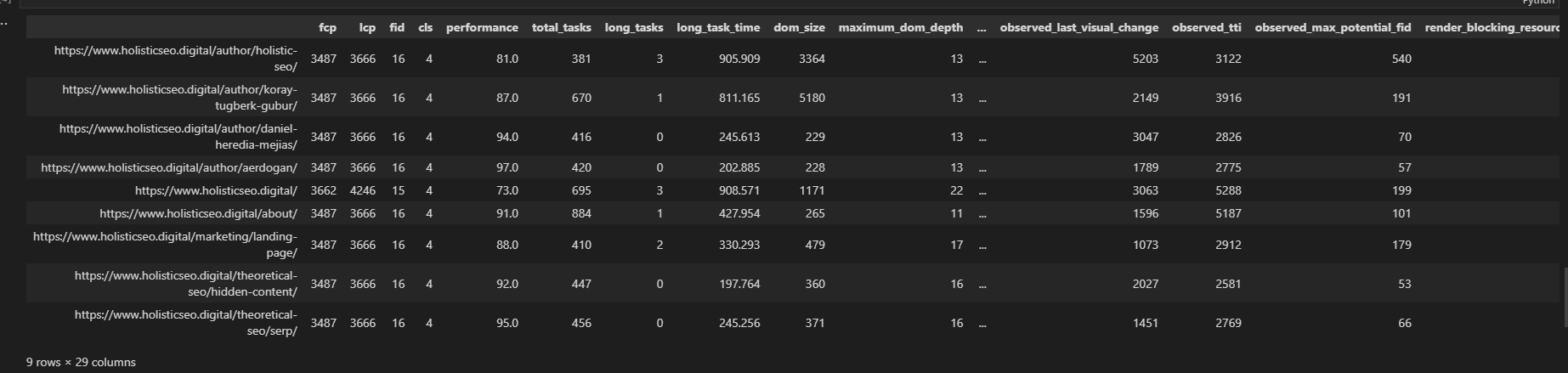

An illustration output of the Page Speed Insights API telephone with Python is below.

Screenshot from author, June 2022

Screenshot from author, June 2022

Libraries For Using PageSpeed Insights API With Python

The necessary libraries to use the PSI API with Python are below.

- Advertools retrieves the testing URLs from the sitemap of a website.

- Pandas constructs the data frame and flattens the JSON output of the API.

- Requests makes a request to the specific API endpoint.

- JSON takes the API response and puts it into a dictionary.

- Datetime adds the current date to the name of the output file.

- URLlib parses the URL of the test subject website.

How To Use PSI API With Python?

To use the PSI API with Python, follow the steps below.

- Get a PageSpeed Insights API key.

- Import the necessary libraries.

- Parse the URL for the test subject website.

- Take the Date of Moment for the file name.

- Take URLs into a list from a sitemap.

- Choose the metrics that you want from the PSI API.

- Create a For Loop for taking the API Response for all URLs.

- Construct the data frame with the chosen PSI API metrics.

- Output the results in the form of XLSX.

1. Get PageSpeed Insights API Key

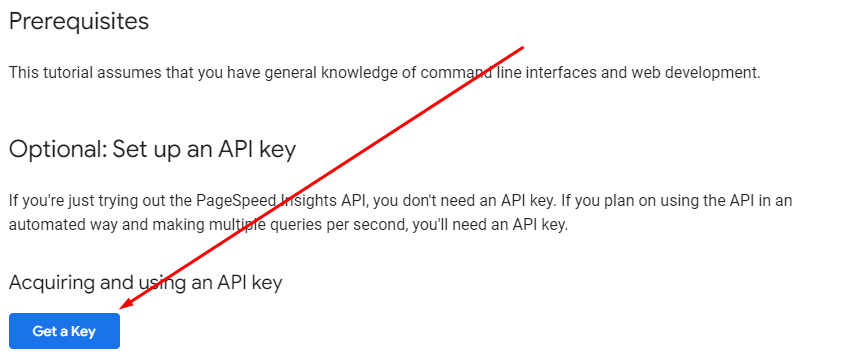

Use the PageSpeed Insights API Documentation to get the API Key.

Click the “Get a Key” button below.

Image from developers.google.com, June 2022

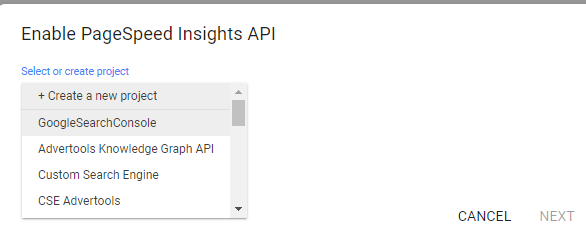

Choose a project that you have created in Google Developer Console.

Image from developers.google.com, June 2022

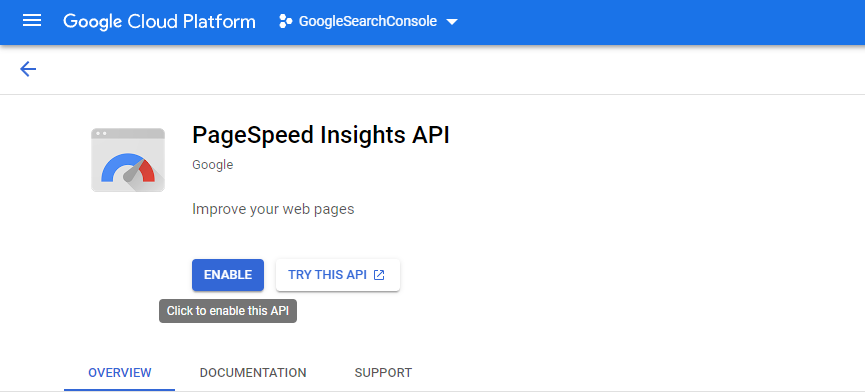

Enable the PageSpeed Insights API on that specific project.

Image from developers.google.com, June 2022

You will need to use the specific API Key in your API Requests.
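As a minimal sketch of how the key ends up in a request, the snippet below builds the request URL for the `runPagespeed` endpoint used later in this guide. The helper name `build_psi_url` and the placeholder key `YOUR_API_KEY` are assumptions for illustration; `urlencode` from the standard library percent-encodes the tested page URL so that its own query string cannot interfere with the API parameters.

```python
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url, api_key, strategy="mobile", locale="en"):
    """Return the PSI request URL with every parameter percent-encoded."""
    params = {
        "url": page_url,
        "strategy": strategy,
        "locale": locale,
        "key": api_key,  # the key created in the Google Developer Console
    }
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


# "YOUR_API_KEY" is a placeholder, not a working key.
example = build_psi_url("https://www.example.com/", "YOUR_API_KEY")
print(example)
```

Keeping the key in one place (for example, an environment variable read once) may make it easier to avoid committing it to a repository.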

2. Import The Necessary Libraries

Use the lines below to import the fundamental libraries.

    import advertools as adv
    import pandas as pd
    import requests
    import json
    from datetime import datetime
    from urllib.parse import urlparse

3. Parse The URL For The Test Subject Website

To parse the URL of the subject website, use the code structure below.

    domain = urlparse(sitemap_url)
    domain = domain.netloc.split(".")[1]

The “domain” variable is the parsed version of the sitemap URL.

The “netloc” represents the specific URL’s domain section. When we split it with the “.” it takes the “middle section,” which represents the domain name.

Here, “0” is for “www,” “1” is for the “domain name,” and “2” is for the “domain extension,” if we split it with “.”
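The index “1” only points at the domain name when the host starts with “www.” As a hedged sketch, the hypothetical helper `domain_label` below counts from the right instead, so it also works for hosts without “www.” It is still an approximation: for multi-part extensions such as “.co.uk” it would return “co,” so treat it as an assumption to adapt to your own sitemap URL.

```python
from urllib.parse import urlparse


def domain_label(url):
    """Return the label just before the last dot-separated part of the host."""
    host = urlparse(url).netloc
    labels = host.split(".")
    # "www.example.com" -> labels[-2] is "example"
    # "example.com"     -> labels[-2] is "example" (labels[1] would be "com")
    return labels[-2] if len(labels) >= 2 else host


with_www = domain_label("https://www.example.com/sitemap.xml")
without_www = domain_label("https://example.com/sitemap.xml")
print(with_www, without_www)
```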

4. Take The Date Of Moment For File Name

To take the date of the specific function call moment, use the “datetime.now” method.

Datetime.now provides the specific time of the specific moment. Use “strftime” with the “%Y”, “%m”, and “%d” values. “%Y” is for the year. “%m” and “%d” are numeric values for the specific month and day.

    date = datetime.now().strftime("%Y_%m_%d")

5. Take URLs Into A List From A Sitemap

To take the URLs into a list form from a sitemap file, use the code block below.

    sitemap = adv.sitemap_to_df(sitemap_url)
    sitemap_urls = sitemap["loc"].to_list()

If you read the Python Sitemap Health Audit, you can learn further information about sitemaps.

6. Choose The Metrics That You Want From PSI API

To choose the PSI API response JSON properties, you should look at the JSON file itself.

It is highly relevant to the reading, parsing, and flattening of JSON objects.

It is even related to Semantic SEO, thanks to the concept of “directed graph,” and “JSON-LD” structured data.

In this article, we won’t focus on examining the specific PSI API Response’s JSON hierarchies.

You can see the metrics that I have chosen to gather from the PSI API. It is richer than the basic default output of the PSI API, which only gives the Core Web Vitals Metrics, or Speed Index-Interaction to Next Paint, Time to First Byte, and First Contentful Paint.

Of course, it also gives “suggestions” by saying “Avoid Chaining Critical Requests,” but there is no need to put a sentence into a data frame.

In the future, these suggestions, or even every individual chain event, with their KB and MS values, can be taken into a single column with the name “psi_suggestions.”
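One possible shape for such a “psi_suggestions” column is sketched below. It is not part of the script in this guide: the function name `psi_suggestions`, the output format, and the sample response are assumptions, and the sample values are made up. It relies only on the `title` of each audit and the `overallSavingsMs` and `overallSavingsBytes` fields that some audits report under `details`.

```python
def psi_suggestions(response):
    """Join audits that report a positive time saving into one text cell."""
    audits = response.get("lighthouseResult", {}).get("audits", {})
    parts = []
    for audit in audits.values():
        details = audit.get("details") or {}
        saved_ms = details.get("overallSavingsMs", 0)
        if saved_ms and saved_ms > 0:
            saved_kb = details.get("overallSavingsBytes", 0) / 1024
            title = audit.get("title", "untitled")
            parts.append(f"{title}: {saved_ms:.0f} ms, {saved_kb:.1f} KB")
    return " | ".join(parts)


# Made-up sample that mimics the nesting of a PSI response.
sample = {
    "lighthouseResult": {
        "audits": {
            "unused-javascript": {
                "title": "Reduce unused JavaScript",
                "details": {"overallSavingsMs": 300, "overallSavingsBytes": 20480},
            },
            "dom-size": {
                "title": "Avoid an excessive DOM size",
                "details": {"items": []},
            },
        }
    }
}

suggestions = psi_suggestions(sample)
print(suggestions)
```

Audits without a reported time saving are skipped, so the cell stays short enough to read in a spreadsheet.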

For a start, you can check the metrics that I have chosen; a good number of them will probably be new to you.

The first section of the PSI API metrics is below.

    fid = []
    lcp = []
    cls_ = []
    url = []
    fcp = []
    performance_score = []
    total_tasks = []
    total_tasks_time = []
    long_tasks = []
    dom_size = []
    maximum_dom_depth = []
    maximum_child_element = []
    observed_fcp = []
    observed_fid = []
    observed_lcp = []
    observed_cls = []
    observed_fp = []
    observed_fmp = []
    observed_dom_content_loaded = []
    observed_speed_index = []
    observed_total_blocking_time = []
    observed_first_visual_change = []
    observed_last_visual_change = []
    observed_tti = []
    observed_max_potential_fid = []

This section includes all the observed and simulated fundamental page speed metrics, along with some non-fundamental ones, like “DOM Content Loaded,” or “First Meaningful Paint.”

The second section of PSI Metrics focuses on possible byte and time savings from the unused code amount.

    render_blocking_resources_ms_save = []
    unused_javascript_ms_save = []
    unused_javascript_byte_save = []
    unused_css_rules_ms_save = []
    unused_css_rules_bytes_save = []

A third section of the PSI metrics focuses on server response time and on the benefits of using responsive images, or the harm of not using them.

    possible_server_response_time_saving = []
    possible_responsive_image_ms_save = []

Note: Overall Performance Score comes from “performance_score.”
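An alternative to keeping one list per metric is to describe each column as a path into the response, sketched below under stated assumptions. The names `METRIC_PATHS`, `dig`, and `extract_row` are hypothetical, and only three of the metrics are shown; the key paths are the same ones the for loop in the next step reads. A missing key yields `None` instead of raising, which may help with URLs that have no real-world field data.

```python
# Column name -> path of keys and list indexes inside the PSI response.
METRIC_PATHS = {
    "lcp": ("loadingExperience", "metrics", "LARGEST_CONTENTFUL_PAINT_MS", "percentile"),
    "dom_size": ("lighthouseResult", "audits", "dom-size", "details", "items", 0, "value"),
    "unused_javascript_ms_save": (
        "lighthouseResult", "audits", "unused-javascript", "details", "overallSavingsMs",
    ),
}


def dig(data, path, default=None):
    """Follow a path through nested dicts and lists; return default if a step is missing."""
    current = data
    for step in path:
        try:
            current = current[step]
        except (KeyError, IndexError, TypeError):
            return default
    return current


def extract_row(response):
    """Build one row (a dict) holding every configured metric for a response."""
    return {column: dig(response, path) for column, path in METRIC_PATHS.items()}


# Made-up partial response: only the DOM size audit is present.
sample = {"lighthouseResult": {"audits": {"dom-size": {"details": {"items": [{"value": 812}]}}}}}
row = extract_row(sample)
print(row)
```

A list of such rows can be passed to `pd.DataFrame` directly, which avoids keeping many parallel lists in sync.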

7. Create A For Loop For Taking The API Response For All URLs

The for loop takes all of the URLs from the sitemap file and uses the PSI API for each of them one by one. The for loop for PSI API automation has several sections.

The first section of the PSI API for loop starts with duplicate URL prevention.

In the sitemaps, you can see a URL that appears multiple times. This section prevents it.
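The loop in this guide adds a missing trailing slash so that both variants of a URL produce the same request. A hedged sketch of taking that one step further is below: the hypothetical helpers `normalize` and `unique_urls` drop repeated URLs before any request is made, which may save API quota. Note the assumption that appending “/” is valid for every URL; it would not be for URLs ending in a file name such as “.html”.

```python
def normalize(url):
    """Return the URL with a trailing slash, so both variants compare equal."""
    return url if url.endswith("/") else url + "/"


def unique_urls(urls):
    """Keep the first occurrence of each normalized URL, preserving order."""
    return list(dict.fromkeys(normalize(u) for u in urls))


deduplicated = unique_urls(
    ["https://a.com/x", "https://a.com/x/", "https://a.com/", "https://a.com/x"]
)
print(deduplicated)
```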

    for i in sitemap_urls[:9]:
        # Add the trailing slash when it is missing, so that both URL variants
        # produce the same request and do not override each other's data.
        if i.endswith("/"):
            r = requests.get(f"https://www.googleapis.com/pagespeedonline/v5/runPagespeed?url={i}&strategy=mobile&locale=en&key={api_key}")
        else:
            r = requests.get(f"https://www.googleapis.com/pagespeedonline/v5/runPagespeed?url={i}/&strategy=mobile&locale=en&key={api_key}")

Remember to check the “api_key” at the end of the endpoint for the PageSpeed Insights API.

Check the status code. In the sitemaps, there might be non-200 status code URLs; these should be cleaned.

        if r.status_code == 200:
            #print(r.json())
            data_ = json.loads(r.text)
            url.append(i)

The next section reads the specific metrics from the “data_” dictionary that we have just created and appends them to the lists defined earlier.

            fcp.append(data_["loadingExperience"]["metrics"]["FIRST_CONTENTFUL_PAINT_MS"]["percentile"])
            fid.append(data_["loadingExperience"]["metrics"]["FIRST_INPUT_DELAY_MS"]["percentile"])
            lcp.append(data_["loadingExperience"]["metrics"]["LARGEST_CONTENTFUL_PAINT_MS"]["percentile"])
            cls_.append(data_["loadingExperience"]["metrics"]["CUMULATIVE_LAYOUT_SHIFT_SCORE"]["percentile"])
            performance_score.append(data_["lighthouseResult"]["categories"]["performance"]["score"] * 100)

The next section focuses on the “total task” count and DOM Size.

            total_tasks.append(data_["lighthouseResult"]["audits"]["diagnostics"]["details"]["items"][0]["numTasks"])
            total_tasks_time.append(data_["lighthouseResult"]["audits"]["diagnostics"]["details"]["items"][0]["totalTaskTime"])
            long_tasks.append(data_["lighthouseResult"]["audits"]["diagnostics"]["details"]["items"][0]["numTasksOver50ms"])
            dom_size.append(data_["lighthouseResult"]["audits"]["dom-size"]["details"]["items"][0]["value"])

The next section takes the “DOM Depth” and “Deepest DOM Element.”

            maximum_dom_depth.append(data_["lighthouseResult"]["audits"]["dom-size"]["details"]["items"][1]["value"])
            maximum_child_element.append(data_["lighthouseResult"]["audits"]["dom-size"]["details"]["items"][2]["value"])

The next section takes the specific observed test results during our PageSpeed Insights API call.

            observed_dom_content_loaded.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedDomContentLoaded"])
            # Note: this line also reads "observedDomContentLoaded", so observed_fid mirrors the value above.
            observed_fid.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedDomContentLoaded"])
            observed_lcp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["largestContentfulPaint"])
            observed_fcp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["firstContentfulPaint"])
            observed_cls.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["totalCumulativeLayoutShift"])
            observed_speed_index.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedSpeedIndex"])
            observed_total_blocking_time.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["totalBlockingTime"])
            observed_fp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedFirstPaint"])
            observed_fmp.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["firstMeaningfulPaint"])
            observed_first_visual_change.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedFirstVisualChange"])
            observed_last_visual_change.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["observedLastVisualChange"])
            observed_tti.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["interactive"])
            observed_max_potential_fid.append(data_["lighthouseResult"]["audits"]["metrics"]["details"]["items"][0]["maxPotentialFID"])

The next section takes the possible savings from unused code, in bytes and milliseconds, along with the render-blocking resources.

            render_blocking_resources_ms_save.append(data_["lighthouseResult"]["audits"]["render-blocking-resources"]["details"]["overallSavingsMs"])
            unused_javascript_ms_save.append(data_["lighthouseResult"]["audits"]["unused-javascript"]["details"]["overallSavingsMs"])
            unused_javascript_byte_save.append(data_["lighthouseResult"]["audits"]["unused-javascript"]["details"]["overallSavingsBytes"])
            unused_css_rules_ms_save.append(data_["lighthouseResult"]["audits"]["unused-css-rules"]["details"]["overallSavingsMs"])
            unused_css_rules_bytes_save.append(data_["lighthouseResult"]["audits"]["unused-css-rules"]["details"]["overallSavingsBytes"])

The next section provides the responsive image benefits and server response timing.

            possible_server_response_time_saving.append(data_["lighthouseResult"]["audits"]["server-response-time"]["details"]["overallSavingsMs"])
            possible_responsive_image_ms_save.append(data_["lighthouseResult"]["audits"]["uses-responsive-images"]["details"]["overallSavingsMs"])

The next section makes the function continue to work when a request returns a non-200 status code.

        else:
            continue

Example Usage Of Page Speed Insights API With Python For Bulk Testing

To use the specific code blocks, put them into a Python function.
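A minimal sketch of such a function is below, assuming a hypothetical `fetch` callable that returns a status code and the parsed JSON. In a real run it would wrap `requests.get`; here `fake_fetch` returns canned data so the example runs without an API key. Only the performance score is collected to keep the sketch short, and the names `collect_scores` and `fake_fetch` are not part of the original script.

```python
def collect_scores(urls, fetch):
    """Call fetch(url) for each URL and keep rows for 200 responses only.

    fetch is a hypothetical callable returning (status_code, parsed_json).
    """
    rows = []
    for url in urls:
        status, data = fetch(url)
        if status != 200:
            continue  # skip URLs that did not return a usable response
        try:
            score = data["lighthouseResult"]["categories"]["performance"]["score"] * 100
        except (KeyError, TypeError):
            score = None  # value missing; keep the row so the gap stays visible
        rows.append({"url": url, "performance_score": score})
    return rows


def fake_fetch(url):
    """Stand-in for a real HTTP request; returns canned data for the demo."""
    if url.endswith("/missing/"):
        return 404, {}
    return 200, {"lighthouseResult": {"categories": {"performance": {"score": 0.5}}}}


rows = collect_scores(
    ["https://www.example.com/", "https://www.example.com/missing/"],
    fake_fetch,
)
print(rows)
```

The returned list of dictionaries can be handed to `pd.DataFrame(rows)` and written out with `DataFrame.to_excel` to cover the last two steps of the list at the start of this guide.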

Run the script, and you will get 29 page speed-related metrics in the columns below.

Screenshot from author, June 2022

Conclusion

The PageSpeed Insights API provides different types of page loading performance metrics.

It demonstrates how Google engineers perceive the concept of page loading performance, and possibly use these metrics from a ranking, UX, and quality-understanding point of view.

Using Python for bulk page speed tests gives you a snapshot of the entire website to help analyze the possible user experience, crawl efficiency, conversion rate, and ranking improvements.


Featured Image: Dundanim/Shutterstock
