ARTICLE AD BOX

Researchers tested the thought that an AI exemplary whitethorn person an vantage successful self-detecting its ain contented due to the fact that the detection was leveraging the aforesaid grooming and datasets. What they didn’t expect to find was that retired of the 3 AI models they tested, the contented generated by 1 of them was truthful undetectable that adjacent the AI that generated it couldn’t observe it.

The survey was conducted by researchers from the Department of Computer Science, Lyle School of Engineering astatine Southern Methodist University.

AI Content Detection

Many AI detectors are trained to look for the telltale signals of AI generated content. These signals are called “artifacts” which are generated due to the fact that of the underlying transformer technology. But different artifacts are unsocial to each instauration exemplary (the Large Language Model the AI is based on).

These artifacts are unsocial to each AI and they originate from the distinctive grooming information and good tuning that is ever antithetic from 1 AI exemplary to the next.

The researchers discovered grounds that it’s this uniqueness that enables an AI to person a greater occurrence successful self-identifying its ain content, importantly amended than trying to place contented generated by a antithetic AI.

Bard has a amended accidental of identifying Bard-generated contented and ChatGPT has a higher occurrence complaint identifying ChatGPT-generated content, but…

The researchers discovered that this wasn’t existent for contented that was generated by Claude. Claude had trouble detecting contented that it generated. The researchers shared an thought of wherefore Claude was incapable to observe its ain contented and this nonfiction discusses that further on.

This is the thought down the probe tests:

“Since each exemplary tin beryllium trained differently, creating 1 detector instrumentality to observe the artifacts created by each imaginable generative AI tools is hard to achieve.

Here, we make a antithetic attack called self-detection, wherever we usage the generative exemplary itself to observe its ain artifacts to separate its ain generated substance from quality written text.

This would person the vantage that we bash not request to larn to observe each generative AI models, but we lone request entree to a generative AI exemplary for detection.

This is simply a large vantage successful a satellite wherever caller models are continuously developed and trained.”

See: Google On How Googlebot Handles AI Generated Content

Methodology

The researchers tested 3 AI models:

- ChatGPT-3.5 by OpenAI

- Bard by Google

- Claude by Anthropic

All models utilized were the September 2023 versions.

A dataset of 50 antithetic topics was created. Each AI exemplary was fixed the nonstop aforesaid prompts to make essays of astir 250 words for each of the 50 topics which generated 50 essays for each of the 3 AI models.

Each AI exemplary was past identically prompted to paraphrase their ain contented and make an further effort that was a rewrite of each archetypal essay.

They besides collected 50 quality generated essays connected each of the 50 topics. All of the quality generated essays were selected from the BBC.

The researchers past utilized zero-shot prompting to self-detect the AI generated content.

Zero-shot prompting is simply a benignant of prompting that relies connected the quality of AI models to implicit tasks for which they haven’t specifically trained to do.

The researchers further explained their methodology:

“We created a caller lawsuit of each AI strategy initiated and posed with a circumstantial query: ‘If the pursuing substance matches its penning signifier and prime of words.’ The process is

repeated for the original, paraphrased, and quality essays, and the results are recorded.

We besides added the effect of the AI detection instrumentality ZeroGPT. We bash not usage this effect to comparison show but arsenic a baseline to amusement however challenging the detection task is.”

They besides noted that a 50% accuracy complaint is adjacent to guessing which tin beryllium regarded arsenic fundamentally a level of accuracy that is simply a failure.

Results: Self-Detection

It indispensable beryllium noted that the researchers acknowledged that their illustration complaint was debased and said that they weren’t making claims that the results are definitive.

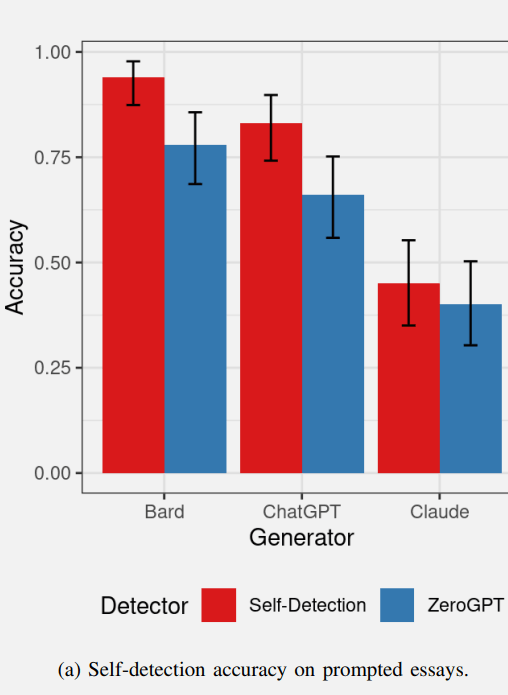

Below is simply a graph showing the occurrence rates of AI self-detection of the archetypal batch of essays. The reddish values correspond the AI self-detection and the bluish represents however good the AI detection instrumentality ZeroGPT performed.

Results Of AI Self-Detection Of Own Text Content

Bard did reasonably good astatine detecting its ain contented and ChatGPT besides performed likewise good astatine detecting its ain content.

ZeroGPT, the AI detection instrumentality detected the Bard contented precise good and performed somewhat little amended successful detecting ChatGPT content.

ZeroGPT fundamentally failed to observe the Claude-generated content, performing worse than the 50% threshold.

Claude was the outlier of the radical due to the fact that it was incapable to to self-detect its ain content, performing importantly worse than Bard and ChatGPT.

The researchers hypothesized that it whitethorn beryllium that Claude’s output contains little detectable artifacts, explaining wherefore some Claude and ZeroGPT were incapable to observe the Claude essays arsenic AI-generated.

So, though Claude was incapable to reliably self-detect its ain content, that turned retired to beryllium a motion that the output from Claude was of a higher prime successful presumption of outputting little AI artifacts.

ZeroGPT performed amended astatine detecting Bard-generated contented than it did successful detecting ChatGPT and Claude content. The researchers hypothesized that it could beryllium that Bard generates much detectable artifacts, making Bard easier to detect.

So successful presumption of self-detecting content, Bard whitethorn beryllium generating much detectable artifacts and Claude is generating little artifacts.

Results: Self-Detecting Paraphrased Content

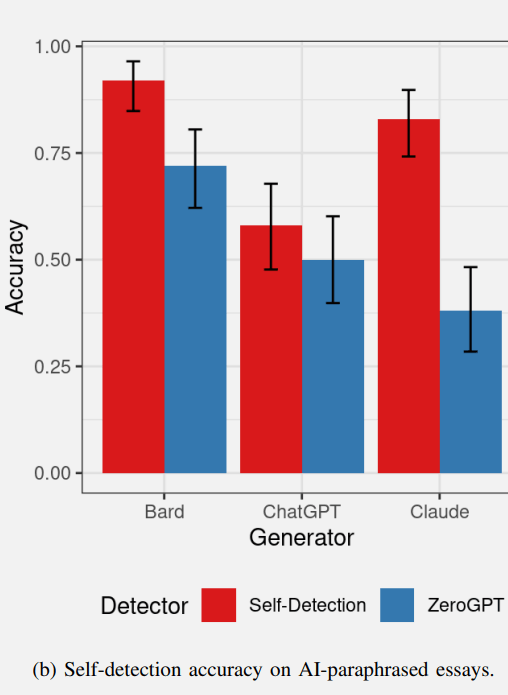

The researchers hypothesized that AI models would beryllium capable to self-detect their ain paraphrased substance due to the fact that the artifacts that are created by the exemplary (as detected successful the archetypal essays) should besides beryllium contiguous successful the rewritten text.

However the researchers acknowledged that the prompts for penning the substance and paraphrasing are antithetic due to the fact that each rewrite is antithetic than the archetypal substance which could consequently pb to a antithetic self-detection results for the self-detection of paraphrased text.

The results of the self-detection of paraphrased substance was so antithetic from the self-detection of the archetypal effort test.

- Bard was capable to self-detect the paraphrased contented astatine a akin rate.

- ChatGPT was not capable to self-detect the paraphrased contented astatine a complaint overmuch higher than the 50% complaint (which is adjacent to guessing).

- ZeroGPT show was akin to the results successful the erstwhile test, performing somewhat worse.

Perhaps the astir absorbing effect was turned successful by Anthropic’s Claude.

Claude was capable to self-detect the paraphrased contented (but it was not capable to observe the archetypal effort successful the erstwhile test).

It’s an absorbing effect that Claude’s archetypal essays seemingly had truthful fewer artifacts to awesome that it was AI generated that adjacent Claude was incapable to observe it.

Yet it was capable to self-detect the paraphrase portion ZeroGPT could not.

The researchers remarked connected this test:

“The uncovering that paraphrasing prevents ChatGPT from self-detecting portion expanding Claude’s quality to self-detect is precise absorbing and whitethorn beryllium the effect of the interior workings of these 2 transformer models.”

Screenshot of Self-Detection of AI Paraphrased Content

These tests yielded astir unpredictable results, peculiarly with respect to Anthropic’s Claude and this inclination continued with the trial of however good the AI models detected each others content, which had an absorbing wrinkle.

Results: AI Models Detecting Each Other’s Content

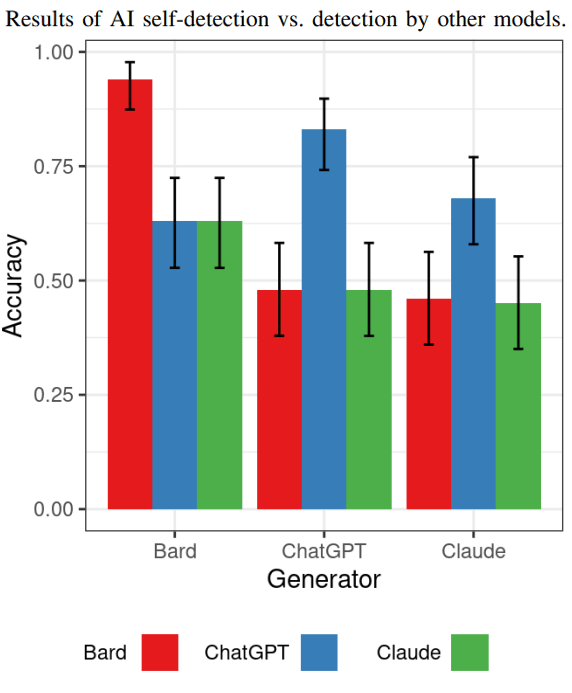

The adjacent trial showed however good each AI exemplary was astatine detecting the contented generated by the different AI models.

If it’s existent that Bard generates much artifacts than the different models, volition the different models beryllium capable to easy observe Bard-generated content?

The results amusement that yes, Bard-generated contented is the easiest to observe by the different AI models.

Regarding detecting ChatGPT generated content, some Claude and Bard were incapable to observe it arsenic AI-generated (justa arsenic Claude was incapable to observe it).

ChatGPT was capable to observe Claude-generated contented astatine a higher complaint than some Bard and Claude but that higher complaint was not overmuch amended than guessing.

The uncovering present is that each of them weren’t truthful bully astatine detecting each others content, which the researchers opined whitethorn amusement that self-detection was a promising country of study.

Here is the graph that shows the results of this circumstantial test:

At this constituent it should beryllium noted that the researchers don’t assertion that these results are conclusive astir AI detection successful general. The absorption of the probe was investigating to spot if AI models could win astatine self-detecting their ain generated content. The reply is mostly yes, they bash a amended occupation astatine self-detecting but the results are akin to what was recovered with ZEROGpt.

The researchers commented:

“Self-detection shows akin detection powerfulness compared to ZeroGPT, but enactment that the extremity of this survey is not to assertion that self-detection is superior to different methods, which would necessitate a ample survey to comparison to galore state-of-the-art AI contented detection tools. Here, we lone analyse the models’ basal quality of aforesaid detection.”

Conclusions And Takeaways

The results of the trial corroborate that detecting AI generated contented is not an casual task. Bard is capable to observe its ain contented and paraphrased content.

ChatGPT tin observe its ain contented but works little good connected its paraphrased content.

Claude is the standout due to the fact that it’s not capable to reliably self-detect its ain contented but it was capable to observe the paraphrased content, which was benignant of weird and unexpected.

Detecting Claude’s archetypal essays and the paraphrased essays was a situation for ZeroGPT and for the different AI models.

The researchers noted astir the Claude results:

“This seemingly inconclusive effect needs much information since it is driven by 2 conflated causes.

1) The quality of the exemplary to make substance with precise fewer detectable artifacts. Since the extremity of these systems is to make human-like text, less artifacts that are harder to observe means the exemplary gets person to that goal.

2) The inherent quality of the exemplary to self-detect tin beryllium affected by the utilized architecture, the prompt, and the applied fine-tuning.”

The researchers had this further reflection astir Claude:

“Only Claude cannot beryllium detected. This indicates that Claude mightiness nutrient less detectable artifacts than the different models.

The detection complaint of self-detection follows the aforesaid trend, indicating that Claude creates substance with less artifacts, making it harder to separate from quality writing”.

But of course, the weird portion is that Claude was besides incapable to self-detect its ain archetypal content, dissimilar the different 2 models which had a higher occurrence rate.

The researchers indicated that self-detection remains an absorbing country for continued probe and suggest that further studies tin absorption connected larger datasets with a greater diverseness of AI-generated text, trial further AI models, a examination with much AI detectors and lastly they suggested studying however punctual engineering whitethorn power detection levels.

Read the archetypal probe insubstantial and the abstract here:

AI Content Self-Detection for Transformer-based Large Language Models

Featured Image by Shutterstock/SObeR 9426

English (US)

English (US)